Information Technology Reference

In-Depth Information

[Plots for Fig. 5.5, panels (a) to (f): panel (a) shows $\psi_{\mathrm{MSE}}$ against $e$ over $[-2, 2]$; panels (b) and (c) show $\psi_{\mathrm{CE}}$ against $y$ over $[0, 1]$; panels (d) to (f) show $\psi_{\mathrm{ZED}}$ against $e$ over $[-2, 2]$.]

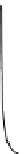

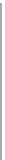

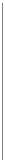

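Fig. 5.5 Weight functions: a) $\psi_{\mathrm{MSE}}$; b) $\psi_{\mathrm{CE}}$ for $t = 1$; c) $\psi_{\mathrm{CE}}$ for $t = 0$; d) $\psi_{\mathrm{ZED}}$ for $h = 0.1$; e) $\psi_{\mathrm{ZED}}$ for $h = 1$; f) $\psi_{\mathrm{ZED}}$ for $h = 10$.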

We may then write formulas (5.20) to (5.22) as

$$\frac{\partial R}{\partial w} = k \sum_{i} \psi(e_i)\, \frac{\partial y_i}{\partial w}.$$
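The unified gradient form can be illustrated with a minimal sketch for a single sigmoid unit. Several things here are assumptions rather than statements from the text: the error convention $e = t - y$, the closed forms used for `psi_ce` and `psi_zed` (a cross-entropy weight $(t - y)/(y(1 - y))$ and a Gaussian-kernel weight with constants dropped), the sigmoid model, and the hypothetical helper `risk_gradient` with its default `k=1.0`.

```python
import numpy as np

def psi_mse(e):
    """Linear weight: each error is weighted by its own value."""
    return e

def psi_ce(t, y):
    """Assumed cross-entropy weight (t - y) / (y (1 - y)); needs 0 < y < 1."""
    return (t - y) / (y * (1.0 - y))

def psi_zed(e, h):
    """Assumed Gaussian-kernel weight e * exp(-e^2 / (2 h^2)), constants omitted."""
    return e * np.exp(-e**2 / (2.0 * h**2))

def risk_gradient(w, X, t, psi, k=1.0):
    """Evaluate k * sum_i psi(e_i) * dy_i/dw for a sigmoid unit y = sigmoid(X @ w).

    `psi` is called as psi(e, t, y) so that every weight function fits one
    interface; `k` is left as a free constant (hypothetical default of 1).
    """
    y = 1.0 / (1.0 + np.exp(-X @ w))        # model outputs in (0, 1)
    e = t - y                               # assumed error convention
    dy_dw = (y * (1.0 - y))[:, None] * X    # derivative of each output w.r.t. w
    weights = psi(e, t, y)                  # one weight per training example
    return k * np.sum(weights[:, None] * dy_dw, axis=0)

# Toy data: three examples, a bias column plus one feature, targets in {0, 1}.
X = np.array([[1.0, 0.5], [1.0, -1.0], [1.0, 2.0]])
t = np.array([1.0, 0.0, 1.0])
w = np.zeros(2)

g_mse = risk_gradient(w, X, t, lambda e, t, y: psi_mse(e))
g_ce = risk_gradient(w, X, t, lambda e, t, y: psi_ce(t, y))
g_zed = risk_gradient(w, X, t, lambda e, t, y: psi_zed(e, h=1.0))
print(g_mse, g_ce, g_zed)
```

Under these assumptions, the factor $y(1 - y)$ in the sigmoid derivative cancels the denominator of `psi_ce`, so the cross-entropy gradient reduces to a sum of $(t_i - y_i)\,x_i$ terms.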

Here we omit the constants $1/n$ and $1/(nh^3)$ from $\psi_{\mathrm{MSE}}$ and $\psi_{\mathrm{ZED}}$, respectively. This is unimportant from the point of view of optimization, as one could always multiply $R_{\mathrm{MSE}}$ and $R_{\mathrm{ZED}}$ by $n$ and $nh^3$, respectively, without affecting their extrema. As discussed in [214], this only affects the behavior of the learning process by increasing the number of epochs necessary to converge.
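The effect of such constants can be checked on a toy problem. The sketch below is an illustration under stated assumptions, not the procedure of [214]: it runs plain gradient descent with a fixed learning rate on a one-dimensional squared-error risk, once with the $1/n$ factor and once multiplied by $n$. The data, learning rate, stopping rule, and the helper `gradient_descent` are all hypothetical choices.

```python
import numpy as np

def gradient_descent(grad, w0, lr=0.01, tol=1e-8, max_epochs=100_000):
    """Fixed-step gradient descent; stops when the update is smaller than tol."""
    w = w0
    for epoch in range(1, max_epochs + 1):
        step = lr * grad(w)
        w = w - step
        if abs(step) < tol:
            return w, epoch
    return w, max_epochs

data = np.array([1.0, 2.0, 3.0, 6.0])   # toy targets; their mean is 3
n = data.size

# Gradient of R(w) = (1/n) * sum_i (w - x_i)^2, i.e. with the 1/n constant.
grad_plain = lambda w: (2.0 / n) * np.sum(w - data)
# Gradient of n * R(w), i.e. with the constant removed.
grad_scaled = lambda w: 2.0 * np.sum(w - data)

w_plain, epochs_plain = gradient_descent(grad_plain, w0=0.0)
w_scaled, epochs_scaled = gradient_descent(grad_scaled, w0=0.0)
print(w_plain, epochs_plain)
print(w_scaled, epochs_scaled)
```

Both runs approach the same minimizer (the sample mean), while the number of epochs differs because the constant rescales every gradient step at a fixed learning rate.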

Figure 5.5 presents a comparison of the behavior of the weight functions. From Fig. 5.5a we see that $\psi_{\mathrm{MSE}}$ is linear, such that each error contributes with a weight equal to its own value. Thus, larger errors are penalized more, contributing a larger weight to the whole gradient. On the other hand, $\psi_{\mathrm{CE}}$ confers even larger weights to larger errors. As Figs. 5.5b and 5.5c show, this weight assignment follows a hyperbolic-type rule (in contrast with the linear rule of $\psi_{\mathrm{MSE}}$). Now, for $\psi_{\mathrm{ZED}}$ one may distinguish three basic behaviors:

1. If $h$ is small, as in Fig. 5.5d, $\psi_{\mathrm{ZED}}(e) \approx 0$ for a large sub-domain of the variable $e$. This may make it difficult for the learning process to converge (or even to start at all). In fact, it is common procedure to randomly initialize the classifier's parameters with values close to zero, producing errors around $e = -1$ and $e = 1$. These errors fall in the region where $\psi_{\mathrm{ZED}}(e) \approx 0$, so the gradient is nearly zero and the learning process would not converge.
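To get a feel for how small the weight at $e = \pm 1$ becomes when $h$ is small, the following sketch evaluates an assumed Gaussian-kernel form of the weight function, $\psi_{\mathrm{ZED}}(e) = e \exp(-e^2 / (2h^2))$ with constants omitted, at the three values of $h$ used in Fig. 5.5. The closed form is an assumption made for illustration.

```python
import math

def psi_zed(e, h):
    """Assumed ZED weight e * exp(-e^2 / (2 h^2)), with constants omitted."""
    return e * math.exp(-e**2 / (2.0 * h**2))

# Weight given to an error of magnitude 1 for the three bandwidths in Fig. 5.5.
for h in (0.1, 1.0, 10.0):
    print(f"h = {h:>4}: psi_zed(+1) = {psi_zed(1.0, h):.3e}, "
          f"psi_zed(-1) = {psi_zed(-1.0, h):.3e}")
```

Under this assumed form, the weight at $e = 1$ for $h = 0.1$ equals $e^{-50}$, which is far too small to move the parameters in practice.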