
1.5

1.5

f

E

(e)

f

E

(e)

e

e

0

0

−3

0

3

−3

0

3

(a)

(b)

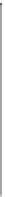

5

x

10

−3

H

1

−H

2

0

h

−5

0.25

0.3

0.35

(c)

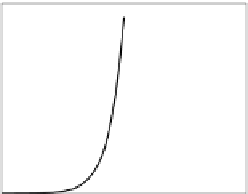

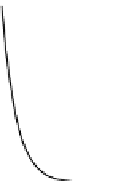

Fig. 3.29 Error PDF models (solid line) of the off-optimal (a) and optimal (b) decision border, respectively $f_1(x; 4.6)$ and $f_2(x; 2.8)$ (see text). The convoluted PDFs (dotted line) were computed with $h = 0.316$, the turn-about value changing the entropy maximum at the optimal border into a minimum. The entropy difference of the convoluted PDFs, as a function of $h$, is shown in (c) with the zero-crossing at $h = 0.316$.

The maximum-to-minimum effect is general and was observed in large two-class MLPs. It is also observed in multiclass problems for the distinct MLP outputs, justifying the empirical MEE efficacy in this scenario too [198].

Finally, we show that the change of error entropy behavior as a function of $h$ can also be understood directly from the estimation formulas. Consider, for simplicity, the empirical Rényi's expression, whose minimization as we know is equivalent to the maximization of the empirical information potential

$$V_{R_2} = \frac{1}{n^2 h} \sum_{i=1}^{n} \sum_{j=1}^{n} G\left(\frac{e_i - e_j}{h}\right).$$
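As a rough illustration of the information potential, the following minimal sketch evaluates the double sum over all error pairs. It assumes a standard Gaussian kernel for $G$, which this passage does not specify, and the function names are hypothetical. It also uses the standard relation that Rényi's quadratic entropy is the negative logarithm of the information potential, which is why minimizing one amounts to maximizing the other.

```python
import math


def gaussian_kernel(u):
    """Standard Gaussian density, assumed here as the kernel G."""
    return math.exp(-0.5 * u * u) / math.sqrt(2.0 * math.pi)


def information_potential(errors, h):
    """(1 / (n^2 h)) * sum over all pairs (i, j) of G((e_i - e_j) / h)."""
    n = len(errors)
    total = sum(
        gaussian_kernel((e_i - e_j) / h)
        for e_i in errors
        for e_j in errors
    )
    return total / (n * n * h)


def renyi_quadratic_entropy(errors, h):
    """Empirical Renyi quadratic entropy: -ln of the information potential."""
    return -math.log(information_potential(errors, h))


if __name__ == "__main__":
    h = 0.3  # illustrative smoothing parameter, not a value from the text
    tight = [0.0, 0.01, -0.01]   # errors concentrated near zero
    spread = [-1.0, 0.0, 1.0]    # errors spread out
    for name, errs in (("tight", tight), ("spread", spread)):
        v = information_potential(errs, h)
        print(name, "potential:", v, "entropy:", renyi_quadratic_entropy(errs, h))
```

With these illustrative samples, the concentrated errors give the larger potential and hence the smaller entropy. Changing `h` rescales every pairwise term, which is the dependence on $h$ that the argument goes on to examine.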

Let $G_{ij} = G\left(\frac{e_i - e_j}{h}\right)$, $c = \frac{1}{n^2 h}$, and $c_t = \frac{1}{n_t h}$ for $t \in \{-1, 1\}$, where $n_t$ is the number of instances from $\omega_t$. Then, as $G$ is symmetrical, we may write