Graphics Reference

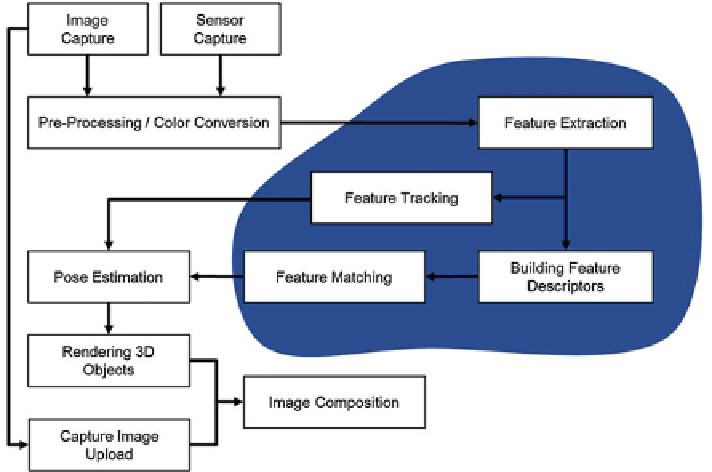

Fig. 7.14 Key hotspots highlighted in the tracking pipeline

for rendering. The captured images are stored together with other available sensor

information. These additional sensors can provide initial estimates for the camera

orientation and position. Examples are inertial sensors and GPS. The images

are then preprocessed and converted to a suitable color space. Although color is a very

useful source of information, most feature-based systems such as SIFT [13], SURF [2],

etc., use only the image intensity today, i.e., gray value images. The blocks highlighted

in the figure are computationally the most expensive steps in the algorithm.

Feature points are extracted from the entire image or in a scale space consisting of

several images of different resolution.
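
The two preprocessing ideas above, reduction to gray values and a set of images at different resolutions, can be illustrated with a minimal sketch. It is not the scale space used by SIFT or SURF (those rely on Gaussian filtering); the luma weights (the common Rec. 601 values), the 2x2 box filter, and the function names `to_gray`, `downsample`, and `build_pyramid` are assumptions chosen for illustration.

```python
# Sketch only: the luma weights and the 2x2 box filter are assumptions,
# not the method used by SIFT or SURF (which build Gaussian scale spaces).

def to_gray(rgb_image):
    """Convert rows of (r, g, b) tuples to rows of intensity values."""
    return [[0.299 * r + 0.587 * g + 0.114 * b for (r, g, b) in row]
            for row in rgb_image]


def downsample(gray):
    """Halve the resolution by averaging each 2x2 block of pixels."""
    h, w = len(gray) // 2, len(gray[0]) // 2
    return [[(gray[2 * y][2 * x] + gray[2 * y][2 * x + 1]
              + gray[2 * y + 1][2 * x] + gray[2 * y + 1][2 * x + 1]) / 4.0
             for x in range(w)]
            for y in range(h)]


def build_pyramid(gray, levels):
    """Return a list of images; each level has half the previous resolution."""
    pyramid = [gray]
    for _ in range(levels - 1):
        # Stop early once the image is too small to halve again.
        if len(pyramid[-1]) < 2 or len(pyramid[-1][0]) < 2:
            break
        pyramid.append(downsample(pyramid[-1]))
    return pyramid
```

A feature detector would then be run on every level of the returned list, so that features of different sizes in the scene can be found with a fixed-size detection window.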

Next, a descriptor is calculated that can be used to identify the features in

databases or key frames. Features are also tracked from frame to frame by

KLT-tracking or other tracking algorithms. Finally, all the recovered information

is used for pose estimation. Now that the world coordinate system is available, 3D

objects (i.e., the virtual objects) can be rendered with a standard rendering pipeline,

e.g., utilizing a GPU and OpenGL ES. The captured images are reused for image

composition, leading to the final “Augmented Reality” impression. The augmentation

is performed by rendering the virtual world superimposed on the real images.

This can be accomplished in two ways. First, typical display controllers already

offer blending of several layers during display, with the blending factor determined

by a global or per-pixel “alpha” value. Second, if this type of blending is unavailable,

OpenGL itself can be used: the captured image is uploaded as a “texture”

that is placed onto a virtual background polygon. If scene models or depth maps are

available, occlusions between the real and virtual world can also be handled with ease.
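
The composition step can be sketched in a few lines. The following is a CPU-side illustration of per-pixel alpha blending with an optional depth test for occlusion, not the display-controller or OpenGL ES path described above. The function names `blend_pixel` and `compose`, the image layout (rows of color tuples), and the convention that a smaller depth value means closer to the camera are assumptions.

```python
# Sketch only: images are lists of rows of (r, g, b) tuples; depth maps are
# lists of rows of floats, where a smaller value is assumed to be closer.

def blend_pixel(real, virtual, alpha):
    """Blend one pixel; alpha = 1 shows only the virtual layer."""
    return tuple(alpha * v + (1.0 - alpha) * r for r, v in zip(real, virtual))


def compose(real_img, virtual_img, alpha_map,
            real_depth=None, virtual_depth=None):
    """Superimpose the rendered virtual image on the captured real image."""
    out = []
    for y, row in enumerate(real_img):
        out_row = []
        for x, real in enumerate(row):
            alpha = alpha_map[y][x]
            # With depth maps available, hide virtual pixels that lie
            # behind the real scene (occlusion handling).
            if real_depth is not None and virtual_depth is not None:
                if real_depth[y][x] < virtual_depth[y][x]:
                    alpha = 0.0
            out_row.append(blend_pixel(real, virtual_img[y][x], alpha))
        out.append(out_row)
    return out
```

A global blending factor corresponds to an `alpha_map` filled with one constant value; a pixel-wise factor would typically come from the alpha channel of the rendered virtual image.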