
Following the depth refinement, the image pixels are recolored using the filtered depth information (Sect. 6.3.4). Our system currently supports two recoloring strategies for the synthesized image: using the colors from all $N$ cameras, or selecting the color from the camera with the highest confidence of accurately capturing the required pixel.
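The two recoloring strategies can be illustrated per pixel as follows. This is a minimal sketch, not the system's implementation: it assumes that every camera provides a candidate RGB color for the pixel together with a scalar confidence, and that "using all $N$ cameras" means a confidence-weighted blend. How the confidences are computed is not specified here, and the function names `recolor_blend` and `recolor_best` are hypothetical.

```python
from typing import Sequence, Tuple

RGB = Tuple[float, float, float]


def recolor_blend(colors: Sequence[RGB], confidences: Sequence[float]) -> RGB:
    """Assumed 'all N cameras' strategy: confidence-weighted average of the colors."""
    total = sum(confidences)
    if total <= 0.0:
        raise ValueError("at least one camera must have positive confidence")
    return tuple(
        sum(w * c[ch] for c, w in zip(colors, confidences)) / total
        for ch in range(3)
    )


def recolor_best(colors: Sequence[RGB], confidences: Sequence[float]) -> RGB:
    """Single-camera strategy: take the color seen by the most confident camera."""
    best = max(range(len(colors)), key=lambda i: confidences[i])
    return colors[best]
```

Blending suppresses noise from individual cameras, whereas picking a single camera avoids ghosting when the cameras disagree, for example near occlusions.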

In a final step, the depth map $Z_v$ is also analyzed to dynamically adjust the system, thereby avoiding heavy constraints on the user's movements. This optimization is performed in the plane distribution control module (movement analysis) and, as previously mentioned, is discussed in its dedicated Sect. 6.4.
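Sect. 6.4 describes the actual plane distribution control. The sketch below only illustrates the general idea under stated assumptions: the synthesis tests a set of depth planes, a depth value of 0 in $Z_v$ marks pixels without a valid depth, and the planes are re-placed between the nearest and farthest valid depths, spaced uniformly in inverse depth so that they are denser close to the cameras. The function name `distribute_planes` and the `margin` parameter are hypothetical.

```python
from typing import List, Sequence


def distribute_planes(depths: Sequence[float], num_planes: int,
                      margin: float = 0.05) -> List[float]:
    """Hypothetical re-placement of depth planes around the observed user depth."""
    if num_planes < 1:
        raise ValueError("num_planes must be at least 1")
    valid = [z for z in depths if z > 0.0]  # assumption: 0 marks invalid pixels
    if not valid:
        raise ValueError("depth map contains no valid depths")
    # Widen the observed range slightly so small movements stay covered.
    near = min(valid) * (1.0 - margin)
    far = max(valid) * (1.0 + margin)
    if num_planes == 1:
        return [0.5 * (near + far)]
    # Uniform steps in inverse depth (disparity), giving denser planes near the cameras.
    inv_near, inv_far = 1.0 / near, 1.0 / far
    step = (inv_far - inv_near) / (num_planes - 1)
    return [1.0 / (inv_near + k * step) for k in range(num_planes)]
```

Concentrating the planes on the depth range the user actually occupies lets a fixed plane budget follow the user instead of forcing the user to stay within a fixed depth range.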

Besides the main processing on the graphics hardware that synthesizes $I_v$, the virtual camera $C_v$ needs to be positioned correctly to restore eye contact between the participants. To this end, an eye-tracking module (Sect. 6.3.5) runs concurrently on the CPU and determines the user's eye position, which is used to place the virtual camera correctly at the other peer.

By sending the eye coordinates to the other peer, the input images $I'_1, \ldots, I'_N$ do not have to be sent over the network (Sect. 6.3.6) but can be processed at the local peer. This minimizes the amount of data that must be communicated between the two participants: only the eye coordinates and the interpolated image.
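The eye-coordinate part of this exchange is tiny compared with an image. As a minimal sketch, assuming the eye position is a 3D point sent as three little-endian 32-bit floats (a hypothetical wire format; the text does not specify one), the per-frame payload can be packed and unpacked with Python's standard `struct` module. The helper names are hypothetical.

```python
import struct
from typing import Tuple

# Hypothetical wire format: eye position (x, y, z) as three little-endian float32 values.
EYE_FORMAT = "<3f"


def pack_eye_position(eye: Tuple[float, float, float]) -> bytes:
    """Serialize the tracked eye position for transmission to the other peer."""
    return struct.pack(EYE_FORMAT, *eye)


def unpack_eye_position(payload: bytes) -> Tuple[float, float, float]:
    """Recover the eye position on the receiving peer (used to place C_v)."""
    x, y, z = struct.unpack(EYE_FORMAT, payload)
    return (x, y, z)
```

Under this format the eye coordinates cost 12 bytes per frame, so the bandwidth is dominated by the single interpolated image.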

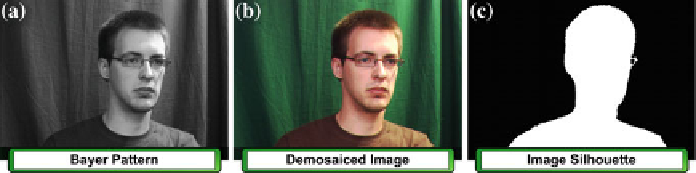

6.3.1 Preprocessing

Our system inputs Bayer patterns $I'_1, \ldots, I'_N$, i.e., the direct camera sensor inputs (see Fig. 6.6a). The RGB-colored images $I_1, \ldots, I_N$ are consistently computed from them using the method of [19], which is based on linear FIR filtering, as depicted in Fig. 6.6b. Uncontrolled processing that would normally be integrated into the camera electronics is thereby avoided, guaranteeing the system's optimal performance.
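The method of [19] is not reproduced here. As a plainly swapped-in stand-in, the sketch below uses bilinear demosaicing, which is also a linear FIR filter but generally of lower quality. It assumes an RGGB Bayer layout (the cameras' actual layout is not stated): each pixel keeps its own sampled channel, and each missing channel is the average of the same-colored samples in its 3x3 neighborhood. The function names are hypothetical.

```python
from typing import List


def rggb_channel(y: int, x: int) -> int:
    """Channel (0=R, 1=G, 2=B) sampled at (y, x), assuming an RGGB mosaic."""
    if y % 2 == 0 and x % 2 == 0:
        return 0
    if y % 2 == 1 and x % 2 == 1:
        return 2
    return 1


def demosaic_bilinear(bayer: List[List[float]]) -> List[List[List[float]]]:
    """Bilinear demosaicing of an RGGB mosaic into an H x W x 3 RGB image."""
    h, w = len(bayer), len(bayer[0])
    if h < 2 or w < 2:
        raise ValueError("mosaic must be at least 2x2")
    rgb = [[[0.0, 0.0, 0.0] for _ in range(w)] for _ in range(h)]
    for y in range(h):
        for x in range(w):
            own = rggb_channel(y, x)
            for ch in range(3):
                if ch == own:
                    # The sensor measured this channel directly: keep it.
                    rgb[y][x][ch] = float(bayer[y][x])
                    continue
                # Average the same-colored samples in the 3x3 neighborhood,
                # using only in-bounds neighbors at the image border.
                total, count = 0.0, 0
                for dy in (-1, 0, 1):
                    for dx in (-1, 0, 1):
                        yy, xx = y + dy, x + dx
                        if 0 <= yy < h and 0 <= xx < w and rggb_channel(yy, xx) == ch:
                            total += bayer[yy][xx]
                            count += 1
                rgb[y][x][ch] = total / count
    return rgb
```

In the image interior this reduces to the classic bilinear kernels (four cross neighbors for green at red/blue sites, four diagonals for blue at red sites, and two neighbors for red/blue at green sites), which map directly onto per-pixel GPU work.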

Camera lenses, particularly in the low-budget range, induce radial distortion that should be corrected. Our system relies on the Brown-Conrady distortion model [3] to undistort the input images straightforwardly on the GPU.
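Undistortion with the Brown-Conrady model is usually implemented as an inverse lookup: for every pixel of the undistorted output image, the model gives the position in the distorted input image to sample from. Each pixel is independent, which suits a GPU fragment shader. The sketch below uses the widely used parameterization with radial coefficients $k_1, k_2, k_3$ and tangential coefficients $p_1, p_2$ (the convention OpenCV also uses); the intrinsics and coefficient values in any call are assumptions, and the function names are hypothetical.

```python
from typing import Tuple


def distort_normalized(x: float, y: float, k1: float, k2: float, k3: float,
                       p1: float, p2: float) -> Tuple[float, float]:
    """Brown-Conrady: map an undistorted normalized point to its distorted position."""
    r2 = x * x + y * y
    radial = 1.0 + k1 * r2 + k2 * r2 * r2 + k3 * r2 * r2 * r2
    xd = x * radial + 2.0 * p1 * x * y + p2 * (r2 + 2.0 * x * x)
    yd = y * radial + p1 * (r2 + 2.0 * y * y) + 2.0 * p2 * x * y
    return xd, yd


def undistort_lookup(u: float, v: float, fx: float, fy: float, cx: float, cy: float,
                     coeffs: Tuple[float, float, float, float, float]) -> Tuple[float, float]:
    """For output pixel (u, v), return the input-image pixel to sample (k1, k2, k3, p1, p2)."""
    # Pixel -> normalized camera coordinates using the pinhole intrinsics.
    x = (u - cx) / fx
    y = (v - cy) / fy
    xd, yd = distort_normalized(x, y, *coeffs)
    # Normalized -> pixel coordinates in the distorted input image.
    return fx * xd + cx, fy * yd + cy
```

On the GPU, the returned coordinate would be used to sample the input texture with bilinear filtering; the mapping could also be precomputed once per camera into a lookup texture, since it does not change between frames.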

Each input image $I_i$ with $i \in \{1, \ldots, N\}$ is then segmented into a binary foreground silhouette $S_i$ (see Fig. 6.6c), so that the subsequent view interpolation benefits in both the speed and the quality of the synthesis process. Two methods of

Fig. 6.6 The preprocessing module performs demosaicing, undistortion, and segmentation of the input images