Database Reference

In-Depth Information

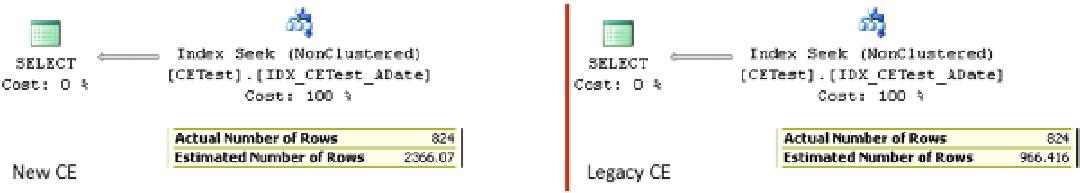

Figure 3-24.

Cardinality estimations with multiple predicates

The legacy cardinality estimator assumes the independence of predicates and uses the following formula:

(Selectivity of first predicate * Selectivity of second predicate) * (Total number of rows in table) =

(Estimated number of rows for first predicate * Estimated number of rows for second predicate) /

(Total number of rows in the table).

The new cardinality estimator expects some correlation between predicates, and it uses another approach called

an

exponential backoff algorithm

, which is as follows:

(Selectivity of most selective predicate) * SQRT(Selectivity of next most selective predicate) *

(Total number of rows in table).

This change fits entirely into “It depends” category. The legacy cardinality estimator works better when there is

no correlation between attributes/predicates, as shown in our example. The new cardinality estimator provides better

results in cases of correlation. We will look at such an example in the “Filtered Statistics” section of the next chapter.

Summary

Correct cardinality estimation is one of the most important factors that allows the Query Optimizer to generate

efficient execution plans. Cardinality estimation affects the choice of indexes, join strategies, and other parameters.

SQL Server uses statistics to perform cardinality estimations. The vital part of statistics is the histogram, which

stores information about data distribution in the leftmost statistics column. Every step in the histogram contains a

sample statistics column value and information about what happens in the interval defined by the step, such as how

many rows are in the interval, how many unique key values are there, and so on.

SQL Server creates statistics for every index defined in the system. In addition, you can create column-level

statistics on individual or multiple columns in the table. This can help SQL Server generate more efficient execution

plans. In some cases, SQL Server creates column-level statistics automatically—if the database has the

Auto Create

Statistics

database option enabled.

Statistics has a few limitations. There are at most 200 steps (key value intervals) stored in the histogram. As a

result, the histogram's steps cover larger key value intervals as the table grows. This leads to larger approximations

within the interval and less accurate cardinality estimations on tables with millions or billions of rows.

Moreover, the histogram stores the information about data distribution for the leftmost statistics column only.

There is no information about other columns in the statistics, aside from multi-column density.

SQL Server tracks the number of changes in statistics columns in the table. By default, SQL Server outdates and

updates statistics after that number exceeds about 20 percent of the total number of the rows in the table. As a result,

statistics are rarely updated automatically on large tables. You need to consider updating statistics on large tables

manually based on some schedule. You should also update statistics on ever-increasing or ever-decreasing indexes

more often because SQL Server tends to underestimate the number of rows when the parameters are outside of the

histogram, unless you are using the new cardinality estimation model introduced in SQL Server 2014.

The new cardinality estimation model is enabled in SQL Server 2014 for databases with a compatibility level of 120.

This model addresses a few common issues, such as estimations for ever-increasing indexes when statistics is not

up-to-date; however, it may introduce plan regressions in some cases. You should carefully test existing systems

before enabling the new cardinality estimation model after upgrading SQL Server.