Image Processing Reference


We know the map's localization with respect to the universe frame, $T_{R_{\mathrm{map}}}^{R_u}$, as well as the localization of the walls with respect to the map, $T_{R_{\mathrm{wall}_i}}^{R_{\mathrm{map}}}$. The sensors give us the localization of the walls with respect to the sensors, $T_{R_{\mathrm{wall}_i}}^{R_{\mathrm{sensor}_j}}$, and the calibration provides us with the localization of the sensors with respect to the frame associated with the robot, $T_{R_{\mathrm{sensor}_j}}^{R(k)}$. We can then infer that:

$$T_{R(k)}^{R_u} = T_{R_{\mathrm{map}}}^{R_u} \; T_{R_{\mathrm{wall}_i}}^{R_{\mathrm{map}}} \; \left(T_{R_{\mathrm{wall}_i}}^{R_{\mathrm{sensor}_j}}\right)^{-1} \left(T_{R_{\mathrm{sensor}_j}}^{R(k)}\right)^{-1}$$
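The chain of transformations can be checked numerically. The following is a minimal Python sketch, assuming a planar (2D) world in which every transformation is a pose `(x, y, theta)` giving the position and heading of one frame expressed in another. The planar simplification, the function names `compose`, `inverse` and `robot_pose_in_universe`, and all numerical values are illustrative assumptions and do not come from the text.

```python
import math

def compose(a_T_b, b_T_c):
    """Chain two planar poses: pose of B in A, then pose of C in B, gives pose of C in A."""
    x1, y1, th1 = a_T_b
    x2, y2, th2 = b_T_c
    c, s = math.cos(th1), math.sin(th1)
    th = th1 + th2
    return (x1 + c * x2 - s * y2,
            y1 + s * x2 + c * y2,
            math.atan2(math.sin(th), math.cos(th)))  # wrap the angle to [-pi, pi]

def inverse(a_T_b):
    """Invert a planar pose: pose of B in A becomes pose of A in B."""
    x, y, th = a_T_b
    c, s = math.cos(th), math.sin(th)
    return (-(c * x + s * y), s * x - c * y, -th)

def robot_pose_in_universe(map_in_u, wall_in_map, wall_in_sensor, sensor_in_robot):
    """Robot pose in the universe frame, following the chain
    map->universe, wall->map, (wall->sensor)^-1, (sensor->robot)^-1."""
    wall_in_u = compose(map_in_u, wall_in_map)
    sensor_in_u = compose(wall_in_u, inverse(wall_in_sensor))
    return compose(sensor_in_u, inverse(sensor_in_robot))

# Usage: simulate a measurement from a known robot pose, then recover that pose.
true_robot_in_u = (2.0, 1.0, 0.5)   # hypothetical ground truth
map_in_u = (1.0, -1.0, 0.2)         # hypothetical map localization
wall_in_map = (3.0, 4.0, 1.0)       # hypothetical wall localization in the map
sensor_in_robot = (0.1, 0.0, 0.3)   # hypothetical calibration result

sensor_in_u = compose(true_robot_in_u, sensor_in_robot)
wall_in_sensor = compose(inverse(sensor_in_u), compose(map_in_u, wall_in_map))

estimate = robot_pose_in_universe(map_in_u, wall_in_map, wall_in_sensor, sensor_in_robot)
print(estimate)
```

In this noise-free simulation the estimate should coincide with the pose used to generate the measurement, up to floating-point error. With real sensors, each (wall, sensor) pair would give a slightly different estimate.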

·

·

·

map

R

wall 2

R

wall

1

R

sensor2

R

sensor1

R(k)

Ru

R

map

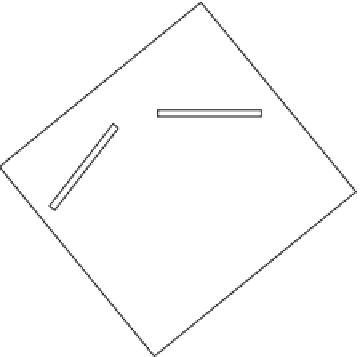

Figure 4.1.

Localization of a moving robot in its environment which is described in a map

This simple example underlines the typical problems that have to be solved in robotics:

- the determination of the transformations $T_{R_{\mathrm{sensor}_j}}^{R(k)}$ by calibration;

- the evaluation of the transformations $T_{R_{\mathrm{wall}_i}}^{R_{\mathrm{sensor}_j}}$ based on sensor information, which requires signal and image processing;

- matching the “walls” that are seen with those that are known and on the map;

- the fusion of information from different sensors that see the various walls.
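To make the last item concrete, here is a deliberately naive Python sketch of one way to merge several estimates of the robot pose, each obtained from a different (wall, sensor) pair. It assumes planar poses `(x, y, theta)` and uses a weighted average of the positions together with a circular mean of the headings. This is an illustrative assumption and not the fusion method developed in the text; the function name `fuse_poses` and the weights are hypothetical.

```python
import math

def fuse_poses(estimates, weights=None):
    """Naively fuse planar pose estimates (x, y, theta).

    Positions are combined by a weighted arithmetic mean. Headings are
    combined by a weighted circular mean, which avoids the wrap-around
    problem of averaging angles close to +pi and -pi directly.
    """
    if not estimates:
        raise ValueError("at least one estimate is required")
    if weights is None:
        weights = [1.0] * len(estimates)  # equal confidence by default
    if len(weights) != len(estimates):
        raise ValueError("weights and estimates must have the same length")
    total = sum(weights)
    if total <= 0:
        raise ValueError("weights must sum to a positive value")

    x = sum(w * e[0] for w, e in zip(weights, estimates)) / total
    y = sum(w * e[1] for w, e in zip(weights, estimates)) / total
    # Circular mean: average the unit vectors, then take their direction.
    sin_sum = sum(w * math.sin(e[2]) for w, e in zip(weights, estimates))
    cos_sum = sum(w * math.cos(e[2]) for w, e in zip(weights, estimates))
    theta = math.atan2(sin_sum, cos_sum)
    return (x, y, theta)

# Usage: two hypothetical estimates of the same robot pose.
fused = fuse_poses([(1.0, 2.0, 0.1), (3.0, 4.0, 0.3)])
print(fused)
```

The weights could, for instance, reflect how much each sensor is trusted. A plain average ignores conflicts between sources and the imprecision of each measurement, which are the issues that a genuine fusion method has to handle.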

4.3.2. Types and levels of representation of the environment

In order to define a strategy for action or perception, it is often necessary to rely on an interpretation of the situation based on symbolic data with a high semantic level. For example, we can be faced with situations such as:

- the effector is close to the pin;
