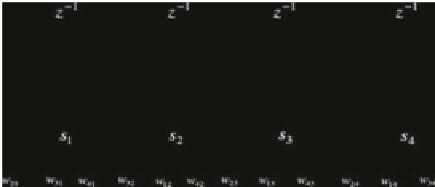

Fig. 10.1 A connectionist associative memory

memory. Moreover, it will be shown that the considered associative memory with stochastic weights exhibits an exponential increase in the number of attractors compared to conventional associative memories [83, 91, 156].

Suppose that a set of $p$ $N$-dimensional vectors (fundamental memories) are to be stored in a Hopfield associative memory of $N$ neurons, as the one depicted in Fig. 10.1. The output of neuron $j$ is given by $s_j = \mathrm{sgn}(v_j)$, $j = 1, 2, \ldots, N$, and the input $v_j$ is given by $v_j = \sum_{i=1}^{N} w_{ji} s_i - \theta_j$, $j = 1, 2, \ldots, N$, where $s_i$ are the outputs of the neurons connected to the $j$-th neuron, $\theta_j$ is the threshold, and $w_{ji}$'s are the associated weights [78, 82].
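
The neuron update described above can be sketched in a few lines of plain Python. This is a minimal illustration, not the author's implementation: it assumes bipolar states in {-1, +1}, assumes the threshold is subtracted from the weighted sum, and picks sgn(0) = +1 as a convention. The names `sgn`, `neuron_input`, `update_neuron`, and the toy 3-neuron weights are hypothetical.

```python
def sgn(v):
    """Sign function. Returning +1 at v == 0 is a convention assumed here."""
    return 1 if v >= 0 else -1


def neuron_input(weights, states, thresholds, j):
    """Input v_j: weighted sum of all neuron outputs minus the threshold of neuron j."""
    total = sum(weights[j][i] * states[i] for i in range(len(states)))
    return total - thresholds[j]


def update_neuron(weights, states, thresholds, j):
    """Output s_j = sgn(v_j) of neuron j for the current network state."""
    return sgn(neuron_input(weights, states, thresholds, j))


# Toy 3-neuron network (illustrative values): symmetric weights, zero diagonal.
W = [[0.0, 1.0, -1.0],
     [1.0, 0.0, -1.0],
     [-1.0, -1.0, 0.0]]
theta = [0.0, 0.0, 0.0]
s = [1, 1, -1]

# Evaluate every neuron against the same current state (a synchronous sweep).
new_s = [update_neuron(W, s, theta, j) for j in range(len(s))]
print(new_s)
```

In this toy case the state `[1, 1, -1]` maps to itself, so it behaves as an attractor of the network under the assumed conventions.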

The weights of the associative memory are selected using Hebb's postulate of learning, i.e. $w_{ji} = \frac{1}{N} \sum_{k=1}^{p} x_k^j x_k^i$ for $j \neq i$ and $w_{ji} = 0$ for $j = i$, where $i, j = 1, 2, \ldots, N$. The above can be summarized in the network's correlation weight matrix which is given by

$$W = \frac{1}{N} \sum_{k=1}^{p} x_k x_k^T \tag{10.1}$$
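
As a rough sketch of how the Hebbian weights could be computed, the snippet below builds the weight matrix element by element from a list of bipolar patterns. It assumes the element-wise form with a 1/N scaling and a zeroed diagonal; the function names `hebbian_weights` and `is_fixed_point` and the two 4-dimensional example patterns are hypothetical, and sgn(0) = +1 is an assumed convention.

```python
def hebbian_weights(patterns):
    """Correlation weights: w_ji = (1/N) * sum_k x_k[j] * x_k[i], with w_jj = 0."""
    n = len(patterns[0])
    W = [[0.0] * n for _ in range(n)]
    for x in patterns:
        for j in range(n):
            for i in range(n):
                if i != j:  # keep the diagonal at zero
                    W[j][i] += x[j] * x[i] / n
    return W


def is_fixed_point(W, x):
    """True if x reproduces itself under s_j = sgn(sum_i w_ji x_i), zero thresholds."""
    n = len(x)
    for j in range(n):
        v = sum(W[j][i] * x[i] for i in range(n))
        if (1 if v >= 0 else -1) != x[j]:
            return False
    return True


# Two illustrative fundamental memories with components in {-1, +1}.
patterns = [[1, -1, 1, -1],
            [1, 1, -1, -1]]
W = hebbian_weights(patterns)

for row in W:
    print(row)
print([is_fixed_point(W, x) for x in patterns])
```

The resulting matrix is symmetric, and both stored patterns are recovered unchanged, which is the behaviour one would expect when the number of stored memories is small relative to N.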

From Eq. (10.1) it can be easily seen that in the case of binary fundamental memories the weights learning is given by Hebb's rule

$$w_{ji}(n+1) = w_{ji}(n) + \mathrm{sgn}\left(x_{k+1}^j x_{k+1}^i\right) \tag{10.2}$$
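
The incremental rule can be illustrated with a short sketch that presents the fundamental memories one at a time. It assumes bipolar components in {-1, +1} (so the sign of a product is the product itself), starts from zero weights, and leaves the diagonal at zero, consistent with $w_{ji} = 0$ for $j = i$. The names `hebb_step` and `sgn` and the example patterns are hypothetical.

```python
def sgn(v):
    """Sign function. The value at v == 0 is a convention; it never occurs for +/-1 inputs."""
    return 1 if v >= 0 else -1


def hebb_step(W, x):
    """One learning step: w_ji <- w_ji + sgn(x[j] * x[i]) for j != i, in place."""
    n = len(x)
    for j in range(n):
        for i in range(n):
            if i != j:  # assumed: self-connections stay at zero
                W[j][i] += sgn(x[j] * x[i])
    return W


# Illustrative fundamental memories, presented sequentially.
patterns = [[1, -1, 1, -1],
            [1, 1, -1, -1]]
n = len(patterns[0])
W = [[0] * n for _ in range(n)]
for x in patterns:
    hebb_step(W, x)

for row in W:
    print(row)
```

Because the update carries no 1/N factor, the weights accumulated this way are N times the correlation weights; the scaling does not change the sign of the neuron inputs when the thresholds are zero.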