(1950, 0)

(1950, 22)

(1950, −11)

(1949, 111)

(1949, 78)

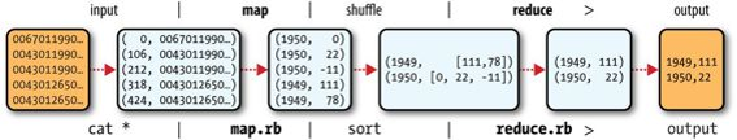

The output from the map function is processed by the MapReduce framework before being sent to the reduce function. This processing sorts and groups the key-value pairs by key. So, continuing the example, our reduce function sees the following input:

(1949, [111, 78])

(1950, [0, 22, −11])

Each year appears with a list of all its air temperature readings. All the reduce function has to do now is iterate through the list and pick up the maximum reading:

(1949, 111)

(1950, 22)

This is the final output: the maximum global temperature recorded in each year.
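The grouping and reduce steps described above can be imitated in a few lines of plain Java. This is only a single-process sketch and does not use the Hadoop API: the class name `MaxTemperatureSketch` and the helper methods `groupByKey` and `maxReading` are hypothetical names chosen for illustration, and a `TreeMap` stands in for the framework's sort-and-group processing.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.SortedMap;
import java.util.TreeMap;

// Illustrative only: a single-process imitation of the logical data flow,
// not how the MapReduce framework is implemented.
public class MaxTemperatureSketch {

    // Stand-in for the framework's sort-and-group step: collects the
    // temperatures for each year, with years kept in sorted order.
    static SortedMap<Integer, List<Integer>> groupByKey(int[][] pairs) {
        SortedMap<Integer, List<Integer>> grouped = new TreeMap<>();
        for (int[] pair : pairs) {
            grouped.computeIfAbsent(pair[0], year -> new ArrayList<>()).add(pair[1]);
        }
        return grouped;
    }

    // Stand-in for the reduce function: iterates through the readings
    // for one year and keeps the largest.
    static int maxReading(Iterable<Integer> readings) {
        int max = Integer.MIN_VALUE;
        for (int reading : readings) {
            max = Math.max(max, reading);
        }
        return max;
    }

    public static void main(String[] args) {
        // The (year, temperature) pairs emitted by the map function in the example.
        int[][] mapOutput = {
            {1950, 0}, {1950, 22}, {1950, -11}, {1949, 111}, {1949, 78}
        };
        groupByKey(mapOutput).forEach((year, temps) ->
            System.out.println("(" + year + ", " + maxReading(temps) + ")"));
    }
}
```

Running `main` prints `(1949, 111)` followed by `(1950, 22)`, matching the final output shown above. In a real job the grouping is performed by the framework across many machines, so only the logic inside `maxReading` corresponds to code you would write yourself.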

The whole data flow is illustrated in Figure 2-1. At the bottom of the diagram is a Unix pipeline, which mimics the whole MapReduce flow and which we will see again later in this chapter when we look at Hadoop Streaming.

Figure 2-1. MapReduce logical data flow

Java MapReduce

Having run through how the MapReduce program works, the next step is to express it in code. We need three things: a map function, a reduce function, and some code to run the job. The map function is represented by the Mapper class, which declares an abstract map() method. Example 2-3 shows the implementation of our map function.

Example 2-3. Mapper for the maximum temperature example

import java.io.IOException;
import org.apache.hadoop.io.IntWritable;