Information Technology Reference

In-Depth Information

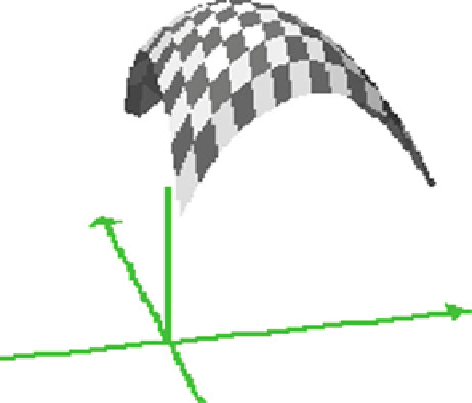

Fig. 2.3 Range of information for three symbols (axes: x, y, z)

The only known function that satisfies these requirements is one based upon the expected (similar to average) logarithm of the inverse probability ($p_i$) of each symbol ($i$), thus:

$$\text{Information of an event} = -\sum_i p_i \log_2 p_i$$
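The measure can be sketched in a few lines of Python. This is a minimal illustration, assuming the probabilities are given as a list that sums to 1; the function name `entropy_bits` is hypothetical. It computes the expected logarithm of the inverse probability directly, $\sum_i p_i \log_2(1/p_i)$, which equals $-\sum_i p_i \log_2 p_i$, and it skips zero-probability symbols, following the usual convention that $p \log_2 p \to 0$ as $p \to 0$.

```python
import math

def entropy_bits(probs):
    """Hypothetical helper: information (entropy) in bits of a discrete distribution.

    Computes sum(p * log2(1/p)), i.e. -sum(p * log2(p)).
    Assumes probs are non-negative and sum to 1; zero-probability
    symbols contribute nothing.
    """
    if any(p < 0 for p in probs):
        raise ValueError("probabilities must be non-negative")
    if not math.isclose(sum(probs), 1.0, abs_tol=1e-9):
        raise ValueError("probabilities must sum to 1")
    # Expected value of log2 of the inverse probability.
    return sum(p * math.log2(1 / p) for p in probs if p > 0)

print(entropy_bits([0.5, 0.5]))       # 1.0
print(entropy_bits([0.25] * 4))       # 2.0
print(entropy_bits([1.0, 0.0, 0.0]))  # 0.0
```

Writing the sum with $1/p$ mirrors the wording "logarithm of the inverse probability" and keeps every term non-negative.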

We can now define the unit of information, called the 'bit', as the information obtained when there are two equally likely choices:

$$1 = -\left(0.5\log_2 0.5 + 0.5\log_2 0.5\right)$$
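Evaluating the expression step by step shows why it comes to exactly one bit: since $\log_2 0.5 = -1$, each of the two terms contributes $-0.5$.

```latex
\begin{aligned}
-\left(0.5\log_2 0.5 + 0.5\log_2 0.5\right)
  &= -\left(0.5 \times (-1) + 0.5 \times (-1)\right) \\
  &= -(-0.5 - 0.5) \\
  &= 1 \text{ bit}
\end{aligned}
```

More generally, $n$ equally likely choices give $-\sum_{i=1}^{n} \frac{1}{n}\log_2\frac{1}{n} = \log_2 n$ bits.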

This information measure of a system is called entropy, and its behaviour for three choices is illustrated in Fig. 2.3. In this graph, z represents the information value (in bits) as the probabilities of two (x, y) of the three symbols (w, x, y) are varied.

The probability of the third symbol, w, is determined by the other two probabilities because:

$$w + x + y = 1$$

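The information measure (entropy) falls to zero when one symbol is certain (probability 1, so the others are 0), and rises to a maximum when the probabilities are equal. The maximum in the zx or zy plane (where x or y is zero, leaving only two choices) is 1 bit, which is less than the maximum over the whole zxy surface, $\log_2 3 \approx 1.585$ bits, reached when $x = y = w = 1/3$. The equation for this surface is: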

$$z = -\left[x\log_2 x + y\log_2 y + \bigl(1-(x+y)\bigr)\log_2\bigl(1-(x+y)\bigr)\right]$$
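The claims about where the surface peaks can be checked numerically. The following is a brute-force grid search, not an analytic proof, and the names `plog2p`, `z`, `grid_max`, the grid size `steps=300` and the `plane_zx` flag are all hypothetical. It evaluates the surface equation at grid points with $x + y \le 1$, treating $0 \log_2 0$ as 0.

```python
import math

def plog2p(p):
    """Return p * log2(p), using the limiting value 0 when p == 0."""
    return p * math.log2(p) if p > 0 else 0.0

def z(x, y):
    """Entropy (bits) of three symbols with probabilities x, y and w = 1 - (x + y)."""
    w = 1.0 - (x + y)
    if x < 0 or y < 0 or w < -1e-12:
        raise ValueError("need x >= 0, y >= 0 and x + y <= 1")
    w = max(w, 0.0)  # clamp tiny negative floating-point rounding error
    return -(plog2p(x) + plog2p(y) + plog2p(w))

def grid_max(steps=300, plane_zx=False):
    """Brute-force maximum of z over grid points (i/steps, j/steps) with i + j <= steps.

    If plane_zx is True, y is held at 0 (the zx plane, i.e. only two symbols).
    Returns a tuple (x, y, z).
    """
    best = None
    for i in range(steps + 1):
        js = [0] if plane_zx else range(steps + 1 - i)
        for j in js:
            x, y = i / steps, j / steps
            val = z(x, y)
            if best is None or val > best[2]:
                best = (x, y, val)
    return best

print(grid_max())               # near x = y = 1/3, z close to log2(3), about 1.585 bits
print(grid_max(plane_zx=True))  # x = 0.5, y = 0.0, z = 1.0 bit
```

A grid of 300 steps is chosen because it is divisible by both 2 and 3, so the exact maxima at $x = 1/2$ and $x = y = 1/3$ fall on grid points.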

• So we can say that the greater the entropy, the larger the uncertainty.