
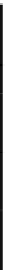

Table 1. SPA dataset basic statistics

| News class | No. of texts | Unique words | Unique 2-grams | Unique 3-grams | Unique 4-grams |
|---|---|---|---|---|---|
| Cultural | 258 | 12,521 | 33,499 | 40,311 | 42,137 |
| Economic | 250 | 9,158 | 26,305 | 32,363 | 34,184 |
| General | 255 | 12,639 | 32,336 | 38,384 | 39,976 |
| Political | 250 | 9,720 | 26,667 | 32,136 | 33,278 |
| Social | 258 | 11,818 | 33,914 | 42,279 | 44,999 |
| Sports | 255 | 8,641 | 24,425 | 31,339 | 33,885 |
| Total | 1,526 | 36,497 | 154,240 | 208,390 | 224,903 |

2.2 Experimental Setup

As mentioned in Section 1, creating the classification model involves several consecutive steps. For data preprocessing, we removed stop words, which consist primarily of Arabic function words such as prepositions and pronouns [5]. In addition, we removed numbers, Latin characters, and diacritics, and we normalized the different forms of Hamza (إ ،أ) to (ا) and Taa Marbutah (ة) to (ﻩ). We randomly chose 70% of the data for training and the remaining 30% for testing.
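The preprocessing and splitting steps above can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: the stop list is a two-word placeholder, the diacritic range (U+064B to U+0652) and the order of operations are guesses, Taa Marbutah is mapped to the standard Haa code point (U+0647), and the split is a plain random shuffle because the text does not say whether it was stratified by class. The names `normalize`, `preprocess`, and `split_train_test` are hypothetical.

```python
import random
import re

# Assumed diacritic range: fathatan (U+064B) through sukun (U+0652).
DIACRITICS = re.compile("[\u064B-\u0652]")
# ASCII digits, Arabic-Indic digits, and basic Latin letters.
DIGITS_AND_LATIN = re.compile("[0-9\u0660-\u0669A-Za-z]")
# Hamza-carrying Alef forms: U+0623 (Alef with Hamza above), U+0625 (below).
HAMZA_FORMS = re.compile("[\u0623\u0625]")

# Placeholder stop list ("fi" = in, "min" = from); the real list is not given.
STOP_WORDS = {"\u0641\u064A", "\u0645\u0646"}


def normalize(text):
    """Strip diacritics, digits, and Latin letters; unify Hamza and Taa Marbutah."""
    text = DIACRITICS.sub("", text)
    text = DIGITS_AND_LATIN.sub(" ", text)
    text = HAMZA_FORMS.sub("\u0627", text)      # Hamza forms -> bare Alef
    return text.replace("\u0629", "\u0647")     # Taa Marbutah -> Haa


def preprocess(text):
    """Normalize, split on whitespace, and drop stop words."""
    return [tok for tok in normalize(text).split() if tok not in STOP_WORDS]


def split_train_test(docs, train_fraction=0.7, seed=0):
    """Randomly split docs into training and testing portions."""
    shuffled = list(docs)
    random.Random(seed).shuffle(shuffled)
    cut = int(round(len(shuffled) * train_fraction))
    return shuffled[:cut], shuffled[cut:]
```

Fixing the seed keeps the split reproducible across runs. If per-class balance matters, the same function could be applied to each class separately.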

We selected single words, 2-grams, 3-grams, and 4-grams as features and chose four of the most widely used FS methods: DF, chi-squared (CHI), information gain (IG), and Galavotti, Sebastiani, Simi (GSS). The objective of FS is to rank the features by their importance to a given class, so that the features that best distinguish that class from the other classes in the training dataset receive the highest ranks. The highest-ranked features are then selected. This selection yields a reduced feature space, which benefits both CA and speed. The mathematical representations of DF, CHI, IG, and GSS are shown in Equations (1), (2), (3), and (4), respectively.
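As a rough illustration of the feature extraction and ranking described in this paragraph, the sketch below builds word-level n-grams, counts for each class how many documents contain each n-gram, and keeps the highest-ranked terms. DF is used as the ranking score only because it is the simplest of the four. The function names, the space-joined n-gram representation, and the alphabetical tie-breaking are assumptions made for this example.

```python
from collections import Counter, defaultdict


def word_ngrams(tokens, n):
    """Return the word-level n-grams of a token list as space-joined strings."""
    return [" ".join(tokens[i:i + n]) for i in range(len(tokens) - n + 1)]


def document_frequency(docs, n):
    """Count, per class, the number of documents containing each n-gram.

    docs is an iterable of (tokens, label) pairs.
    """
    df = defaultdict(Counter)
    for tokens, label in docs:
        # set() so a term counts once per document, however often it repeats
        df[label].update(set(word_ngrams(tokens, n)))
    return df


def top_k(scores, k):
    """Keep the k highest-scoring terms (ties broken alphabetically)."""
    ranked = sorted(scores.items(), key=lambda item: (-item[1], item[0]))
    return [term for term, _ in ranked[:k]]
```

Because `top_k` accepts any mapping from term to score, the same selection step would apply unchanged to CHI, IG, or GSS scores.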

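$$\mathrm{DF}(t, c_i) = D(t, c_i) \tag{1}$$

$$\mathrm{CHI}(t, c_i) = \frac{|Tr| \cdot \left[ P(t, c_i) \cdot P(\bar{t}, \bar{c}_i) - P(t, \bar{c}_i) \cdot P(\bar{t}, c_i) \right]^2}{P(t) \cdot P(\bar{t}) \cdot P(c_i) \cdot P(\bar{c}_i)} \tag{2}$$

$$\mathrm{IG}(t, c_i) = \sum_{c \in \{c_i, \bar{c}_i\}} P(t, c) \cdot \log \frac{P(t, c)}{P(t) \cdot P(c)} + \sum_{c \in \{c_i, \bar{c}_i\}} P(\bar{t}, c) \cdot \log \frac{P(\bar{t}, c)}{P(\bar{t}) \cdot P(c)} \tag{3}$$

$$\mathrm{GSS}(t, c_i) = P(t, c_i) \cdot P(\bar{t}, \bar{c}_i) - P(t, \bar{c}_i) \cdot P(\bar{t}, c_i) \tag{4}$$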

where:

$m$ is the number of classes,

$Tr$ is the total number of texts in the training dataset,

$c$ denotes a class,

$t$ is a term, which can be a word-level 1-, 2-, 3-, or 4-gram,

$D(t, c_i)$ is the number of documents in class $c_i$ that contain term $t$ at least once,
