Information Technology Reference

In-Depth Information

at least once in the executions. With respect to the largest TSP instance, we note

that when the parameters take the values

p

=16and

M

=

{

16

,

32

,

48

}

those

are the settings that are more approximate to the optimal values. Probably, the

reason why this happens is that these small configurations allow the algorithmic

model iterate a greater number of times, thus generating subgroups that can

perform further exploitation. Table 3 shows clearly the difference between the

execution times of CPU and GPU implementations. GPU-DFA exhibits better

times for all instances.

25

20

25

23.09

18.41

m=16

m=32

m=48

21.85

20.36

20

20

15

17.82

17.59

15.24

14.51

15

15

10.85

13.41

12.86

9.58

12.12

10

8.85

11.13

10.82

10

10

5.91

5.05

5.42

5.43

5

4.35

4.27

4.34

3.44

5

5

3.82

3.65

2.63

0

0

0

p=16

p=32

p=48

p=16

p=32

p=48

p=16

p=32

p=48

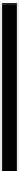

Fig. 7.

Gain time results

for

d

657

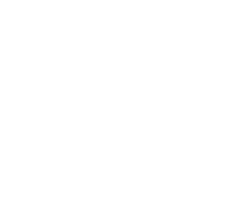

Fig. 8.

Gain time results

for

pr

1002

Fig. 6.

Gain time results

for

kroA

100

Regarding the gain of the times obtained in the TSP instances, Fig. 6, 7 and 8

show the respective values for each of those instances. We can observe the same

behaviour as the one in the DNA instances. The gain factors range between 2

to 21 (GPU-DFA faster than CPU-DFA in all the instances).

The corresponding statistical tests included in the column

ST

in Tables 2

and 3 indicates that no statistical differences exists between them (

•

symbol).

As espected, this confirms that they are the same numerical model.

As final remarks regarding our approach, these preliminary results demon-

strate that GPU-DFA obtains best results in front of CPU-DFA. However, the

numerical performance was not as good as expected, but we have confidence in

the algorithmic performance since we have not yet introduced any specific func-

tion or operator related to any of the problems in particular. We have tested

our approach with different problems and demonstrated that our approximation

supports working with different instance sizes without a huge loss in time and

solution quality by using smaller configuration parameters. The eciency of the

canonical DFA algorithm has shown that the model is perfectly suited to the

GPU architecture.

6 Conclusions and Future Work

The work presented here is based on the Discrete Firefly Algorithm on GPU

for Permutation Problems. We performed the tests over two well-known discrete

problems: TSP and DNA-FAP. The algorithm was executed in a GPU plat-

form designed for massive parallel arithmetic computing. The new algorithm is

inspired by the successful experience gathered by the FA in different fields.