Information Technology Reference

In-Depth Information

1.4

1.2

1

0.8

0.6

0.4

0.2

0

0

2

4

6

8

10

k

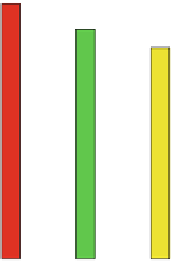

Fig. 5.4: The classification error for various values of

k

.

or 2 was identified. The feature vector was assigned to the class with the maximum

number of samples [7]. We have used nearest neighbor with a Euclidean distance

metric, considering values of 1, 3, 5, 7, and 9 for

k

. Figure 5.4 shows the classifica-

tion error for these values of

k

.

As can be seen in Fig. 5.4, the 5NN classifier outperformed other classifiers. So

we have chosen this

k

NN classifier in comparing the performance with the other

pattern recognition methods, described above.

5.5 Results

In this section we present experimental results. As discussed earlier, we experi-

mented with five classifier learning methods: FLD, SVM, GNB, correlation analy-

sis, and

k

NN. We report the performance of each classifier with various preprocess-

ing procedures mentioned in Section 5.4. By the obtained results we will be able to

decide with which preprocessing procedure the classifier has the best performance.

Performance for individual classifiers was measured by repeatedly splitting the data

into training and test sets and averaging classification performance on each test set.

Here the performance metric is a classification error. Table 5.1 shows the mean

classification error of different classifiers with different preprocessing procedures.

As can be seen, different preprocessing steps (denoising by SVD and dimension

reduction by PCA or LDA) had a substantial impact on prediction accuracy.

It is also clear that, in all experiments with different preprocessing procedures,

performance is best for the linear SVM. The mean classification error was less than

1.1% for this classifier. The different preprocessing procedure used has a weak ef-

fect on the performance of the both kNN and SVM classifiers. They approximately

perform equivalent (with classification error of less than 1.2%) and better than other