6.4.7 A Comparison of Postsession Self-Reported Metrics

Tullis and Stetson (2004) conducted a study in which we compared a variety of postsession questionnaires for measuring user reactions to websites in an online usability study. We studied the following questionnaires, adapted in the manner indicated for the evaluation of websites.

• SUS. It was adapted by replacing the word “system” in every question with “website.”

• QUIS. Three of the original rating scales that did not seem to be appropriate to websites were dropped (e.g., “Remembering names and use of commands”). The term “system” was replaced with “website,” and the term “screen” was generally replaced by “web page.”

• CSUQ. The term “system” or “computer system” was replaced by “website.”

• Microsoft's Product Reaction Cards. Each word was presented with a check box, and the user was asked to choose the words that best describe their interaction with the website. They were free to choose as many or as few words as they wished.

• Our Questionnaire. We had been using this questionnaire for several years in usability tests of websites. It was composed of nine positive statements (e.g., “This website is visually appealing”), to which the user responds on a seven-point Likert scale from “Strongly Disagree” to “Strongly Agree.”
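The term substitutions described in the list above can be sketched in a few lines of Python. This is only an illustration, not the procedure the study actually used: the function name `adapt_item` and the two mapping dictionaries are hypothetical, and the sample item is the first SUS statement. Longer terms are matched first so that “computer system” is replaced as a unit rather than leaving a stray “computer.”

```python
import re

def adapt_item(text, replacements):
    """Replace whole-word terms in a questionnaire item.

    Terms are tried longest first so that a phrase such as
    'computer system' is replaced before the shorter 'system'.
    """
    terms = sorted(replacements, key=len, reverse=True)
    pattern = re.compile(
        r"\b(" + "|".join(re.escape(t) for t in terms) + r")\b",
        re.IGNORECASE,
    )
    # Look up the matched term in lower case so 'System' also maps.
    return pattern.sub(lambda m: replacements[m.group(0).lower()], text)

# Hypothetical mapping tables mirroring the adaptations described above.
CSUQ_TERMS = {"computer system": "website", "system": "website"}
QUIS_TERMS = {"system": "website", "screen": "web page"}

sus_item = "I think that I would like to use this system frequently."
adapted = adapt_item(sus_item, {"system": "website"})
print(adapted)
```

A mechanical substitution like this is only a first pass. The QUIS adaptation above replaced “screen” only “generally,” which suggests that each adapted item still needs to be read and checked by hand.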

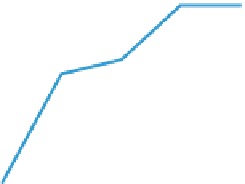

We used these questionnaires to evaluate two web portals in an online usability study. There were a total of 123 participants in the study, with each participant using one of the questionnaires to evaluate both websites. Participants performed two tasks on each website before completing the questionnaire for that site. When we analyzed the data from all the participants, we found that all five of the questionnaires revealed that Site 1 got significantly better ratings than Site 2. Data were then analyzed to determine what the results would have been at different sample sizes from 6 to 14, as shown in Figure 6.13. At a sample size of 6, only 30 to 40% of the samples would have identified that Site 1 was preferred significantly. But at a sample size of 8, which is relatively common in many lab-based usability tests, we found that SUS would have identified Site 1 as the preferred site 75% of the time, a significantly higher percentage than any of the other questionnaires.
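The subsampling analysis can be sketched roughly as follows. This is a minimal illustration under stated assumptions, not the study's actual procedure. The text does not say which significance test was used, how many random samples were drawn, or whether sampling was with replacement. The sketch assumes a paired t-test at the .05 level (two-tailed critical values, counted only when the difference favors Site 1), 1,000 samples drawn without replacement, and made-up scores. All function and variable names are hypothetical.

```python
import math
import random
import statistics

# Two-tailed critical t values at alpha = .05, keyed by degrees of
# freedom (n - 1), covering sample sizes 6 through 14.
T_CRIT_05 = {5: 2.571, 6: 2.447, 7: 2.365, 8: 2.306, 9: 2.262,
             10: 2.228, 11: 2.201, 12: 2.179, 13: 2.160}

def site1_significantly_better(pairs):
    """Paired t-test on (site1, site2) scores from the same participants.

    Returns True only when the difference is significant AND favors Site 1.
    """
    diffs = [s1 - s2 for s1, s2 in pairs]
    n = len(diffs)
    mean = statistics.fmean(diffs)
    sd = statistics.stdev(diffs)
    if sd == 0:  # every participant showed the same difference
        return mean > 0
    t = mean / (sd / math.sqrt(n))
    return t > T_CRIT_05[n - 1]

def percent_correct(pairs, sample_size, trials=1000, seed=0):
    """Percentage of random subsamples that reach the full-data conclusion
    (assumed here to be 'Site 1 is significantly better')."""
    rng = random.Random(seed)
    hits = sum(
        site1_significantly_better(rng.sample(pairs, sample_size))
        for _ in range(trials)
    )
    return 100.0 * hits / trials

# Made-up data for one questionnaire: 25 hypothetical participants,
# each with a 0-100 score for Site 1 and for Site 2.
data_rng = random.Random(42)
scores = [(data_rng.gauss(70, 15), data_rng.gauss(55, 15)) for _ in range(25)]

for n in range(6, 15, 2):
    print(n, percent_correct(scores, n))
```

Running the same loop on each questionnaire's real scores would give one curve per questionnaire, which is the kind of comparison Figure 6.13 plots.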

[Chart: Percent of “Correct” Conclusions by Sample Size. Vertical axis: 0% to 100%. Series: SUS, QUIS, CSUQ, Words, Ours.]

It's interesting to speculate why SUS appears to yield more consistent ratings at relatively small sample sizes. One reason may be its use of both positive and negative statements with which users must rate their level of agreement. This may keep participants more alert. Another possible reason

[Horizontal axis: Sample Size (6, 8, 10, 12, 14)]

Figure 6.13 Data illustrating the accuracy of results from random subsamples ranging from size 6 to 14. This graph shows what percentage of the random samples yielded the same answer as the full data set at the different sample sizes. Adapted from Tullis and Stetson (2004); used with permission.
