Calibration of Gamma Ray Detectors and Logs

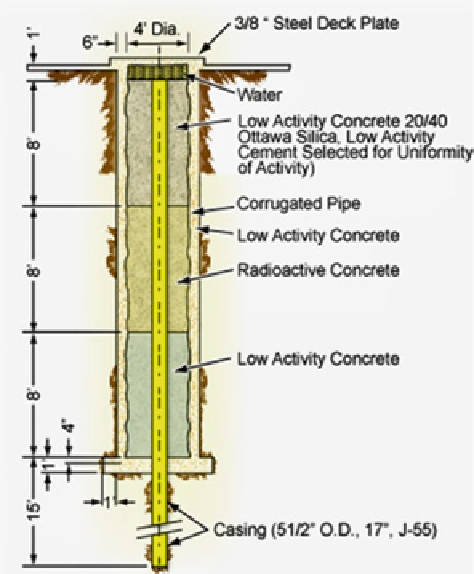

One of the problems of gamma ray logging is the choice of a standard calibration

system, since all logging companies use counters of different sizes and shapes

encased in steel housings with varying characteristics. On very old logs, the scale

might be quoted in micrograms of radium per ton of formation. For many reasons

this was found to be an unsatisfactory method of calibration for gamma ray logs, so

an API standard was devised. A test pit (installed at the University of Houston)

contains an “artificial shale,” as illustrated in Fig. 11.6. A cylindrical artificial well,

4 ft in diameter and 24 ft deep, contains a central 8-ft section consisting of cement

mixed with 13 ppm uranium, 24 ppm thorium, and 4% potassium. On either side

of this central section are 8-ft sections of neat Portland cement. This sandwich is

cased with 5½-in. J55 casing. The API standard defines the difference in gamma ray

count rate between the neat cement and the radioactively doped cement as 200 API

units. Any logging service company may place its gamma ray tool in this pit to

make a calibration. Field calibration is performed using a portable jig that contains

a radioactive pill. The pill is typically a low-activity radium-226 source (e.g.,

0.1 millicurie). When placed at a known radial distance from the center of the

gamma ray detector, it produces a known increase over the background count rate.

This increase is equivalent to a known number of API units, depending on the tool

type and size and the counter it encloses.
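The arithmetic behind the two calibration steps can be sketched in a few lines of Python. This is only an illustrative sketch, assuming a linear detector response and dead-time-corrected count rates in counts per second. The function names and all count rates are hypothetical, not values from the API standard or from any service company's procedure.

```python
def pit_sensitivity(cps_neat: float, cps_doped: float) -> float:
    """API units per count-per-second for one tool, from the test pit.

    The standard fixes the difference between the doped-cement and
    neat-cement readings at 200 API units.
    """
    span = cps_doped - cps_neat
    if span <= 0:
        raise ValueError("doped-zone count rate must exceed neat-zone rate")
    return 200.0 / span


def jig_api_equivalent(cps_background: float, cps_with_jig: float,
                       sensitivity: float) -> float:
    """API units represented by the jig for this tool type.

    Determined once, with a tool whose pit sensitivity is known.
    """
    return (cps_with_jig - cps_background) * sensitivity


def field_sensitivity(cps_background: float, cps_with_jig: float,
                      jig_api: float) -> float:
    """Re-derive API units per cps in the field from the jig response."""
    increase = cps_with_jig - cps_background
    if increase <= 0:
        raise ValueError("jig must raise the count rate above background")
    return jig_api / increase


# Hypothetical pit readings: 40 cps in neat cement, 440 cps in the doped zone.
sens = pit_sensitivity(40.0, 440.0)               # 0.5 API units per cps

# Hypothetical jig check on the same tool: 20 cps background, 350 cps with jig.
jig_api = jig_api_equivalent(20.0, 350.0, sens)   # 165.0 API units

# Later, at the wellsite, the jig raises the rate from 25 to 325 cps.
sens_field = field_sensitivity(25.0, 325.0, jig_api)
print(sens, jig_api, sens_field)
```

Because the jig's API equivalent depends on the tool type, size, and counter, a value derived this way applies only to tools of the same design as the one run in the pit.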

Fig. 11.6 API Gamma Ray Test Pit at the University of Houston
