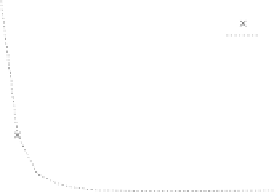

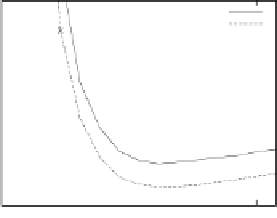

[Figure panels (a) and (b): mean squared error plotted against Iteration (2 to 14). Panel (a), vertical axis 0 to 0.1, curves: small RNN TRN, large RNN TRN, small RNN TST, large RNN TST. Panel (b), vertical axis 0.006 to 0.01, curves: small RNN, large RNN, small FFNN, large FFNN.]

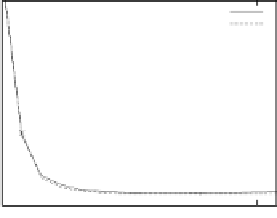

Fig. 9.10. Mean squared error of super-resolution: (a) the recurrent network on the training set and the test set; (b) detailed view of the test set performance, compared to FFNN.

9.3 Filling-in Occlusions

Occlusion is a major source of image degradation when capturing real-world images. Many computer vision systems fail if the object of interest is not completely visible. In contrast, humans can recognize partially occluded objects easily. Obviously, we subconsciously fill in the missing parts when analyzing a scene. In fact, we are surprised when an occlusion ends and the object part that appears does not look as expected. Johnson et al. [113] have shown that this holds even for infants. By 4 months of age, infants appear to perceive the unity of two rod sections extending from behind an occluding object [112, 121].

Few attempts have been made to reproduce this behavior in computer vision systems. One example is the approach of Dell'Acqua and Fisher [51]. They recently proposed a method for the reconstruction of planar surfaces, such as walls, occluded by objects in range scans. The scene is partitioned into surfaces at depth discontinuities and fold edges. The surfaces are then grouped together if they lie on the same plane. If they contain holes, these are filled using linear interpolation between the border points.
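The hole-filling step can be illustrated on a single scanline. The following is a minimal sketch, not the procedure of [51]: it assumes a 1-D depth profile and a boolean validity mask, and the names `fill_holes_linear`, `scanline`, and `mask` are hypothetical.

```python
import numpy as np

def fill_holes_linear(depth, valid):
    """Fill invalid samples of a 1-D depth profile by linear interpolation
    between the nearest valid samples on either side (the border points)."""
    depth = np.asarray(depth, dtype=float)
    valid = np.asarray(valid, dtype=bool)
    idx = np.arange(depth.size)
    filled = depth.copy()
    # np.interp interpolates linearly between the valid samples; positions
    # outside the valid range are clamped to the nearest valid value.
    filled[~valid] = np.interp(idx[~valid], idx[valid], depth[valid])
    return filled

# Hypothetical scanline: three samples in the middle are occluded.
scanline = np.array([2.0, 2.5, 0.0, 0.0, 0.0, 4.5, 5.0])
mask = np.array([True, True, False, False, False, True, True])
result = fill_holes_linear(scanline, mask)
print(result)
```

For a planar surface seen in a range scan, the depth along a scanline is linear, so interpolating between the border points recovers the occluded part of the plane in this idealized setting.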

Another example is the face recognition system described by O'Toole et al. [171]. They used average facial range and texture maps to complete occluded parts of an observed face.
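One simple reading of completion with an average map is a per-pixel substitution: visible pixels are kept and occluded pixels are taken from the mean over example maps. The sketch below assumes aligned maps and a known visibility mask; the function name and the toy data are hypothetical, and the system of [171] may work differently in detail.

```python
import numpy as np

def complete_with_average(observed, visible, examples):
    """Keep visible pixels of `observed`; replace occluded pixels with the
    per-pixel mean of the aligned example maps."""
    average = np.mean(examples, axis=0)
    return np.where(visible, observed, average)

# Hypothetical 2x2 "maps" standing in for aligned range or texture maps.
examples = np.array([[[0.2, 0.4], [0.6, 0.8]],
                     [[0.4, 0.6], [0.8, 1.0]]])
observed = np.array([[0.25, 0.0], [0.0, 0.9]])
visible = np.array([[True, False], [False, True]])
completed = complete_with_average(observed, visible, examples)
print(completed)
```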

There are several neural models for pattern completion, including the ones proposed by Kohonen [126] and by Hopfield [101]. Usually they work in auto-associative mode. After some training patterns have been stored in the weights of the network, partial or noisy versions of them can be transformed into one of the stored patterns.
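Auto-associative recall can be sketched with a small Hopfield-style network. This is a textbook illustration under assumptions not stated above: bipolar (+1/-1) patterns, Hebbian outer-product storage, and asynchronous sign updates. The names `train_hebbian` and `recall` are hypothetical.

```python
import numpy as np

def train_hebbian(patterns):
    """Store bipolar patterns in a symmetric weight matrix (Hebbian rule)."""
    patterns = np.asarray(patterns, dtype=float)
    n = patterns.shape[1]
    weights = patterns.T @ patterns / n
    np.fill_diagonal(weights, 0.0)  # no self-connections
    return weights

def recall(weights, probe, max_sweeps=10):
    """Update units one at a time until no unit changes its state."""
    state = np.asarray(probe, dtype=float).copy()
    for _ in range(max_sweeps):
        changed = False
        for i in range(state.size):
            new_value = 1.0 if weights[i] @ state >= 0 else -1.0
            if new_value != state[i]:
                state[i] = new_value
                changed = True
        if not changed:
            break
    return state

# Two orthogonal patterns of length 8 are stored.
stored = np.array([[1, 1, 1, 1, -1, -1, -1, -1],
                   [1, -1, 1, -1, 1, -1, 1, -1]])
weights = train_hebbian(stored)

# A noisy version of the first pattern: its first element is flipped.
probe = stored[0].copy()
probe[0] = -probe[0]
restored = recall(weights, probe)
print(restored)
```

With only two orthogonal patterns the network is far below its storage capacity, so the corrupted probe falls back onto the stored pattern after a single update sweep.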

In particular, recurrent auto-associative networks can iteratively complete patterns and remove noise by minimizing an energy function. In these networks, patterns are stored as attractors. Bogacz and Chady [33] have demonstrated that a local connection structure improves pattern completion in such networks.
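As an illustration of what minimizing an energy function means here, the standard energy of a Hopfield-type network can be written down and its decrease under a single unit update verified. The symbols are assumptions introduced for this sketch: bipolar states s_i, symmetric weights w_ij with zero diagonal, zero thresholds, and h_k for the net input of unit k. The networks cited above may use other energy functions.

```latex
E(\mathbf{s}) = -\frac{1}{2} \sum_{i \neq j} w_{ij}\, s_i s_j ,
\qquad
h_k = \sum_{j \neq k} w_{kj}\, s_j .

% Updating a single unit k to s_k' = \operatorname{sgn}(h_k) changes the energy by
\Delta E = -(s_k' - s_k)\, h_k =
\begin{cases}
0 & \text{if } s_k' = s_k, \\
-2\,\lvert h_k \rvert \le 0 & \text{if } s_k' = -s_k .
\end{cases}
```

Because each update leaves the energy unchanged or lowers it, and the number of states is finite, asynchronous updating ends in a local minimum. These minima are the attractors in which the patterns are stored.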

Continuous attractor networks were proposed by Seung [209] to complete images with occlusions. For digits belonging to a common class, he trained a two-layer
