
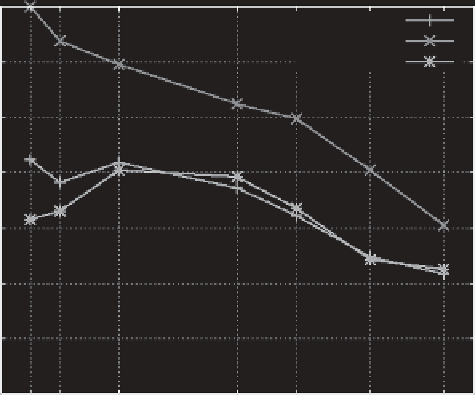

1

F-measure*

Precision*

Recall

0.9

0.8

0.7

0.6

0.5

0.4

0.3

k=2k=4

k=8

k=16

k=20

k=25

k=30

Figure 9.8 Clustering comparison by F-measure.

Attack Model

The aim of these two methods is to protect individuals in trajectory data under the linkage attack model, which is similar to the one described in the previous section. The only difference is that the adversary knows that every location in the anonymized trajectories must also appear in the original data. This matters because linking a location to a specific user could reveal that user's exact location rather than a generalized one. Two attacks can therefore be identified: (a) finding the anonymized version of a specific real trajectory; and (b) determining whether a location belongs to a specific trajectory.

Privacy-Preserving Techniques

How is it possible to guarantee that the attacks just described succeed only with very low probability, while preserving the utility of the data for meaningful analyses?
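The countermeasure against attack (a), described in the next paragraph, works cluster by cluster. The minimal Python sketch below illustrates only its location-grouping step inside one cluster: a random trajectory is chosen, and each of its unswapped locations $l$ is grouped with $k-1$ unswapped locations from other trajectories whose time stamps and coordinates fall within a time threshold and a space threshold. It is not the authors' implementation. It assumes the clusters have already been produced by microaggregation, that candidates are compared with $l$ itself rather than pairwise, that the spatial difference is a Euclidean distance, and that the closest qualifying candidate per trajectory is taken. The names `Location`, `find_swap_group`, `group_locations`, `time_threshold`, and `space_threshold` are hypothetical, and the random swap applied to each group is not shown.

```python
import math
import random
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass
class Location:
    x: float
    y: float
    t: float
    swapped: bool = False  # set to True once the location has been grouped (hypothetical flag)


Trajectory = List[Location]
Slot = Tuple[int, int]  # (trajectory index within the cluster, location index)


def find_swap_group(cluster: List[Trajectory], pivot: int, li: int, k: int,
                    time_threshold: float, space_threshold: float) -> Optional[List[Slot]]:
    """Try to group l = cluster[pivot][li] with k - 1 unswapped locations,
    each taken from a different trajectory of the same cluster.

    Assumptions: candidates are compared with l itself (not pairwise), the
    spatial difference is Euclidean distance, and the closest qualifying
    candidate of each trajectory is chosen."""
    l = cluster[pivot][li]
    group: List[Slot] = [(pivot, li)]
    for ti, traj in enumerate(cluster):
        if len(group) == k:
            break
        if ti == pivot:
            continue  # the grouped locations must come from different trajectories
        best, best_d = None, math.inf
        for ci, cand in enumerate(traj):
            # Property (1): time stamps differ by at most the time threshold.
            if cand.swapped or abs(cand.t - l.t) > time_threshold:
                continue
            d = math.hypot(cand.x - l.x, cand.y - l.y)
            # Property (2): coordinates differ by at most the space threshold.
            if d <= space_threshold and d < best_d:
                best, best_d = ci, d
        if best is not None:
            group.append((ti, best))
    return group if len(group) == k else None


def group_locations(cluster: List[Trajectory], k: int, time_threshold: float,
                    space_threshold: float, rng: random.Random) -> List[List[Slot]]:
    """Pick a random trajectory of the cluster and try to group each of its
    unswapped locations. The random swap of each group's locations is not
    shown; this sketch only marks the grouped locations as swapped."""
    pivot = rng.randrange(len(cluster))
    groups: List[List[Slot]] = []
    for li, loc in enumerate(cluster[pivot]):
        if loc.swapped:
            continue
        group = find_swap_group(cluster, pivot, li, k, time_threshold, space_threshold)
        if group is not None:
            for ti, ci in group:
                cluster[ti][ci].swapped = True
            groups.append(group)
    return groups


if __name__ == "__main__":
    cluster = [
        [Location(0.0, 0.0, 0), Location(1.0, 1.0, 10)],
        [Location(0.1, 0.0, 1), Location(1.2, 1.0, 11)],
        [Location(5.0, 5.0, 0), Location(1.0, 1.1, 9)],
    ]
    # Group the first location of trajectory 0 with one other location (k = 2).
    print(find_swap_group(cluster, 0, 0, k=2, time_threshold=2, space_threshold=0.5))
    # → [(0, 0), (1, 0)]
    print(group_locations(cluster, k=2, time_threshold=2, space_threshold=0.5,
                          rng=random.Random(1)))
```

Taking the closest qualifying candidate in each trajectory is only one possible heuristic; any candidate that meets both thresholds satisfies properties (1) and (2), and a location for which no group of size $k$ can be formed is simply left unswapped in this sketch.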

The countermeasure against attack (a) uses microaggregation to partition the set of trajectories into several clusters while minimizing the sum of the intracluster distances. The cardinality of each cluster must be between $k+1$ and $2k-1$. The purpose of setting $k$ as the cluster size is to obtain trajectory $k$-anonymity. Given a cluster, the algorithm takes a random trajectory and attempts to group each unswapped location $l$ of this trajectory with $k-1$ other unswapped locations. These locations must belong to different trajectories and must satisfy the following properties: (1) their time stamps differ by no more than a specific time threshold; and (2) their spatial coordinates differ by no more than a space threshold. Given a cluster, random swaps