
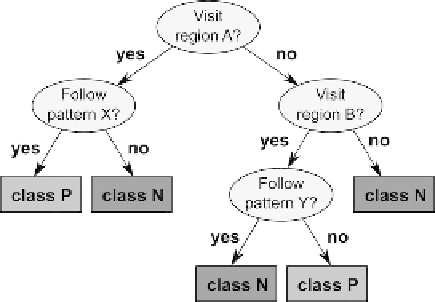

Figure 6.8 Sample decision tree on regions and patterns.

of trajectories identifiable, and the two types of patterns collaborate to better characterize trajectories.

Once a vector of features has been computed for each trajectory, we can choose any generic, vector-based classification algorithm. One representative (and easy to grasp) example is decision trees. The resulting classification model has the structure of a tree whose internal nodes represent tests on the features of the object to classify and whose leaves indicate the class to assign to that object. Figure 6.8 shows a fictitious example based on TraClass features, with two classes: positive (P) and negative (N). When a new trajectory needs to be classified, the test at the root (the top circle) is performed on it first. In the example, if the trajectory actually visits region A, we move to the left child of the root and continue the evaluation from there; otherwise, we move to the right child. In the first case, we now test whether the trajectory follows pattern X: if it does, the trajectory is labeled “class P”; otherwise, it is labeled “class N.” Classification proceeds in the same way for other outcomes, always starting from the root and descending along a path until a leaf is reached, which provides the predicted label. Another way to read a decision tree is as a set of decision rules, one for each root-to-leaf path, such as “If (Visit region A) AND (Follow pattern X) THEN Class P.”
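The descent from root to leaf and the reading of a tree as a set of rules can be sketched in a few lines of Python. This is a minimal illustration, not the TraClass implementation: it assumes each trajectory has already been reduced to boolean features (the names "Visit region A" and "Follow pattern X" come from the example above), it treats a missing feature as false, and, because the right subtree of Figure 6.8 is not described in the text, it uses a single hypothetical "N" leaf as a placeholder there.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple, Union

# Boolean feature vector of one trajectory, keyed by feature name.
# Feature names are illustrative; a real system would compute them
# from region visits and pattern matches.
Features = Dict[str, bool]


@dataclass
class Leaf:
    label: str  # class predicted for trajectories that reach this leaf


@dataclass
class Node:
    feature: str       # boolean test performed at this internal node
    if_true: "Tree"    # left child: the test succeeds
    if_false: "Tree"   # right child: the test fails


Tree = Union[Node, Leaf]


def classify(tree: Tree, features: Features) -> str:
    """Start at the root and follow one test outcome at a time until a leaf."""
    node = tree
    while isinstance(node, Node):
        # A feature absent from the vector is treated as False (an assumption).
        node = node.if_true if features.get(node.feature, False) else node.if_false
    return node.label


def extract_rules(tree: Tree, conditions: Tuple[str, ...] = ()) -> List[str]:
    """Return one IF ... THEN ... rule per root-to-leaf path, left paths first."""
    if isinstance(tree, Leaf):
        premise = " AND ".join(conditions) if conditions else "TRUE"
        return [f"IF {premise} THEN Class {tree.label}"]
    return (extract_rules(tree.if_true, conditions + (f"({tree.feature})",))
            + extract_rules(tree.if_false, conditions + (f"NOT ({tree.feature})",)))


# Tree modeled on the left branch described for Figure 6.8.
example_tree = Node(
    "Visit region A",
    if_true=Node("Follow pattern X", if_true=Leaf("P"), if_false=Leaf("N")),
    if_false=Leaf("N"),  # hypothetical placeholder for the undescribed right subtree
)

print(classify(example_tree, {"Visit region A": True, "Follow pattern X": True}))
for rule in extract_rules(example_tree):
    print(rule)
```

With this tree, a trajectory that visits region A and follows pattern X is labeled P, and the first extracted rule is "IF (Visit region A) AND (Follow pattern X) THEN Class P", which mirrors the rule quoted in the text. Keeping tests and leaves as separate small types makes both classification and rule extraction simple structural recursions over the same tree.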

6.3.3 Trajectory Location Prediction

Trajectory classification can be seen as the problem of predicting a categorical variable associated with a trajectory. However, prediction is most naturally related to the temporal evolution of variables. Since the most basic attribute of a moving object in the context of trajectories is its location, predicting its future position appears to be a problem of primary interest.