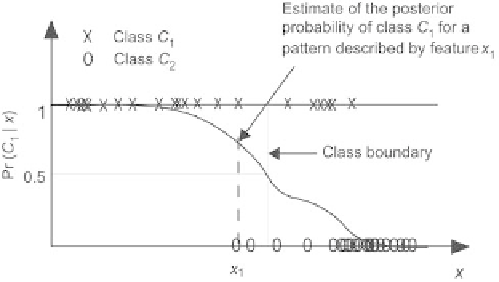

Fig. 1.26. Estimate of the posterior probability of class C_i, and boundary between classes from Bayes decision rule

classification by neural networks. A lucid and detailed description of that approach is given in C. Bishop's excellent book [Bishop 1995].

1.3.5.2 C-Class Problems

When the number of classes involved in a classification problem is larger than two, two strategies can be implemented:

• find a global solution to the problem by simultaneously estimating the posterior probabilities of all classes;

• split the problem into two-class subproblems, design a set of pairwise classifiers that solve the subproblems, and combine the results of the pairwise classifiers into a single posterior probability per class.

We will consider those strategies in the following subsections.
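To make the second strategy concrete, the sketch below shows one way pairwise results can be merged into a single posterior per class. It is an illustration rather than the method of this text: it assumes each pairwise classifier outputs the exact conditional probability Pr_ij = P(C_i | x, class is C_i or C_j), in which case Bayes' rule gives P(C_i | x) = 1 / (sum over j ≠ i of 1/Pr_ij − (C − 2)). The function names and the example posteriors are hypothetical.

```python
def pairwise_from_posteriors(p):
    """Build ideal pairwise outputs Pr_ij = p_i / (p_i + p_j) from known posteriors.

    Used here only to generate test inputs; a real system would obtain
    Pr_ij from trained two-class classifiers. Assumes every p_i > 0.
    """
    C = len(p)
    return [[p[i] / (p[i] + p[j]) if i != j else None for j in range(C)]
            for i in range(C)]


def combine_pairwise(pr):
    """Combine pairwise probabilities into one posterior per class.

    Uses P(C_i | x) = 1 / (sum_{j != i} 1/Pr_ij - (C - 2)), which is exact
    only if the pairwise outputs are exact conditional probabilities.
    """
    C = len(pr)
    posteriors = []
    for i in range(C):
        s = sum(1.0 / pr[i][j] for j in range(C) if j != i)
        posteriors.append(1.0 / (s - (C - 2)))
    return posteriors


# Hypothetical three-class example at a single input x.
true_posteriors = [0.6, 0.3, 0.1]
pairwise = pairwise_from_posteriors(true_posteriors)
recovered = combine_pairwise(pairwise)
print(recovered)
```

With noisy pairwise estimates the recovered values need not sum exactly to one, so a renormalization step may be needed in practice.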

Global Strategy

That is the most popular approach, although it is not always the most efficient, especially for difficult classification tasks. For a C-class problem, a feedforward neural network with C outputs is designed (Fig. 1.27), so that the result is encoded in a 1-out-of-C code: the event “the pattern belongs to class C_i” is signaled by the fact that the output vector g has a single nonzero component, which is component number i. As in the two-class case, it can be proved that the expectation values of the components of vector g are the posterior probabilities of the classes.
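The link between the 1-out-of-C code and posterior probabilities can be checked numerically. The sketch below is a simulation under stated assumptions, not a proof: it fixes a single input x, assumes hypothetical posteriors P(C_i | x), draws class labels accordingly, encodes them, and averages the codes. That average is also the constant output that minimizes the squared error against the targets. The helper `one_hot` and all numeric values are illustrative, and class indices are 0-based here whereas the text counts components from 1.

```python
import random


def one_hot(i, C):
    """Return the 1-out-of-C code for class index i (0-based)."""
    return [1.0 if k == i else 0.0 for k in range(C)]


random.seed(0)
posteriors = [0.6, 0.3, 0.1]  # assumed P(C_i | x) at one fixed input x
C = len(posteriors)
N = 20000                     # number of simulated patterns at that x

# Draw a class label for each pattern, then encode it in 1-out-of-C form.
labels = random.choices(range(C), weights=posteriors, k=N)
targets = [one_hot(i, C) for i in labels]

# Component-wise mean of the codes: an empirical estimate of the
# expectation of each component of the target vector.
mean_target = [sum(t[k] for t in targets) / N for k in range(C)]
print(mean_target)
```

The printed means should lie close to the assumed posteriors, with the gap shrinking as N grows.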

In neural network parlance, a one-out-of-C encoding is known as a grandmother code. That refers to a much-debated theory of data representation in nervous systems, whereby some neurons are specialized for the recognition of usual shapes, such as our grandmother's face.
