
Fig. 1.24. Classification achieved by Bayes classifier

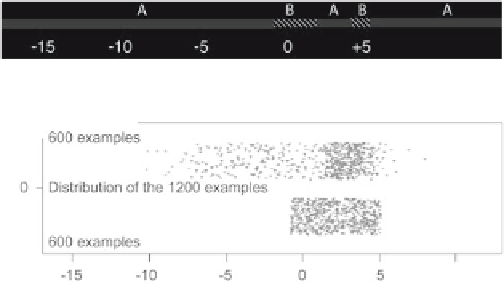

Fig. 1.25. Examples used for estimating the misclassification rate. Top: class A; bottom: class B

In order to estimate the misclassification rate of the resulting Bayes classifier, a large number of realizations of examples of each class are generated, and the proportion of misclassified examples is computed. 600 examples of each class were generated (Fig. 1.25), and, by simple counting, the misclassification rate was estimated to be equal to 30.1%. Therefore, it can be claimed that no classifier, however carefully designed, can achieve a rate of correct classification higher than 69.9%. The best classifiers are the classifiers that come closest to that theoretical limit.
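The counting procedure described above can be sketched in a few lines. This is only an illustration under stated assumptions: the class densities used for Fig. 1.24 and Fig. 1.25 are not given in this passage, so the sketch assumes two hypothetical one-dimensional Gaussian classes with equal priors and equal variance. The function names (`bayes_decision`, `estimate_error_rate`, `exact_error_rate`) are likewise invented for the example.

```python
import math
import random


def gaussian_pdf(x, mean, std):
    """Density of a 1-D Gaussian with the given mean and standard deviation."""
    z = (x - mean) / std
    return math.exp(-0.5 * z * z) / (std * math.sqrt(2.0 * math.pi))


def bayes_decision(x, mean_a, mean_b, std):
    """Bayes rule for equal priors: choose the class with the larger density."""
    if gaussian_pdf(x, mean_a, std) >= gaussian_pdf(x, mean_b, std):
        return "A"
    return "B"


def estimate_error_rate(n_per_class, mean_a=0.0, mean_b=1.0, std=1.0, seed=0):
    """Generate n_per_class examples of each class and count the mistakes.

    The Gaussian parameters are assumptions made for this sketch,
    not the distributions used in the figures.
    """
    rng = random.Random(seed)
    errors = 0
    for _ in range(n_per_class):
        # One example drawn from class A, one from class B.
        if bayes_decision(rng.gauss(mean_a, std), mean_a, mean_b, std) != "A":
            errors += 1
        if bayes_decision(rng.gauss(mean_b, std), mean_a, mean_b, std) != "B":
            errors += 1
    return errors / (2 * n_per_class)


def exact_error_rate(mean_a=0.0, mean_b=1.0, std=1.0):
    """Closed-form Bayes error for equal priors and equal variances:
    Phi(-|mean_b - mean_a| / (2 * std)), with Phi the standard normal CDF."""
    d = abs(mean_b - mean_a) / (2.0 * std)
    return 0.5 * math.erfc(d / math.sqrt(2.0))


if __name__ == "__main__":
    print("estimated with 600 examples per class:", estimate_error_rate(600))
    print("closed-form value for this toy problem:", exact_error_rate())
```

With only 600 examples per class the estimate fluctuates from one draw to the next. Increasing `n_per_class` brings it closer to the closed-form value, which is the limit no classifier can beat on this toy problem.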

1.3.5 Classification and Regression

The previous section was devoted to the probabilistic foundations of classification. We are going to show why neural networks, which are regression tools, are relevant to classification tasks.

1.3.5.1 Two-Class Problems

We first consider a problem with two classes $C_1$ and $C_2$, and an associated random variable $\Gamma$, which is a function of the vector of descriptors $x$; that random variable is equal to 1 when the pattern belongs to class $C_1$, and 0 otherwise. We prove the following result: the regression function of the random variable $\Gamma$ is the posterior probability of class $C_1$.

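The regression function $y(x)$ of variable $\Gamma$ is the expectation value of $\Gamma$ given $x$: $y(x) = E(\Gamma \mid x)$. In addition, one has:

$$E(\Gamma \mid x) = \Pr(\Gamma = 1 \mid x) \times 1 + \Pr(\Gamma = 0 \mid x) \times 0 = \Pr(\Gamma = 1 \mid x)$$

Since $\Gamma = 1$ exactly when the pattern belongs to class $C_1$, $\Pr(\Gamma = 1 \mid x)$ is the posterior probability of class $C_1$, which proves the result.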
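The result can be checked numerically by averaging the indicator variable over examples whose descriptor falls near a given point, and comparing that average with the posterior probability given by Bayes' formula. The sketch below is a minimal illustration under assumptions that are not in the text: a scalar descriptor, two hypothetical Gaussian class densities with equal priors, and a crude local average standing in for the regression function. The names `posterior_c1` and `empirical_regression` are invented for the example.

```python
import math
import random


def gaussian_pdf(x, mean, std):
    """Density of a 1-D Gaussian with the given mean and standard deviation."""
    z = (x - mean) / std
    return math.exp(-0.5 * z * z) / (std * math.sqrt(2.0 * math.pi))


def posterior_c1(x, mean1=0.0, mean2=1.0, std=1.0, prior1=0.5):
    """Posterior probability of class C1 at x, from Bayes' formula.
    The Gaussian class densities are assumptions made for this sketch."""
    num = prior1 * gaussian_pdf(x, mean1, std)
    den = num + (1.0 - prior1) * gaussian_pdf(x, mean2, std)
    return num / den


def empirical_regression(x0, half_width=0.1, n=200000, seed=0,
                         mean1=0.0, mean2=1.0, std=1.0, prior1=0.5):
    """Estimate E(Gamma | x near x0) by averaging Gamma over the examples
    whose descriptor lies within half_width of x0."""
    rng = random.Random(seed)
    total = 0
    count = 0
    for _ in range(n):
        # Gamma = 1 when the pattern belongs to C1, 0 otherwise.
        gamma = 1 if rng.random() < prior1 else 0
        x = rng.gauss(mean1 if gamma == 1 else mean2, std)
        if abs(x - x0) <= half_width:
            total += gamma
            count += 1
    if count == 0:
        raise ValueError("no example fell in the window around x0")
    return total / count


if __name__ == "__main__":
    for x0 in (-1.0, 0.0, 0.5, 1.5):
        print(x0, empirical_regression(x0), posterior_c1(x0))
```

The local average is only one possible estimator of the regression function. It is used here because it makes the equality between the conditional mean of the indicator and the posterior probability directly visible.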

Neural networks are powerful tools for estimating regression functions from examples. Therefore, neural networks are powerful tools for estimating posterior probabilities, as illustrated in Fig. 1.26; this is the rationale for performing
