Information Technology Reference

In-Depth Information

1.0

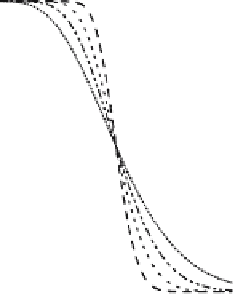

N=10

N=20

N=40

N=100

N =

∞

P(

α

)

0.5

0.0

0

1

2

3

4

α

Fig. 6.21.

Probability of linear separation of

M

points in

N

dimensions

For

N

large, the probability of linear separation is almost 1 if

M

≤

2

N

,

and drops abruptly to zero beyond

M/N

∼

2. Hence, although we cannot

guarantee that

any

training set with

M

2

N

is linearly separable, this is

highly probable; the larger

N

and

M

, the larger the probability of linear

separation when

α<

2. In the thermodynamic limit

N

≤

,

with

α ≡ M/N

= constant, the

typical capacity

of the perceptron is

α

c

=2.

Strictly speaking,

α

c

indicates the transition between the regime where linear

separability has probability 1 and the regime where that probability vanishes,

in the thermodynamic limit. It is important to emphasize that the behavior

of

P

LS

(

L

M

) is already very close to the asymptotic thermodynamic limit for

values of

N

of the order of 100. That is why typical learning properties provide

very useful hints for systems with large, but finite, dimension

N

.

→∞

,

M

→∞

6.8 Additional Theoretical Material

6.8.1 Bounds to the Number of Iterations of the Perceptron

Algorithm

In this section, we provide the computation of the bounds used to prove the

convergence of the perceptron algorithm. In order to obtain a lower bound

to the norm of the weight vector, we take into account the fact that

w

∗

is

unitary:

w

∗

.

We denote by

k

(

t

) the label of the examples learnt at iteration

t

. After iteration

t

, the weight vector

w

(

t

+ 1) can be written as

w

(

t

+1)

=

w

(

t

+1)

w

∗

≥

w

(

t

+1)

·

Search WWH ::

Custom Search