
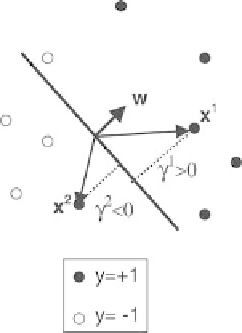

Fig. 6.6. Input space, with two examples $\mathbf{x}^1$ and $\mathbf{x}^2$ of class +; the hyperplane corresponding to a perceptron of weights $\mathbf{w}$ (with $\|\mathbf{w}\| = 1$) is shown, together with the stabilities $\gamma^1$ and $\gamma^2$ of the examples.
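The stabilities shown in Fig. 6.6 can be computed directly. The short Python sketch below assumes the usual definition $\gamma^k = y^k\,\mathbf{w}\cdot\mathbf{x}^k / \|\mathbf{w}\|$ with labels $y^k \in \{-1, +1\}$ (the definition is not restated in this excerpt), assumes any bias is absorbed as a component of $\mathbf{x}^k$, and uses hypothetical example values. A positive $\gamma^k$ means the example lies on the correct side of the hyperplane; with $\|\mathbf{w}\| = 1$, its magnitude is the distance from the example to the hyperplane.

```python
import math

def stabilities(w, examples):
    """Return gamma^k = y^k (w . x^k) / ||w|| for each (x^k, y^k) pair.

    Assumes labels y^k in {-1, +1} and that any bias term is already
    included as a component of both w and x^k.
    """
    norm_w = math.sqrt(sum(wi * wi for wi in w))
    gammas = []
    for x, y in examples:
        dot = sum(wi * xi for wi, xi in zip(w, x))
        gammas.append(y * dot / norm_w)
    return gammas

# Hypothetical data: two examples of class +1 and a unit-norm weight vector.
w = [0.6, 0.8]  # ||w|| = 1, as in the figure
examples = [([1.0, 2.0], +1), ([3.0, -1.0], +1)]
print(stabilities(w, examples))
```

Both stabilities come out positive, so both examples are correctly classified by this $\mathbf{w}$, the first one lying farther from the hyperplane than the second.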

6.4 Training Algorithms for the Perceptron

There are many learning algorithms that determine the perceptron weights from the training set $L_M = \{(\mathbf{x}^k, y^k),\ k = 1, \ldots, M\}$. Historically, the oldest one is the “perceptron algorithm”. Although it is seldom used in practice, it has interesting properties. We will see that the alternative training algorithms may be considered as generalizations of it.

Remark. If the examples of the training set are linearly separable, a perceptron should be able to learn the classification.
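Linear separability means that some weight vector $\mathbf{w}$ puts every example on the side of its class, i.e. $y^k\,\mathbf{w}\cdot\mathbf{x}^k > 0$ for all $k$. The Python sketch below checks that condition for a given $\mathbf{w}$. It assumes labels in $\{-1, +1\}$ and a constant first input component equal to 1 that plays the role of the bias; the logical AND data and the weights are hypothetical illustrations. For a set that is not linearly separable, such as XOR, no choice of $\mathbf{w}$ makes the check succeed.

```python
def separates(w, examples):
    """Return True if every example satisfies y^k (w . x^k) > 0, i.e. the
    hyperplane w . x = 0 puts each example on the side of its class."""
    return all(y * sum(wi * xi for wi, xi in zip(w, x)) > 0 for x, y in examples)

# Logical AND with labels in {-1, +1}; the first component is a constant 1
# standing in for the bias (an assumption of this sketch).
and_set = [
    ([1, 0, 0], -1),
    ([1, 0, 1], -1),
    ([1, 1, 0], -1),
    ([1, 1, 1], +1),
]
w_and = [-1.5, 1, 1]  # hypothetical weights: x1 + x2 - 1.5 > 0 only for (1, 1)
print(separates(w_and, and_set))  # True
```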

6.4.1 Perceptron Algorithm

The following algorithm was proposed by Rosenblatt to train the perceptron:

Perceptron Algorithm

• Initialization
1. $t = 0$ (counter of updates)
2. either $\mathbf{w}(0) = \mathbf{0}$ (tabula rasa initialization) or each component of $\mathbf{w}(0)$ is initialized at random.

• Test
1. if $z^k \equiv y^k\,\mathbf{w}(t)\cdot\mathbf{x}^k > 0$ for all examples $k = 1, 2, \ldots, M$ (they are correctly learned), then stop.
2. else go to learning.

• Learning
1. select an example $k$ of the training set $L_M$, either at random or following a pre-established order.
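The steps above can be sketched in Python as follows. The excerpt stops after the selection step, so the correction applied to a misclassified example is an assumption here: the classical Rosenblatt update $\mathbf{w}(t+1) = \mathbf{w}(t) + y^k\,\mathbf{x}^k$, performed only when $z^k \le 0$. The sketch also assumes labels in $\{-1, +1\}$ and a constant input component carrying the bias; `max_updates` and `seed` are hypothetical parameters added so the loop terminates when the training set is not linearly separable.

```python
import random

def dot(w, x):
    return sum(wi * xi for wi, xi in zip(w, x))

def perceptron(examples, random_init=False, max_updates=10_000, seed=0):
    """Sketch of the perceptron algorithm; returns (w, t), t = number of updates.

    examples: list of (x^k, y^k) with y^k in {-1, +1}.
    max_updates (hypothetical safeguard): without it, the loop never stops
    on a training set that is not linearly separable.
    """
    rng = random.Random(seed)
    n = len(examples[0][0])
    # Initialization: t = 0 and either w(0) = 0 or random components.
    t = 0
    w = [rng.uniform(-1, 1) for _ in range(n)] if random_init else [0.0] * n
    while t < max_updates:
        # Test: stop once z^k = y^k w(t) . x^k > 0 for every example.
        if all(y * dot(w, x) > 0 for x, y in examples):
            return w, t
        # Learning: select an example at random.
        x, y = rng.choice(examples)
        # Assumed Rosenblatt correction, applied only if z^k <= 0.
        if y * dot(w, x) <= 0:
            w = [wi + y * xi for wi, xi in zip(w, x)]
            t += 1
    raise RuntimeError("no separating weights found within max_updates")

# Usage on the (linearly separable) logical AND, bias as first component.
and_set = [([1, 0, 0], -1), ([1, 0, 1], -1), ([1, 1, 0], -1), ([1, 1, 1], +1)]
w, t = perceptron(and_set)
print(w, t)
```

Selecting examples at random is one of the two options the algorithm allows; replacing `rng.choice` with a cyclic pass over `examples` gives the pre-established-order variant.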
