
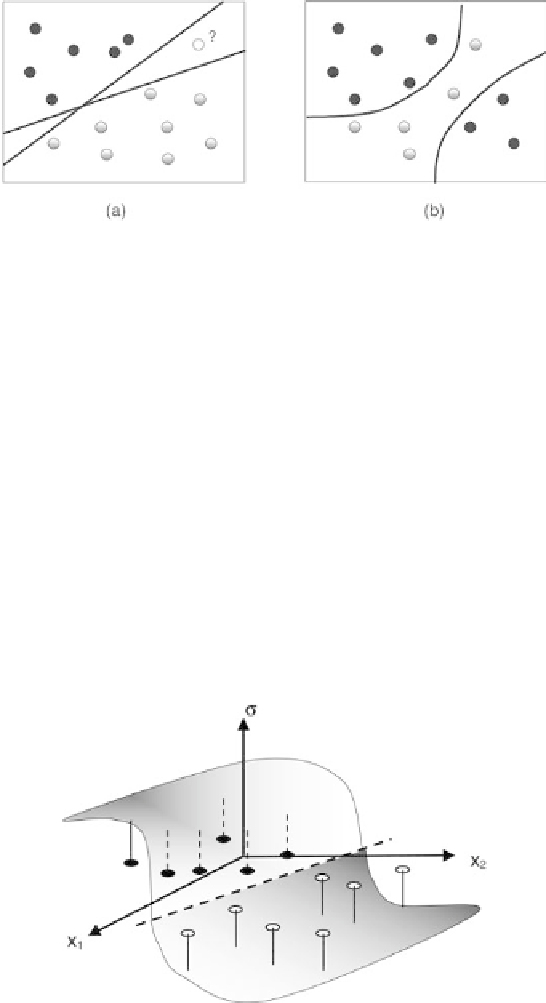

Fig. 6.1. Examples belonging to two classes in dimension N = 2. The lines represent the discriminant surfaces. (a) Linearly separable training set, showing two separations without training errors but giving different answers for the class of a new input (empty circle). (b) A general case
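The situation in panel (a) can be reproduced in a few lines of Python. This is a minimal sketch: the four training points, the two separating lines (x1 = 0 and x2 = 0), and the new input are hypothetical values chosen for illustration, and `binary_neuron` and `training_errors` are illustrative helpers, not functions taken from the text.

```python
# Hypothetical linearly separable training set in dimension N = 2.
train = [
    ((2.0, 2.0), +1),
    ((3.0, 1.0), +1),
    ((-2.0, -1.0), -1),
    ((-1.0, -3.0), -1),
]

def binary_neuron(w, b, x):
    """Threshold unit: +1 if w.x + b > 0, else -1."""
    v = w[0] * x[0] + w[1] * x[1] + b
    return 1 if v > 0 else -1

def training_errors(w, b, data):
    """Number of training examples misclassified by the separation (w, b)."""
    return sum(1 for x, y in data if binary_neuron(w, b, x) != y)

# Two hand-picked separations (not learned): the lines x1 = 0 and x2 = 0.
sep_a = ((1.0, 0.0), 0.0)
sep_b = ((0.0, 1.0), 0.0)

# A hypothetical new input lying between the two lines.
x_new = (1.0, -1.0)

print("errors:", training_errors(*sep_a, train), training_errors(*sep_b, train))
print("class of new input:", binary_neuron(*sep_a, x_new), binary_neuron(*sep_b, x_new))
```

Both separations make zero training errors (the first line prints `errors: 0 0`), yet they assign the new input to different classes (`class of new input: 1 -1`), which is why the training set alone does not determine a unique discriminant surface.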

• if one just needs to classify the patterns x, only the discriminant surface is needed. Since the classifier performs a binary function of its inputs, in this chapter we will show how the discriminant surface may be represented using binary neurons only. That cannot be done if we transform the problem into a regression problem;

• if the probability that the input pattern belongs to a given class is needed in order to make a subsequent decision (as is the case, for instance, when the classification is performed after comparing the outputs of different classifiers), we may use continuous output units (e.g., sigmoidal neurons), but also binary neurons. In this chapter we show that the latter can also be assigned a probabilistic interpretation.
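The two kinds of output unit mentioned in the list can be compared on the same weights. In this hypothetical sketch (weights, inputs, and function names are illustrative assumptions, not from the text), a binary neuron returns the sign of its potential, while a sigmoidal neuron returns a continuous value in [0, 1]. Reading that value as an estimate of the probability of class +1 is an assumption that depends on how the network is trained; the probabilistic interpretation of binary neurons developed in the chapter is not reproduced here. Thresholding the sigmoid at 0.5 gives the same discriminant surface as the binary neuron, because the sigmoid exceeds 0.5 exactly when the potential is positive.

```python
import math

def potential(w, b, x):
    """Weighted sum w.x + b computed by the neuron."""
    return sum(wi * xi for wi, xi in zip(w, x)) + b

def binary_neuron(w, b, x):
    """Binary (threshold) output: +1 on one side of the discriminant, -1 on the other."""
    return 1 if potential(w, b, x) > 0 else -1

def sigmoidal_neuron(w, b, x):
    """Continuous output in [0, 1], computed in a numerically stable way."""
    v = potential(w, b, x)
    if v >= 0:
        return 1.0 / (1.0 + math.exp(-v))
    z = math.exp(v)
    return z / (1.0 + z)

# Hypothetical weights and inputs, chosen only for illustration.
w, b = (1.0, -2.0), 0.5
inputs = [(0.0, 0.0), (1.0, 1.0), (3.0, 1.0), (-1.0, 0.5), (2.0, 1.25)]

for x in inputs:
    y_bin = binary_neuron(w, b, x)
    p_hat = sigmoidal_neuron(w, b, x)
    # Thresholding the sigmoid at 0.5 reproduces the binary decision,
    # because sigmoid(v) > 0.5 exactly when v > 0.
    y_from_sigmoid = 1 if p_hat > 0.5 else -1
    print(x, y_bin, round(p_hat, 3), y_from_sigmoid)
```

The last input lies exactly on the discriminant surface (potential 0): the binary neuron returns −1 and the sigmoid returns 0.5, so the two decisions still agree.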

Fig. 6.2. Examples in dimension 2. Black points stand for patterns of class +1; white points denote those of class −1. The shaded surface is the regression; the discriminant surface (a line in this case) is shown as a dotted line
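For classes labeled +1 and −1, the link between a regression surface such as the one in Fig. 6.2 and the discriminant surface can be made explicit, assuming that the regression is the conditional expectation of the label given x (the function that least-squares regression approximates). Writing C_{+1} and C_{-1} for the two classes:

```latex
\begin{aligned}
g(\mathbf{x}) &= \mathbb{E}[\,y \mid \mathbf{x}\,]
  = (+1)\,P(C_{+1} \mid \mathbf{x}) + (-1)\,P(C_{-1} \mid \mathbf{x}) \\
  &= 2\,P(C_{+1} \mid \mathbf{x}) - 1,
  \qquad \text{since } P(C_{-1} \mid \mathbf{x}) = 1 - P(C_{+1} \mid \mathbf{x}), \\[4pt]
\{\mathbf{x} : g(\mathbf{x}) = 0\} &= \{\mathbf{x} : P(C_{+1} \mid \mathbf{x}) = 1/2\}.
\end{aligned}
```

Under this assumption, classifying by the sign of g amounts to choosing the more probable class, and the discriminant surface is the level set g = 0 of the regression.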
