Information Technology Reference

In-Depth Information

A current value function

Q

n

is available, which determines the exploration

policy

π

n

.

A subset

E

n

of states is randomly selected.

For each state

x

k

in

E

n

, a feasible action

π

n

(

x

k

) is selected according to

the current exploration policy

π

n

. A transition occurs to the state

y

k

.

The elementary cost

c

(

x

k

,π

n

(

x

k

)

,y

k

) is taken into account.

Then, the construction of a current element of the training set is pos-

sible, which associates to the input (

x

k

,π

n

(

x

k

)) the output

Q

k

=

c

(

x

k

,π

n

(

x

k

)

,y

k

)+min

u/

(

y

k

,u

)

∈

A

Q

n

(

y

k

,u

).

A supervised learning epoch is implemented to update the approximate

value function

Q

n

, providing a new approximation

Q

n

+1

.

The previous iteration is repeated either from the current state set

E

n

+1

=

y

k

or from a new random selection of

E

n

+1

.

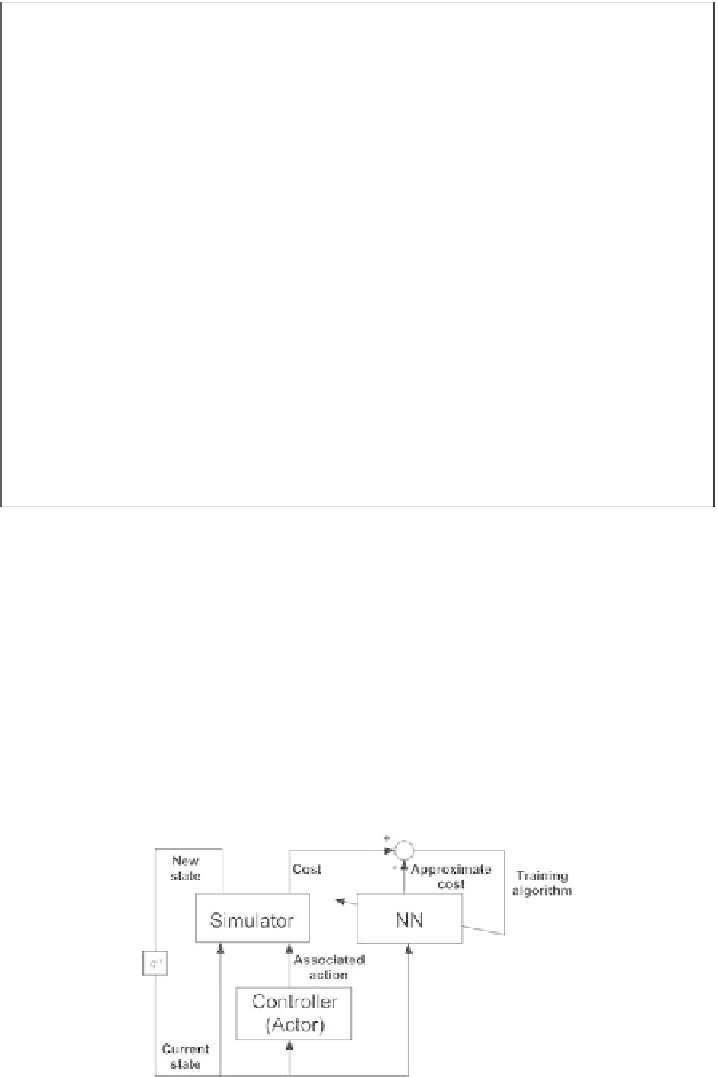

The diagram of that algorithm is shown on Fig. 5.14.

Note first there is no available general convergence proof for that algo-

rithm, which is quite similar to Q-learning. For practical purposes, the algo-

rithm is computationally economical, and the approximation is accurate if one

uses a relevant topology on the set of feasible state-action couples in order to

obtain an e

cient numerical encoding of the approximation input. The value

function must be regular with respect to that encoding in order to decrease

the time complexity of the supervised learning process.

To summarize, a good knowledge of the application context must be avail-

able, in order to compensate for the lack of generality of the algorithm. Those

Fig. 5.14.

Approximate assessment of a policy using neural network

Search WWH ::

Custom Search