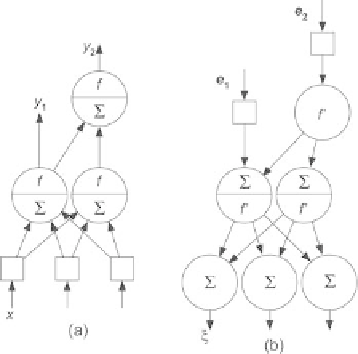

Fig. 4.20. Adjoint of a layered feedforward network. (a) Original network; $f$ stands for the transition operator through the nonlinear activation function. (b) Adjoint network; the notation $f$ stands for the linear product by the derivative of the function $f$ taken at the current point of the original network.

• backpropagation of the error through the adjoint unfolded network: for $k = 1$ to $p$, $\xi_{k-1}^{n+1} = g^{*}(\varepsilon^{n+1}, \xi^{n+1}, w)$.

Figure 4.20 illustrates the construction of the adjoint network in a simple case. Panel (a) shows a layered network with three inputs, a first layer made of a hidden neuron and an output neuron, and a second layer made of a single output neuron. The network thus performs a nonlinear mapping of $\mathbb{R}^3$ into $\mathbb{R}^2$. In panel (b), the adjoint network performs a linear mapping of $\mathbb{R}^2$ into $\mathbb{R}^3$. The inputs of the adjoint network are the error signals associated with the outputs of the original network. The mathematical definition is simple: the adjoint of the nonlinear mapping $y = g(x)$ is the linear mapping $\xi = [Dg(x)]^{T} \varepsilon$, where $[Dg(x)]^{T}$ is the transpose of the Jacobian matrix of $g$ at $x$, i.e., the matrix of the partial derivatives of $g$. The adjoint network is simply a graphical representation of the backpropagation algorithm, which is frequently used to compute the gradient of the cost function with respect to the parameters.
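The definition $\xi = [Dg(x)]^{T} \varepsilon$ can be checked numerically. The following is a minimal sketch, not the book's implementation: it assumes tanh activations, no biases, and hypothetical weights `A`, `B`, `C`, with a topology chosen to resemble panel (a) (three inputs, a hidden neuron and a first output neuron in the first layer, and a second output neuron fed by the hidden neuron). The function names are illustrative only.

```python
import math

# Hypothetical weights (not from the source): the three inputs feed a hidden
# neuron (weights A) and a first output neuron (weights B); the hidden neuron
# feeds a second output neuron through the scalar weight C.
A = [0.5, -0.3, 0.8]
B = [-0.2, 0.7, 0.1]
C = 1.5


def dot(u, v):
    return sum(ui * vi for ui, vi in zip(u, v))


def forward(x):
    """Original network g: R^3 -> R^2. Returns the outputs and the hidden state."""
    h = math.tanh(dot(A, x))
    y1 = math.tanh(dot(B, x))
    y2 = math.tanh(C * h)
    return [y1, y2], h


def adjoint(x, eps):
    """Adjoint network: xi = [Dg(x)]^T eps, computed by backpropagation."""
    (y1, y2), h = forward(x)
    d1 = (1.0 - y1 * y1) * eps[0]   # error through the tanh of output 1
    d2 = (1.0 - y2 * y2) * eps[1]   # error through the tanh of output 2
    dh = (1.0 - h * h) * C * d2     # back through weight C and the hidden tanh
    return [A[i] * dh + B[i] * d1 for i in range(3)]


def adjoint_by_finite_differences(x, eps, step=1e-6):
    """Reference: estimate each Jacobian column, then apply the transpose."""
    xi = []
    for i in range(3):
        xp = list(x)
        xm = list(x)
        xp[i] += step
        xm[i] -= step
        yp, _ = forward(xp)
        ym, _ = forward(xm)
        column = [(p - m) / (2 * step) for p, m in zip(yp, ym)]  # dg/dx_i
        xi.append(dot(column, eps))
    return xi
```

Calling `adjoint([0.2, -0.4, 0.6], [1.0, -0.5])` and `adjoint_by_finite_differences` with the same arguments should agree to within the finite-difference error, which illustrates that the adjoint pass never needs to form the Jacobian explicitly.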

Once the error signals of the adjoint network have been computed, the gradient of the quadratic error is obtained by applying the classical backpropagation rule. However, one must keep in mind that the network is an unfolded network: it has been duplicated $p$ times, where $p$ is the width of the time window. Therefore, the numerical value of a single connection weight is shared by several connections located at different places in the unfolded network.
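One consequence of weight sharing is that, by the chain rule, the gradient with respect to a shared weight is the sum of the contributions of all its copies in the unfolded network. The sketch below illustrates this on a deliberately simple case that is an assumption, not the network of the text: a scalar recurrent unit $x_k = \tanh(w\,x_{k-1} + u_k)$ unfolded over `p = len(inputs)` copies, with a quadratic error on the last state only. All names are hypothetical.

```python
import math


def unfold_forward(w, x0, inputs):
    """Run the p copies of the unit in sequence; returns the states x_0 .. x_p."""
    states = [x0]
    for u in inputs:
        states.append(math.tanh(w * states[-1] + u))
    return states


def cost(w, x0, inputs, target):
    """Quadratic error on the final state of the unfolded network."""
    return 0.5 * (unfold_forward(w, x0, inputs)[-1] - target) ** 2


def shared_weight_gradient(w, x0, inputs, target):
    """Gradient of the cost with respect to the single shared weight w."""
    states = unfold_forward(w, x0, inputs)
    xi = states[-1] - target                   # error signal entering the last copy
    grad = 0.0
    for k in range(len(inputs), 0, -1):        # copies p, p-1, ..., 1
        delta = (1.0 - states[k] ** 2) * xi    # error through the tanh of copy k
        grad += delta * states[k - 1]          # contribution of copy k's connection
        xi = w * delta                         # error signal passed on to copy k-1
    return grad
```

For example, with `w = 0.7`, `x0 = 0.1`, `inputs = [0.3, -0.2, 0.5]` and `target = 0.4`, the value returned by `shared_weight_gradient` can be compared with a central finite difference of `cost` with respect to `w`; the two should match closely, which supports accumulating the per-copy contributions rather than treating each copy's weight as independent.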
