

An efficient procedure for computing the leverages $h_{kk}$ is discussed in the additional material at the end of the chapter.

In the section devoted to the rank of the Jacobian matrix, we showed that it is useful to know whether that matrix has full rank. This can be checked as follows. The leverages are computed with the procedure described in the additional material, which remains accurate even if $Z$ does not have full rank, and the two relations above are checked. If they are not satisfied, then matrix $Z$ does not have full rank, and the model must therefore be discarded.
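The check can be sketched in Python with NumPy. This is a minimal sketch, not the procedure of the additional material. It assumes that the two relations are the standard properties of the leverages of a full-rank $N \times q$ matrix $Z$: each $h_{kk}$ lies between 0 and 1, and the leverages sum to $q$. It computes the leverages from a singular value decomposition in which singular values below a relative tolerance are treated as zero. The function names `leverages` and `has_full_rank`, the tolerances, and the random test matrices are hypothetical.

```python
import numpy as np

def leverages(Z, rtol=None):
    """Leverages h_kk: diagonal of the orthogonal projector onto the column space of Z.

    Z is the N x q Jacobian matrix (one row per example, one column per parameter).
    Singular values below rtol * (largest singular value) are treated as zero,
    so the computation stays well-defined when Z is rank-deficient.
    """
    Z = np.asarray(Z, dtype=float)
    U, s, _ = np.linalg.svd(Z, full_matrices=False)
    if rtol is None:
        # Same default tolerance as numpy.linalg.matrix_rank
        rtol = max(Z.shape) * np.finfo(float).eps
    keep = s > rtol * s[0]
    # h_kk = sum over the retained singular directions of U[k, i]^2
    return np.sum(U[:, keep] ** 2, axis=1)

def has_full_rank(Z, tol=1e-8):
    """Check 0 <= h_kk <= 1 for all k and sum_k h_kk = q (assumed relations)."""
    h = leverages(Z)
    q = np.asarray(Z).shape[1]
    in_unit_interval = np.all((h >= -tol) & (h <= 1.0 + tol))
    sums_to_q = abs(h.sum() - q) <= tol * q
    return bool(in_unit_interval and sums_to_q)

rng = np.random.default_rng(0)
Z_full = rng.normal(size=(50, 3))  # hypothetical full-rank Jacobian
# Hypothetical rank-deficient Jacobian: the third column duplicates the first
Z_deficient = np.column_stack([Z_full[:, 0], Z_full[:, 1], Z_full[:, 0]])
print(has_full_rank(Z_full), has_full_rank(Z_deficient))
print(leverages(Z_deficient).sum())  # equals the numerical rank, not q
```

The threshold is what makes the test informative. Without it, the thin factor `U` returned by `np.linalg.svd` always has $q$ orthonormal columns, so the leverages would sum to $q$ even for a rank-deficient $Z$. With it, they sum to the numerical rank of $Z$, and the relation $\sum_k h_{kk} = q$ fails.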

A particularly useful result for the estimation of the generalization error is the following: the prediction error $r_k^{(-k)}$ on example $k$, when the latter is withdrawn from the training set, can be estimated in a straightforward fashion from the prediction error $r_k$ on example $k$ when the latter is in the training set:

$$r_k^{(-k)} = \frac{r_k}{1 - h_{kk}}.$$

Here again, the result is exact in the case of a linear model (see for instance [Antoniadis et al. 1992]), and it is approximate for a nonlinear model.

A similar approach is discussed in [Hansen 1996] for models trained with regularization.
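For a model that is linear in its parameters, the exactness of the relation $r_k^{(-k)} = r_k / (1 - h_{kk})$ can be checked numerically. The following is a minimal NumPy sketch, not the neural-network computation used later in this section. It assumes a hypothetical cubic polynomial model, so that the Jacobian $Z$ is simply the design matrix. It takes 50 noisy samples of $\sin x / x$ on an assumed range $[-10, 10]$ and compares the virtual leave-one-out residuals with those of an explicit leave-one-out loop that refits the model $N$ times. The residual convention $r_k = y_k - g(x_k)$, the range, the model, and all function names are illustrative assumptions.

```python
import numpy as np

def hat_diagonal(Z):
    """Leverages h_kk of a full-rank N x q matrix Z, i.e. diag(Z (Z^T Z)^-1 Z^T)."""
    Q, _ = np.linalg.qr(Z)  # reduced QR: the columns of Q span the column space of Z
    return np.sum(Q ** 2, axis=1)

def virtual_loo_residuals(Z, y):
    """Estimate r_k^(-k) from the training residuals r_k, with a single fit."""
    theta, *_ = np.linalg.lstsq(Z, y, rcond=None)
    r = y - Z @ theta  # residuals with every example in the training set
    return r / (1.0 - hat_diagonal(Z))

def explicit_loo_residuals(Z, y):
    """Conventional leave-one-out: refit N times, each time without example k."""
    N = len(y)
    out = np.empty(N)
    for k in range(N):
        mask = np.arange(N) != k
        theta, *_ = np.linalg.lstsq(Z[mask], y[mask], rcond=None)
        out[k] = y[k] - Z[k] @ theta
    return out

def rms_score(residuals):
    """Root mean square of a set of leave-one-out residuals."""
    return np.sqrt(np.mean(residuals ** 2))

rng = np.random.default_rng(0)
x = np.linspace(-10.0, 10.0, 50)
# sin(x)/x written with np.sinc, which computes sin(pi t)/(pi t); noise variance 1e-2
y = np.sinc(x / np.pi) + rng.normal(scale=0.1, size=x.size)
Z = np.column_stack([np.ones_like(x), x, x ** 2, x ** 3])  # hypothetical linear-in-parameters model

r_virtual = virtual_loo_residuals(Z, y)
r_explicit = explicit_loo_residuals(Z, y)
print(np.max(np.abs(r_virtual - r_explicit)))  # rounding-level difference only
print(rms_score(r_virtual), rms_score(r_explicit))
```

The virtual version needs one fit instead of $N$. For a nonlinear model such as a neural network, the same formula applied to the leverages computed from the Jacobian is only an approximation.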

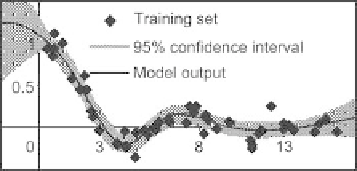

As an illustration, we describe an academic example: a set of 50 training examples is generated by adding Gaussian noise, with zero mean and variance $10^{-2}$, to the function $\sin x / x$. Figure 2.21 shows the training set and the output of a model with two hidden neurons. A conventional leave-one-out procedure, as described in a previous section, was carried out, providing the values of the quantities $r_k^{(-k)}$ (vertical axis of Fig. 2.22), and the previous relation was used to compute the values on the horizontal axis. All points lie very close to the bisector, which shows that the approximation is quite accurate. Therefore, the virtual leave-one-out score $E_p$,

$$E_p = \sqrt{\frac{1}{N}\sum_{k=1}^{N}\left(\frac{r_k}{1 - h_{kk}}\right)^2},$$

Fig. 2.21. Training set, output and confidence interval on the output, for a model with two hidden neurons
