Database Reference

In-Depth Information

3.1 Modeling

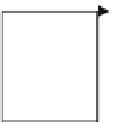

RL was based originally on methods of dynamic programming (DP, the mathemat-

ical theory of optimal control), albeit that in machine learning, the theories and

terminology have since been developed beyond DP. Central to this - as is usual in

AI - is the term

agent

. Figure

3.1

shows the interaction between agent and

environment in reinforcement learning.

The agent passes into a new

state (s

), for which it receives a

reward (r

)fromthe

environment, whereupon it decides on a new

action (a

) from the admissible

action set

for

s

(

A(s)

), by which in most cases it learns, and the environment responds in turn to

this action, etc. In such cases, we differentiate between

episodic tasks

, which come to

an end (as in a game), and

continuing tasks

without any end state (such as a service

robot which moves around indefinitely). The goal of the agent consists in selecting the

actions in each state so as to maximize the sum of all rewards over the entire episode.

The selection of the actions by the agent is referred to as its

policy

, and that policy

which results in maximizing the sum of all rewards is referred to as the

optimal policy

.

Example 3.1

As the first example for RL, we can consider a robot, which is

required to reach a destination as quickly as possible. The states are its coordinates,

the actions are the selection of the direction of travel, and the reward at every step

is

1. In order to maximize the sum of rewards over the entire episode, the robot

must achieve its goal in the fewest possible steps.

π

■

Example 3.2

A further example is chess once again, where the positions of the

pieces are the states, the moves are the actions, and the reward is always 0 except in

the final position, at which it is 1 for a win, 0 for a draw, and

1 for a loss (this is

what we call a

delayed reward

).

■

Example 3.3

A final example, to which we will dedicate more intensive study, is

recommendation engines. Here, for instance, the product detail views are the states,

the recommended products are the actions, and the purchases of the products are the

rewards.

■

Agent

reward

r

t

action

a

t

state

s

t

r

t

+1

Environment

s

t

+1

Fig. 3.1 The interaction between agent and environment in RL