Information Technology Reference


a. Mother chromosome: DTQaababaabbaabba2637547175086648 7682 (head and tail at positions 0-16, Dw at 17-32, Dt at 33-36)

$W_m$ = {-1.64, -1.834, -0.295, 1.205, -0.807, 0.856, 1.702, -1.026, -0.417, -1.061}

$T_m$ = {-1.14, 1.177, -1.179, -0.74, 0.393, 1.135, -0.625, 1.643, -0.029, -1.639}

b. Daughter chromosome: same head and tail (DTQaababaabbaabba), with its Dw domain altered by transposition; $W_d$ and $T_d$ hold the same values as $W_m$ and $T_m$.

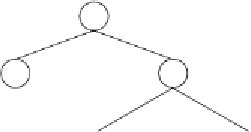

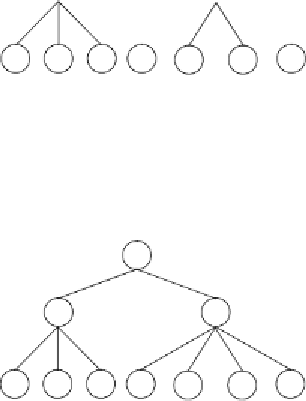

Figure 10.2. Illustration of Dw-specific transposition.

a) The mother neural network.

b) The daughter NN created by transposition. Note that the network architecture is the same for both mother and daughter and that $W_m = W_d$ and $T_m = T_d$. However, mother and daughter are different because different combinations of weights and thresholds are expressed in these individuals.
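The caption's point, that transposition changes which weights are expressed while the arrays W and T stay fixed, can be illustrated with a short sketch. It assumes that Dw-specific transposition behaves like GEP's IS transposition confined to Dw (a fragment is copied, inserted at a target site, and the surplus is cut from the end of Dw), and that connections and neurons read their Dw and Dt elements in order. The mother gene is reconstructed from the figure; the transposon position, its length, the target site and all function names are hypothetical.

```python
# Sketch of Dw-specific transposition on a GEP-net gene.
# Assumptions (not from the text): IS-like transposition confined to Dw;
# connections read Dw in order, neurons read Dt in order.
# The mother gene below is reconstructed from Figure 10.2.

W = [-1.64, -1.834, -0.295, 1.205, -0.807, 0.856, 1.702, -1.026, -0.417, -1.061]
T = [-1.14, 1.177, -1.179, -0.74, 0.393, 1.135, -0.625, 1.643, -0.029, -1.639]

ARITY = {"D": 2, "T": 3, "Q": 4}  # neurons; terminals a and b have arity 0
HT_LEN, DW_LEN = 17, 16           # head+tail = 0-16, Dw = 17-32, Dt = 33-36

mother = "DTQaababaabbaabba" + "2637547175086648" + "7682"


def split_gene(gene):
    """Return the (head+tail, Dw, Dt) domains of a gene."""
    return gene[:HT_LEN], gene[HT_LEN:HT_LEN + DW_LEN], gene[HT_LEN + DW_LEN:]


def orf_length(head_tail):
    """Length of the expressed part (open reading frame) of a K-expression."""
    need = 1
    for i, sym in enumerate(head_tail):
        need += ARITY.get(sym, 0) - 1
        if need == 0:
            return i + 1
    raise ValueError("incomplete K-expression")


def dw_transposition(gene, start, length, target):
    """Copy Dw[start:start+length] into Dw at `target`; downstream elements
    shift right and the surplus at the end of Dw is dropped."""
    ht, dw, dt = split_gene(gene)
    transposon = dw[start:start + length]
    new_dw = (dw[:target] + transposon + dw[target:])[:DW_LEN]
    return ht + new_dw + dt


def expressed(gene):
    """Weights (one per connection) and thresholds (one per neuron) in use."""
    ht, dw, dt = split_gene(gene)
    orf = ht[:orf_length(ht)]
    n_neurons = sum(sym in ARITY for sym in orf)
    n_connections = len(orf) - 1
    weights = [W[int(c)] for c in dw[:n_connections]]
    thresholds = [T[int(c)] for c in dt[:n_neurons]]
    return weights, thresholds


if __name__ == "__main__":
    # Hypothetical parameters: transposon = Dw positions 7-10, target site 2.
    daughter = dw_transposition(mother, start=7, length=4, target=2)
    print(split_gene(daughter)[1])  # 2617503754717508
    print(expressed(mother)[1])     # [1.643, -0.625, -0.029]
    print(expressed(mother)[0] != expressed(daughter)[0])  # True
```

Under these assumptions the mother's three neurons (D, T, Q) read thresholds 1.643, -0.625 and -0.029, the values visible in panel a, and the daughter differs from the mother only in its Dw string, yet it expresses a different sequence of weights drawn from the same W.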

Chromosome -[0] (two genes of 17 positions each):

Taabbbabb29139341QDbabbabb40396369-[0]

$W_{0,0}$ = {-0.78, -0.521, -1.224, 1.891, 0.554, 1.237, -0.444, 0.472, 1.012, 0.679}

$W_{0,1}$ = {-1.553, 1.425, -1.606, -0.487, 1.255, -0.253, -1.91, 1.427, -0.103, -1.625}

Chromosome -[1]:

QTababaab55227879QDbabbaaa36972318-[1]

$W_{1,0}$ = {-0.148, 1.83, -0.503, -1.786, 0.313, -0.302, 0.768, -0.947, 1.487, 0.075}

$W_{1,1}$ = {-0.256, -0.026, 1.874, 1.488, -0.8, -0.804, 0.039, -0.957, 0.462, 1.677}
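The two chromosomes above, each gene carrying its own weight array $W_{i,j}$, can be used to sketch how recombination changes the expressed weights without changing the arrays themselves. This is a minimal illustration under stated assumptions: genes are read as head 2, tail 7 and Dw 8 (17 positions), the two chromosomes are treated as parents, the crossover point 12 is hypothetical, and offspring i is assumed to keep the weight arrays of parent i unchanged. The helper names are hypothetical.

```python
# Sketch: one-point recombination between two-gene GEP-net chromosomes.
# Assumptions (not from the text): gene = head 2 + tail 7 + Dw 8; the
# chromosomes shown are treated as parents; crossover point is hypothetical;
# offspring i keeps parent i's weight arrays unchanged.

GENE_LEN, HT_LEN = 17, 9
ARITY = {"D": 2, "T": 3, "Q": 4}  # terminals a and b have arity 0

parent0 = "Taabbbabb29139341" + "QDbabbabb40396369"
parent1 = "QTababaab55227879" + "QDbabbaaa36972318"

# weights[i][j] is the array W_{i,j} of gene j in chromosome i.
weights = [
    [[-0.78, -0.521, -1.224, 1.891, 0.554, 1.237, -0.444, 0.472, 1.012, 0.679],
     [-1.553, 1.425, -1.606, -0.487, 1.255, -0.253, -1.91, 1.427, -0.103, -1.625]],
    [[-0.148, 1.83, -0.503, -1.786, 0.313, -0.302, 0.768, -0.947, 1.487, 0.075],
     [-0.256, -0.026, 1.874, 1.488, -0.8, -0.804, 0.039, -0.957, 0.462, 1.677]],
]


def one_point(p0, p1, point):
    """Swap everything downstream of `point` between two chromosomes."""
    return p0[:point] + p1[point:], p1[:point] + p0[point:]


def orf_length(head_tail):
    """Length of the expressed part of a K-expression."""
    need = 1
    for i, sym in enumerate(head_tail):
        need += ARITY.get(sym, 0) - 1
        if need == 0:
            return i + 1
    raise ValueError("incomplete K-expression")


def expressed_weights(chromosome, arrays):
    """For each gene, the weights used by its connections, read in Dw order."""
    result = []
    for j, array in enumerate(arrays):
        gene = chromosome[j * GENE_LEN:(j + 1) * GENE_LEN]
        ht, dw = gene[:HT_LEN], gene[HT_LEN:]
        n_connections = orf_length(ht) - 1
        result.append([array[int(c)] for c in dw[:n_connections]])
    return result


child0, child1 = one_point(parent0, parent1, point=12)
child_weights = [weights[0], weights[1]]  # arrays inherited unchanged

if __name__ == "__main__":
    print(expressed_weights(parent0, weights[0]))
    print(expressed_weights(child0, child_weights[0]))
```

In this sketch child0 has exactly the same weight arrays as parent0, but its second gene now comes from parent1, so its Dw indices select a different combination of values from $W_{0,1}$.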

Note that the weights of the offspring are exactly the same as the weights of

the parents. However, due to recombination, the weights expressed in the