Furthermore, special mutation operators were created that allow the permanent introduction of variation in the set of weights and thresholds.

It is worth emphasizing that the basic genetic operators, such as mutation, inversion, and IS and RIS transposition, are not affected by Dw or Dt as long as the boundaries of each region are maintained and the alphabet of each domain is used only within the confines of that domain. Note also that this mixing of alphabets is not a problem for the recombinational operators and, consequently, their port to GEP-nets is straightforward. However, these operators pose other problems that will be addressed in the next section.
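The constraint on mutation can be illustrated with a minimal sketch. It assumes a chromosome laid out as consecutive regions (here a head, a tail, and Dw; a Dt region would simply be a fourth entry), each with its own alphabet. The function name `mutate`, the region lengths, the digit alphabet for Dw, and the sample chromosome are all illustrative assumptions, not definitions taken from the text.

```python
import random

def mutate(chromosome, regions, rate, rng=random):
    """Point mutation that respects region boundaries.

    regions: list of (length, alphabet) pairs in chromosome order
             (hypothetical layout: head, tail, Dw).
    Each mutated position draws its new symbol only from the alphabet
    of the region that position belongs to.
    """
    assert len(chromosome) == sum(length for length, _ in regions)
    genes = list(chromosome)
    pos = 0
    for length, alphabet in regions:
        for i in range(pos, pos + length):
            if rng.random() < rate:
                genes[i] = rng.choice(alphabet)
        pos += length
    return "".join(genes)

# Illustrative layout (assumed): head of 3, tail of 4, Dw of 6.
regions = [
    (3, "Dab"),         # head: functions or terminals
    (4, "ab"),          # tail: terminals only
    (6, "0123456789"),  # Dw: weight indices (assumed digit alphabet)
]
child = mutate("DDDabab123456", regions, rate=0.3, rng=random.Random(0))
```

Because every replacement symbol is drawn from the alphabet of its own region, the offspring keeps the same region boundaries as the parent, and no weight symbol can leak into the head or tail.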

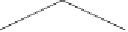

Consider, for instance, the conventionally represented neural network with two input units (i1 and i2), two hidden units (h1 and h2), and one output unit (o1) (for simplicity, the thresholds are all equal to one and are omitted):

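[Network diagram: output unit o1 is fed by hidden units h1 and h2 through connections 5 and 6; h1 is fed by input units i1 and i2 through connections 1 and 2, and h2 through connections 3 and 4.]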

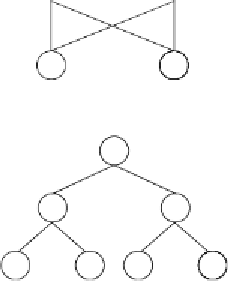

It can also be represented by a conventional tree:

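[Tree diagram: the root D is connected by branches 5 and 6 to two child D nodes; the left child is connected by branches 1 and 2 to terminals a and b, and the right child by branches 3 and 4 to terminals a and b.]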

where a and b represent, respectively, the two inputs i1 and i2, and “D” represents a function with connectivity two. This function multiplies the value of each argument by its respective weight and adds up all the incoming activation to determine the forwarded output. This output (zero or one) depends on the threshold: if the total incoming activation is equal to or greater than the threshold, the output is one; otherwise it is zero.
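The behaviour of “D” and of the whole tree can be sketched in a few lines. This is a minimal illustration, not the author's implementation: the function names `D` and `network` are chosen here for convenience, the connection labels 1 to 6 follow the tree above, and the weight values are hypothetical (picked so that the network computes exclusive-or with all thresholds equal to one).

```python
def D(inputs, weights, threshold=1.0):
    """Threshold unit: weight each input, sum the activation,
    and output 1 if the sum reaches the threshold, else 0."""
    activation = sum(x * w for x, w in zip(inputs, weights))
    return 1 if activation >= threshold else 0

def network(a, b, w):
    """Evaluate the tree: two hidden D units feeding one output D unit.

    w maps connection labels 1..6 to weight values.
    """
    h1 = D([a, b], [w[1], w[2]])      # left child: branches 1 and 2
    h2 = D([a, b], [w[3], w[4]])      # right child: branches 3 and 4
    return D([h1, h2], [w[5], w[6]])  # root: branches 5 and 6

# Hypothetical weight values (not from the text).
w = {1: 1.0, 2: -1.0, 3: -1.0, 4: 1.0, 5: 1.0, 6: 1.0}
outputs = [network(a, b, w) for a in (0, 1) for b in (0, 1)]
```

With these assumed weights, h1 fires only for a = 1, b = 0, h2 fires only for a = 0, b = 1, and the output unit fires when either hidden unit does.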

We could linearize the above NN-tree as follows:

0123456789012

DDDabab**123456**

where the structure in bold (Dw) encodes the weights. The values of each