Information Technology Reference

In-Depth Information

K

m

nk

m

k

N

z

nk

v

k

x

n

θ

k

y

n

classifiers

data

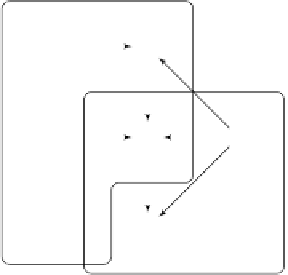

Fig. 4.3.

Directed graphical model of the generalised Mixtures-of-Experts model. See

the caption of Fig. 4.1 for instructions on how to read this graph. When compared to

the Mixtures-of-Expert model in Fig. 4.1, the latent variables

z

nk

depends additionally

on the matching random variables

m

nk

, whose values are determined by the mixing

functions

m

k

and the inputs

x

n

that is, the value of a classifier's matching function determines the probability

of that classifier matching a certain input.

To enforce matching, the probability for classifier

k

having generated obser-

vation (

x

,

y

), given by (4.4), is redefined to be

⎧

⎨

exp(

v

k

φ

(

x

))

if

m

k

=1for

x

,

p

(

z

k

=1

|

x

,

v

k

,m

k

)

∝

(4.20)

⎩

0

otherwise

,

where

φ

is a transfer function, whose purpose will be explained later and which

can for now be assumed to be the identity function,

φ

(

x

)=

x

.Thus,thediffe-

rences from the previous definition (4.4) are the additional transfer function and

the condition on

m

k

that locks the generation probability to 0 if the classifier

does not match the input. Removing the condition on

m

k

by marginalising it

out results in

g

k

(

x

)

≡

p

(

z

k

=1

|

x

,

v

k

)

∝

p

(

z

k

=1

|

x

,

v

k

,m

k

)

p

(

m

k

=

m

|

x

)

m∈{

0

,

1

}

=0+

p

(

z

k

=1

|

x

,

v

k

,m

k

)

p

(

m

k

=1

|

x

)

=

m

k

(

x

)exp(

v

k

φ

(

x

))

.

(4.21)

Adding the normalisation term, the gating network is now defined by

m

k

(

x

)exp(

v

k

φ

(

x

))

j

=1

m

j

(

x

)exp(

v

j

φ

(

x

))

g

k

(

x

)

≡

p

(

z

k

=1

|

x

,

v

k

)=

.

(4.22)

As can be seen when comparing it to (4.5), the additional layer of localisation is

specified by the matching function, which reduces the gating to

g

k

(

x

)=0ifthe

classifier does not match

x

,thatis,if

m

k

(

x

)=0.

Search WWH ::

Custom Search