Mixed Prediction and Prediction of Classifiers

Variational Bound and Number of Classifiers

1.5

-40

7

data

pred +/- 1sd

gen. fn.

cl. 1

cl. 2

L(q)

K

-60

6

1

-80

5

0.5

-100

4

0

-120

3

-140

-0.5

2

-160

-1

1

-180

-1.5

-200

0

-1

-0.5

0

0.5

1

0

1000

2000

3000

4000

5000

Input x

MCMC step

(a)

(b)

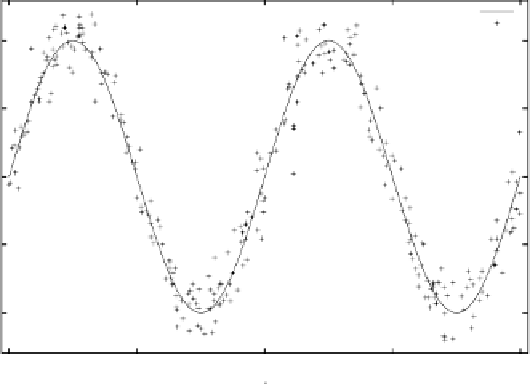

Fig. 8.12. Plots similar to the ones in Fig. 8.6, where MCMC model structure search was applied to a function with variable noise. The best discovered model structure is given by $l_1 = -0.98$, $u_1 = -0.06$ and $l_2 = 0.08$, $u_2 = 0.80$.
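The caption reports the discovered model structure as interval bounds for two classifiers. As a minimal sketch of what such bounds mean under soft interval matching, the Python code below evaluates how strongly each classifier matches a few inputs. The exact matching function is not given in this section, so `soft_interval_match` assumes a hypothetical form: a full match of 1 inside $[l_k, u_k]$ and an unnormalised Gaussian decay of width `sigma` outside it. The value `sigma = 0.05` is arbitrary and purely illustrative, not taken from the experiments.

```python
import math

# Interval bounds (l_k, u_k) of the best model structure in the Fig. 8.12 caption.
INTERVALS = [(-0.98, -0.06), (0.08, 0.80)]


def soft_interval_match(x, lower, upper, sigma):
    """Degree to which input x is matched by the interval [lower, upper].

    Hypothetical form (an assumption, not the book's definition):
    1.0 inside the interval, decaying as an unnormalised Gaussian with
    standard deviation sigma outside it.
    """
    if x < lower:
        return math.exp(-((x - lower) ** 2) / (2.0 * sigma ** 2))
    if x > upper:
        return math.exp(-((x - upper) ** 2) / (2.0 * sigma ** 2))
    return 1.0


if __name__ == "__main__":
    sigma = 0.05  # hypothetical decay width, chosen only for illustration
    for x in (-0.5, 0.0, 0.5, 0.9):
        degrees = [soft_interval_match(x, l, u, sigma) for l, u in INTERVALS]
        print(x, [round(d, 3) for d in degrees])
```

With these bounds, an input such as $x = 0$ lies in the gap between the two intervals, so under the assumed form it is matched only partially by both classifiers rather than fully by either.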

[Figure: Noisy Sinusoid and Available Data, plotted against Input x (legend: f(x) mean, data)]

Fig. 8.13. Plot showing the mean of the noisy sinusoidal function, and the 300 observations that are available from this function.
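The following is a minimal Python sketch of how a data set like the one in Fig. 8.13 could be generated: 300 inputs in $-1 \le x \le 1$ with targets $\sin(2\pi x)$ plus zero-mean Gaussian noise, as the text describes. Two details are assumptions not stated in this section: the inputs are drawn uniformly at random, and the 0.15 in $\mathcal{N}(0, 0.15)$ is read as a variance (drop the square root if it denotes a standard deviation). The names `f_mean` and `sample_noisy_sinusoid` are hypothetical.

```python
import math
import random

# Input range and sample count as stated in the text.
X_MIN, X_MAX = -1.0, 1.0
N_SAMPLES = 300
# Assumption: 0.15 is the noise variance of N(0, 0.15);
# random.gauss expects a standard deviation, hence the square root below.
NOISE_VARIANCE = 0.15


def f_mean(x):
    """Noise-free mean of the target function, sin(2*pi*x)."""
    return math.sin(2.0 * math.pi * x)


def sample_noisy_sinusoid(n=N_SAMPLES, seed=0):
    """Draw n (x, y) pairs with x uniform on [X_MIN, X_MAX] (assumed)
    and y = sin(2*pi*x) plus zero-mean Gaussian noise."""
    rng = random.Random(seed)
    noise_sd = math.sqrt(NOISE_VARIANCE)
    data = []
    for _ in range(n):
        x = rng.uniform(X_MIN, X_MAX)
        y = f_mean(x) + rng.gauss(0.0, noise_sd)
        data.append((x, y))
    return data


if __name__ == "__main__":
    data = sample_noisy_sinusoid()
    print(len(data), data[0])
```

Fixing `seed` makes the draw reproducible, which helps when comparing GA and MCMC search on the same data.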

more complex function. The function used is the noisy sinusoid given over the range $-1 \le x \le 1$ by $f(x) = \sin(2\pi x) + \mathcal{N}(0, 0.15)$, as shown in Fig. 8.13. Soft interval matching is again used to clearly specify the area of the input space that a classifier models. The data set is given by 300 samples from $f(x)$.

Both GA and MCMC search are initialised as before, with the number of classifiers sampled from $\mathrm{B}(8, 0.5)$. The GA search identified 7 classifiers with $\mathcal{L}(q) + \ln K! \approx -155.68$, as shown in Fig. 8.14. It is apparent that the model can be improved by reducing the number of classifiers to 5 and moving them to
