
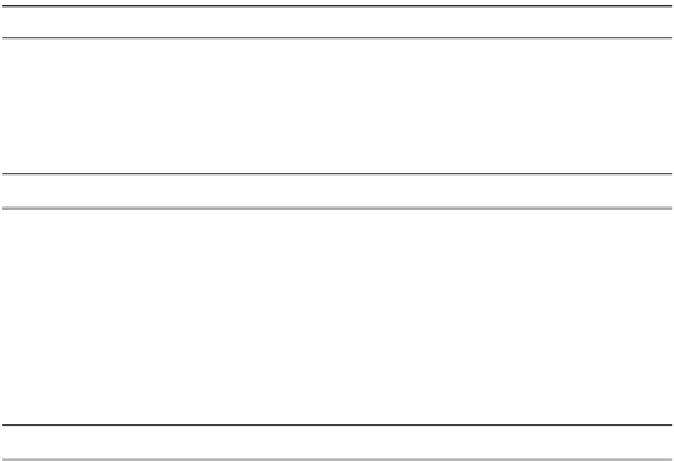

Table 7.1. Bayesian LCS model, with all its components. For more details on the model see Sect. 7.2.

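Data, Model Structure, and Likelihood

$N$ observations: $\{(\mathbf{x}_n, \mathbf{y}_n)\}$, $\mathbf{x}_n \in \mathcal{X} = \mathbb{R}^{D_X}$, $\mathbf{y}_n \in \mathcal{Y} = \mathbb{R}^{D_Y}$

Model structure: $\mathcal{M} = \{K, \mathbf{M}\}$, $k = 1, \dots, K$

$K$ classifiers

Matching functions: $\mathbf{M} = \{m_k : \mathcal{X} \to [0, 1]\}$

Likelihood: $p(\mathbf{Y} \mid \mathbf{X}, \mathbf{W}, \boldsymbol{\tau}, \mathbf{Z}) = \prod_{n=1}^{N} \prod_{k=1}^{K} p(\mathbf{y}_n \mid \mathbf{x}_n, \mathbf{W}_k, \tau_k)^{z_{nk}}$

Classifiers

Variables

Weight matrices: $\mathbf{W} = \{\mathbf{W}_k\}$, $\mathbf{W}_k \in \mathbb{R}^{D_Y} \times \mathbb{R}^{D_X}$

Noise precisions: $\boldsymbol{\tau} = \{\tau_k\}$

Weight shrinkage priors: $\boldsymbol{\alpha} = \{\alpha_k\}$

Noise precision prior parameters: $a_\tau, b_\tau$

$\alpha$-hyperprior parameters: $a_\alpha, b_\alpha$

Model

$p(\mathbf{y} \mid \mathbf{x}, \mathbf{W}_k, \tau_k) = \mathcal{N}(\mathbf{y} \mid \mathbf{W}_k \mathbf{x}, \tau_k^{-1} \mathbf{I}) = \prod_{j=1}^{D_Y} \mathcal{N}(y_j \mid \mathbf{w}_{kj}^T \mathbf{x}, \tau_k^{-1})$

Priors

$p(\mathbf{W}_k, \tau_k \mid \alpha_k) = \prod_{j=1}^{D_Y} \left( \mathcal{N}(\mathbf{w}_{kj} \mid \mathbf{0}, (\alpha_k \tau_k)^{-1} \mathbf{I}) \, \mathrm{Gam}(\tau_k \mid a_\tau, b_\tau) \right)$

$p(\alpha_k) = \mathrm{Gam}(\alpha_k \mid a_\alpha, b_\alpha)$

Mixing

Variables

Latent variables: $\mathbf{Z} = \{\mathbf{z}_n\}$, $\mathbf{z}_n = (z_{n1}, \dots, z_{nK})^T \in \{0, 1\}^K$, 1-of-$K$

Mixing weight vectors: $\mathbf{V} = \{\mathbf{v}_k\}$, $\mathbf{v}_k \in \mathbb{R}^{D_V}$

Mixing weight shrinkage priors: $\boldsymbol{\beta} = \{\beta_k\}$

$\beta$-hyperprior parameters: $a_\beta, b_\beta$

Model

$p(\mathbf{Z} \mid \mathbf{X}, \mathbf{V}, \mathbf{M}) = \prod_{n=1}^{N} \prod_{k=1}^{K} g_k(\mathbf{x}_n)^{z_{nk}}$

$g_k(\mathbf{x}) \equiv p(z_k = 1 \mid \mathbf{x}, \mathbf{v}_k, m_k) = \dfrac{m_k(\mathbf{x}) \exp(\mathbf{v}_k^T \boldsymbol{\phi}(\mathbf{x}))}{\sum_{j=1}^{K} m_j(\mathbf{x}) \exp(\mathbf{v}_j^T \boldsymbol{\phi}(\mathbf{x}))}$

Priors

$p(\mathbf{v}_k \mid \beta_k) = \mathcal{N}(\mathbf{v}_k \mid \mathbf{0}, \beta_k^{-1} \mathbf{I})$

$p(\beta_k) = \mathrm{Gam}(\beta_k \mid a_\beta, b_\beta)$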
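As an illustration of the mixing model in Table 7.1, the following minimal sketch evaluates the gating values $g_k(\mathbf{x})$ for a handful of classifiers. It is only a sketch under stated assumptions: the function name `gating`, the list-based representation of vectors, and the constant feature map `phi(x) = [1.0]` are hypothetical choices made for this example and are not taken from the text.

```python
import math


def gating(x, matching, mixing_weights, phi=lambda x: [1.0]):
    """Return [g_1(x), ..., g_K(x)] for the mixing model of Table 7.1.

    matching       -- list of K functions m_k(x), each returning a value in [0, 1]
    mixing_weights -- list of K mixing weight vectors v_k, each of length D_V
    phi            -- feature map used for mixing; the constant map is an
                      assumption made for this sketch
    """
    feats = phi(x)
    # Unnormalised terms m_k(x) * exp(v_k^T phi(x)), one per classifier.
    terms = []
    for m_k, v_k in zip(matching, mixing_weights):
        activation = sum(v * f for v, f in zip(v_k, feats))
        terms.append(m_k(x) * math.exp(activation))
    total = sum(terms)
    if total == 0.0:
        # The normalisation is undefined if no classifier matches x.
        raise ValueError("no classifier matches x")
    return [t / total for t in terms]


# Two hypothetical classifiers: the first matches only x[0] < 0.5,
# the second matches everywhere. Both have zero mixing weights.
matching = [lambda x: 1.0 if x[0] < 0.5 else 0.0, lambda x: 1.0]
weights = [[0.0], [0.0]]

g_both = gating([0.2], matching, weights)  # both match: [0.5, 0.5]
g_one = gating([0.8], matching, weights)   # only the second matches: [0.0, 1.0]
```

The example shows how matching restricts the softmax: a classifier with $m_k(\mathbf{x}) = 0$ receives no responsibility for $\mathbf{x}$, whatever its mixing weights are.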

7.2.1 Data, Model Structure, and Likelihood

To evaluate the evidence of a certain model structure $\mathcal{M}$, the data $\mathcal{D}$ and the model structure $\mathcal{M}$ need to be known. The data $\mathcal{D}$ consists of $N$ observations, each given by an input/output pair $(\mathbf{x}_n, \mathbf{y}_n)$. The input vector $\mathbf{x}_n$ is an element of the $D_X$-dimensional real input space $\mathcal{X} = \mathbb{R}^{D_X}$, and the output vector $\mathbf{y}_n$ is an element of the $D_Y$-dimensional real output space $\mathcal{Y} = \mathbb{R}^{D_Y}$. Hence, $\mathbf{x}_n$ has $D_X$ elements, and $\mathbf{y}_n$ has $D_Y$ elements. The input matrix $\mathbf{X}$ and output matrix $\mathbf{Y}$ are defined according to (3.4).

The data is assumed to be standardised by a linear transformation such that all $\mathbf{x}$ and $\mathbf{y}$ have mean $\mathbf{0}$ and a range of 1. The purpose of this standardisation is the same as the one given by Chipman, George and McCulloch [62], which is to make it easier to intuitively gauge parameter values. For example, with the data being standardised, a weight value of 2 can be considered large, as a half-range increase in $\mathbf{x}$ would result in a full-range increase in $\mathbf{y}$.
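The standardisation and the weight example can be sketched for a single input or output dimension as follows. This is a minimal sketch, not the book's procedure: it assumes that "range" means the maximum minus the minimum of the observed values, and the helper name `standardise` and the sample values are hypothetical.

```python
def standardise(values):
    """Linearly map one data dimension to mean 0 and range (max - min) 1."""
    span = max(values) - min(values)
    if span == 0:
        # A constant dimension has no range that could be scaled to 1.
        raise ValueError("constant data has no range to scale")
    mean = sum(values) / len(values)
    # Subtracting the mean centres the data; dividing by the span fixes the range.
    return [(v - mean) / span for v in values]


xs = standardise([2.0, 4.0, 6.0, 10.0])
mean_after = sum(xs) / len(xs)   # 0.0
range_after = max(xs) - min(xs)  # 1.0

# With standardised data, a weight of 2 turns a half-range step in x
# into a full-range step in y.
weight = 2.0
delta_x = 0.5 * range_after
delta_y = weight * delta_x       # 1.0, the full range of y
```

Because the transformation is linear, a model fitted to the standardised data can be mapped back to the original scale by inverting the shift and the scaling.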
