Information Technology Reference

In-Depth Information

)=

θ

p

(

D|M

p

(

D|

θ

,

M

)

p

(

θ

|M

)d

θ

,

(7.2)

where

p

(

D|

θ

,

M

) is the data likelihood for a given model structure

M

,and

p

(

θ

) are the parameter priors given the same model structure. Thus, in order

to perform Bayesian model selection, one needs to have a prior over the model

structure space

|M

, a prior over the parameters given a model structure, and

an ecient way of computing the model evidence (7.2).

As expected from a good model selection method, an implicit property of

Bayesian model selection is that it penalises overly complex models [159]. This

can be intuitively explained as follows: probability distributions that are more

widely spread generally have lower peaks as the area underneath their density

function is always 1. While simple model structures only have a limited capability

of expressing data sets, more complex model structures are able to express a

wider range of different data sets. Thus, their prior distribution will be more

widely spread. As a consequence, conditioning a simple model structure on some

data that it can express will cause its distribution to have a larger peak than a

more complex model structure than is also able to express this data. This shows

that, in cases where a simple model structure is able to explain the same data

as a more complex model structure, Bayesian model selection will prefer the

simpler model structure.

{M}

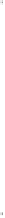

Example 7.1 (Bayesian Model Selection Applied to Polynomials).

As in Exam-

ple 3.1, consider a set of 100 observation from the 2nd degree polynomial

f

(

x

)=

1

/

3

x/

2+

x

2

with additive Gaussian noise

(0

,

0

.

1

2

) over the range

x

[0

,

1].

Assuming ignorance of the data-generating process, the acquired model is a po-

lynomial of unknown degree

d

. As was shown in Example 3.1, minimising the

empirical risk leads to overfitting, as increasing the degree of the polynomial and

with it the model complexity reduces this risk. Minimising the expected risk, on

the other hands leads to correctly identifying the “true” model, but this risk is

−

N

∈

0.02

75

Empirical Risk

Expected Risk

L(q)

70

0.015

65

0.01

60

0.005

55

0

50

0

1

2

3

4

5

6

7

8

9

10

0

1

2

3

4

5

6

7

8

9

10

Degree of Polynomial

Degree of Polynomial

(a)

(b)

Fig. 7.1.

Expected and empirical risk, and the variational bound of the fit of polyno-

mials of various degree to 100 noisy observations of a 2nd-order polynomial. (a) shows

how the expected and empirical risk change with the degree of the polynomial. (b)

shows the same for the variational bound. More information is given in Example 7.1.

Search WWH ::

Custom Search