
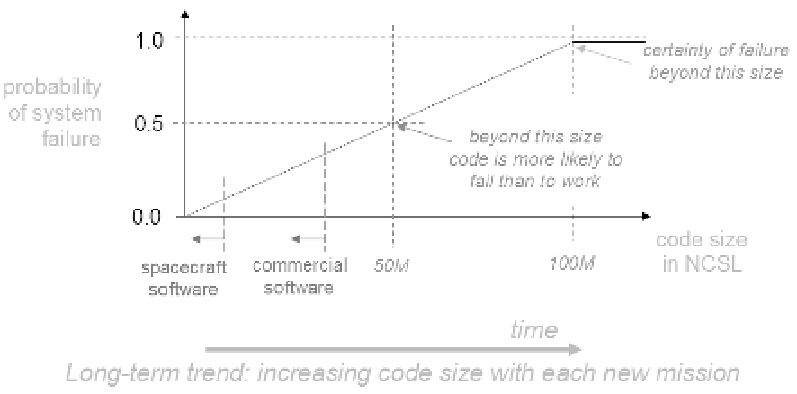

to the quality measures employed, which will most likely cause a “mission failure”, according to Fig. 6. In other words, given a certain set of quality assurance measures and the bug density they can achieve, it does not make sense to start a mission once the code size has exceeded that particular limit, simply because mission failure would be almost certain.

NASA usually applies this reasoning to a single spacecraft per mission, which represents a very high material value and involves, at most, very few human lives.
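The size-limit reasoning above can be sketched numerically. The sketch below is a simplified, hypothetical model, not the method of the NASA study [12]: it assumes that a given set of quality assurance measures yields a fixed residual defect density (defects per 1000 LOC) and that a mission tolerates only a fixed expected number of residual critical defects. Both numbers (`density`, `tolerated`) and the function names are illustrative placeholders.

```python
# Hypothetical model: for a fixed residual defect density (set by the QA
# measures applied), expected residual defects grow linearly with code size.


def expected_residual_defects(loc: int, defects_per_kloc: float) -> float:
    """Expected number of residual defects in a code base of `loc` lines."""
    return loc / 1000.0 * defects_per_kloc


def max_code_size(defects_per_kloc: float, tolerated_defects: float) -> int:
    """Largest code size (in LOC) whose expected residual defects stay
    within `tolerated_defects`; beyond it, failure becomes ever more likely."""
    return int(tolerated_defects / defects_per_kloc * 1000)


if __name__ == "__main__":
    density = 0.5    # hypothetical: 0.5 residual critical defects per KLOC
    tolerated = 1.0  # hypothetical: at most one expected critical defect
    limit = max_code_size(density, tolerated)
    print(limit)  # 2000
    # Code bases below, at and far above the limit
    for size in (1_000, limit, 20_000):
        print(size, expected_residual_defects(size, density))
```

Under this linear model, better quality assurance (a lower `density`) raises the limit proportionally, while the same measures applied to a larger code base push the expected defect count past what a mission can tolerate.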

Fig. 6. Graph taken from a NASA “Study on Flight Software Complexity” [12], suggesting a reasonable limit for software size by determining the level that results in “certainty of failure beyond this size” (NCSL: Non-Commentary Source Lines = LOC as used in this paper).

Large railway operators, by contrast, may run several hundred trains at the same time, each representing the same level of material value (but carrying hundreds of passengers). Assuming that a mission-critical failure in software of a particular size shows up once in 1000 years, a space mission lasting 1 year would have a probability of about 0.1% of failing due to software (uniform distribution assumed). A railway operator running 1000 trains at the same time with the same size and quality of software on board, however, may face such a “mission failure” event about once a year, which makes drastically clear that code size matters.
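The arithmetic behind this comparison can be reproduced in a few lines. The sketch takes the rate of one mission-critical failure per 1000 operating years from the text; modelling the “uniform distribution” as a Poisson process with a constant rate is an added assumption, under which the single-mission probability is $1 - e^{-\lambda t} \approx \lambda t$ for small $\lambda t$, and the expected number of events for a fleet of $N$ systems is $N \lambda t$. The function names are hypothetical.

```python
import math

# From the text: one mission-critical software failure per 1000 operating
# years for a given code size. Assumption: failures occur at a constant rate.
FAILURE_RATE_PER_YEAR = 1 / 1000


def mission_failure_probability(rate: float, years: float) -> float:
    """Probability of at least one failure for a single system over `years`,
    assuming failures arrive as a Poisson process with constant `rate`."""
    return 1.0 - math.exp(-rate * years)


def expected_fleet_failures(rate: float, units: int, years: float) -> float:
    """Expected number of failure events across `units` identical systems
    operating simultaneously for `years`."""
    return rate * units * years


if __name__ == "__main__":
    # Single spacecraft, 1-year mission: close to the 0.1% quoted in the text
    p_space = mission_failure_probability(FAILURE_RATE_PER_YEAR, years=1.0)
    print(f"single spacecraft, 1 year: {p_space:.4%}")
    # 1000 trains carrying the same software, 1 year of operation
    n_rail = expected_fleet_failures(FAILURE_RATE_PER_YEAR, units=1000, years=1.0)
    print(f"1000 trains, 1 year: {n_rail:.1f} expected events")
```

The per-unit failure rate is identical in both cases; only the number of units exposed to it differs, which is why the same software quality that is acceptable for one spacecraft yields roughly one critical event per year across a large train fleet.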

The third point, however, is very difficult to implement in conventional “closed source” software projects, simply because highly qualified review resources are always very limited in commercial projects. Errors are therefore often diagnosed at a late stage in the process, and their removal is expensive and time-consuming. That is why big software projects often fail due to schedule overruns and costs spiraling out of control, and are often even abandoned altogether.