
Algorithm 7.11 Decision tree generating algorithm GSD

Input: Training set

Output: Decision tree, rule set

1. Select the splitting criterion CR from Gain, Gain Ratio and ASF;

2. If all data items belong to one class, the decision tree is a leaf labeled with that class;

3. Otherwise, use the optimal test attribute under CR to divide the data items into subsets;

4. Recursively apply steps 2 and 3 to generate a decision tree for each subset;

5. Generate the decision tree and transform it into a rule set.
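The five steps above can be sketched in Python as follows. This is a minimal illustration under stated assumptions, not the book's implementation: attributes are categorical and stored as dicts, only the Gain and Gain Ratio criteria are implemented (in the familiar ID3/C4.5 form, because ASF is not defined in this section), and helper names such as `build_tree` and `to_rules` are hypothetical. Returning a majority-class leaf when no attributes remain is also an added assumption.

```python
import math
from collections import Counter

def entropy(labels):
    """Shannon entropy (in bits) of a list of class labels."""
    n = len(labels)
    return -sum((c / n) * math.log2(c / n) for c in Counter(labels).values())

def partition(rows, labels, attr):
    """Group rows and their labels by the value of a categorical attribute."""
    groups = {}
    for row, y in zip(rows, labels):
        sub_rows, sub_labels = groups.setdefault(row[attr], ([], []))
        sub_rows.append(row)
        sub_labels.append(y)
    return groups

def gain(rows, labels, attr):
    """Information gain obtained by splitting on attr."""
    n = len(labels)
    remainder = sum(len(ys) / n * entropy(ys)
                    for _, ys in partition(rows, labels, attr).values())
    return entropy(labels) - remainder

def gain_ratio(rows, labels, attr):
    """Information gain divided by the split information of attr."""
    n = len(labels)
    sizes = [len(ys) for _, ys in partition(rows, labels, attr).values()]
    split_info = -sum((s / n) * math.log2(s / n) for s in sizes)
    return gain(rows, labels, attr) / split_info if split_info > 0 else 0.0

# Step 1: the caller selects the criterion CR (ASF is not implemented here).
CRITERIA = {"Gain": gain, "GainRatio": gain_ratio}

def build_tree(rows, labels, attrs, cr):
    """Steps 2-4: grow the tree recursively; leaves are class labels."""
    if len(set(labels)) == 1:            # Step 2: pure node -> leaf
        return labels[0]
    if not attrs:                        # assumption: majority-class leaf
        return Counter(labels).most_common(1)[0][0]
    # Step 3: the optimal test attribute maximizes CR.
    best = max(attrs, key=lambda a: CRITERIA[cr](rows, labels, a))
    remaining = [a for a in attrs if a != best]
    # Step 4: recurse on each subset produced by the split.
    return {best: {value: build_tree(sub_rows, sub_labels, remaining, cr)
                   for value, (sub_rows, sub_labels)
                   in partition(rows, labels, best).items()}}

def to_rules(tree, conditions=()):
    """Step 5: each root-to-leaf path becomes (conditions, class)."""
    if not isinstance(tree, dict):
        return [(list(conditions), tree)]
    (attr, branches), = tree.items()
    rules = []
    for value, subtree in branches.items():
        rules += to_rules(subtree, conditions + ((attr, value),))
    return rules

# Toy usage with hypothetical data.
rows = [{"outlook": "sunny", "windy": "no"}, {"outlook": "sunny", "windy": "yes"},
        {"outlook": "rain", "windy": "no"}, {"outlook": "rain", "windy": "yes"}]
labels = ["play", "stay", "play", "stay"]
tree = build_tree(rows, labels, ["outlook", "windy"], "Gain")
for conds, cls in to_rules(tree):
    print("IF", " AND ".join(f"{a} = {v}" for a, v in conds), "THEN", cls)
```

On this toy data `windy` separates the two classes perfectly, so both Gain and Gain Ratio choose it at the root, and the rule set contains one rule per branch.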

7.8.9 Experimental results

The BSDT algorithm was tested on the datasets listed in Table 7.3.

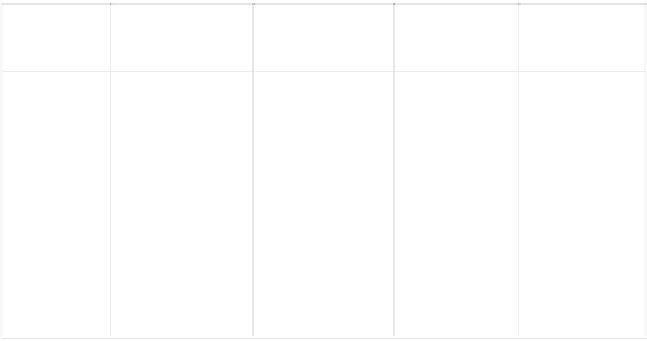

Table 7.3 Experimental datasets

| Dataset | #Training examples | #Attributes | #Classes | #Test examples |
|---|---|---|---|---|
| anneal | 898 | 38 | 5 | - |
| breast-cancer | 699 | 10 | 2 | - |
| credit | 490 | 15 | 2 | 200 |
| genetics | 3,190 | 60 | 3 | - |
| glass | 214 | 9 | 6 | - |
| heart | 1,395 | 16 | 2 | - |
| hypo | 2,514 | 29 | 5 | 1,258 |
| letter | 15,000 | 16 | 26 | 5,000 |
| sonar | 208 | 60 | 2 | - |
| soybean | 683 | 35 | 19 | - |
| voting | 300 | 16 | 2 | 135 |
| diabetes | 768 | 8 | 2 | - |

Some of the datasets come from the UCI machine learning database (ftp://ics.uci.edu/pub/machine-learning-database). The following procedure is used for testing.

For a dataset DS without a given test set (such as anneal, breast-cancer, etc.), DS is randomly divided into a test set TS and a learning set LS, with |TS| = 10% × |DS|.
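The random hold-out split described above might look like this in Python. The function name `split_dataset`, its `seed` parameter, and rounding 10% of |DS| to the nearest integer are assumptions for illustration; the text does not say how fractional test-set sizes are handled.

```python
import random

def split_dataset(ds, test_fraction=0.10, seed=None):
    """Randomly divide DS into a test set TS and a learning set LS.

    |TS| is test_fraction * |DS| rounded to the nearest integer (an assumption).
    """
    rng = random.Random(seed)          # seeded generator for reproducibility
    shuffled = list(ds)
    rng.shuffle(shuffled)
    n_test = round(len(shuffled) * test_fraction)
    return shuffled[:n_test], shuffled[n_test:]   # (TS, LS)

# Example: anneal has 898 examples and no given test set.
ts, ls = split_dataset(range(898), seed=0)
print(len(ts), len(ls))
```

For anneal this yields 90 test examples and 808 learning examples; every example lands in exactly one of the two sets.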