
$$
H(X/\text{Humid}) = \frac{12}{24}\left(-\frac{4}{12}\log\frac{4}{12}-\frac{8}{12}\log\frac{8}{12}\right)+\frac{12}{24}\left(-\frac{4}{12}\log\frac{4}{12}-\frac{8}{12}\log\frac{8}{12}\right) = 0.9183
$$
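The arithmetic above can be checked with a short Python sketch. It assumes base-2 logarithms, which is what makes the result come out to 0.9183. For each value of the attribute, it takes the counts of the two classes as read off the formula: two subsets of 12 examples, each split 4 to 8. The helper names `entropy` and `conditional_entropy` are illustrative, not taken from the text.

```python
from math import log2

def entropy(counts):
    """Entropy in bits of a class distribution given as raw counts."""
    total = sum(counts)
    return -sum(c / total * log2(c / total) for c in counts if c > 0)

def conditional_entropy(subsets):
    """H(X/A): entropy of each subset of A, weighted by the subset's share of the examples.

    `subsets` holds one list of class counts per value of attribute A.
    """
    n = sum(sum(s) for s in subsets)
    return sum(sum(s) * entropy(s) for s in subsets) / n

# Humid splits the 24 examples into two subsets of 12; each subset has 4 examples
# of one class and 8 of the other.
h_humid = conditional_entropy([[4, 8], [8, 4]])
print(f"H(X/Humid) = {h_humid:.4f}")  # H(X/Humid) = 0.9183
```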

If Windy is selected as the test attribute, then:

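$$
H(X/\text{Windy}) = \frac{8}{24}\left(-\frac{4}{8}\log\frac{4}{8}-\frac{4}{8}\log\frac{4}{8}\right)+\frac{6}{24}\left(-\frac{3}{6}\log\frac{3}{6}-\frac{3}{6}\log\frac{3}{6}\right)+\frac{10}{24}\left(-\frac{5}{10}\log\frac{5}{10}-\frac{5}{10}\log\frac{5}{10}\right) = 1
$$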
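The result is exactly 1 because every Windy subset is split evenly between the two classes. Taking logarithms to base 2, each bracket is the entropy of a 50/50 split, which is 1 bit, so only the subset weights remain:

$$
-\tfrac{1}{2}\log_2\tfrac{1}{2}-\tfrac{1}{2}\log_2\tfrac{1}{2}=1
\quad\Longrightarrow\quad
H(X/\text{Windy})=\frac{8}{24}\cdot 1+\frac{6}{24}\cdot 1+\frac{10}{24}\cdot 1=\frac{24}{24}=1
$$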

We can see that $H(X/\text{Outlook})$ is the smallest, which means that information about Outlook helps classification the most and provides the largest amount of information, i.e. $I(X, \text{Outlook})$ is the largest. Therefore, Outlook should be selected as the test attribute. We can also see that $H(X) = H(X/\text{Windy})$, i.e. $I(X, \text{Windy}) = 0$: information about Windy provides no information about the classification.
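The selection rule can be sketched in Python. It computes $I(X, A) = H(X) - H(X/A)$ for each candidate attribute and keeps the largest. Only the two attributes whose class counts appear in this section, Humid and Windy, are included. Outlook's per-value counts come from the earlier part of the example and are not repeated here, so Outlook is left out rather than guessed. Within each Humid subset, the split of 4 and 8 between the classes is inferred from $H(X) = 1$, which means 12 P and 12 N overall. The helper names are illustrative assumptions.

```python
from math import log2

def entropy(counts):
    """Entropy in bits of a class distribution given as raw counts."""
    total = sum(counts)
    return -sum(c / total * log2(c / total) for c in counts if c > 0)

def information_gain(subsets):
    """I(X, A) = H(X) - H(X/A); `subsets` holds class counts [P, N] per value of A."""
    totals = [sum(col) for col in zip(*subsets)]   # class counts over the whole set
    n = sum(totals)
    h_cond = sum(sum(s) * entropy(s) for s in subsets) / n
    return entropy(totals) - h_cond

# Class counts per attribute value, from the two calculations in this section.
candidates = {
    "Humid": [[4, 8], [8, 4]],
    "Windy": [[4, 4], [3, 3], [5, 5]],
}
gains = {name: information_gain(s) for name, s in candidates.items()}
for name, g in gains.items():
    print(f"I(X, {name}) = {g:.4f}")
# I(X, Humid) = 0.0817
# I(X, Windy) = 0.0000
print("test attribute:", max(gains, key=gains.get))  # test attribute: Humid
```

To reproduce the full comparison from the text, add Outlook's per-value counts to `candidates`. The attribute with the smallest $H(X/A)$ is then the one with the largest gain.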

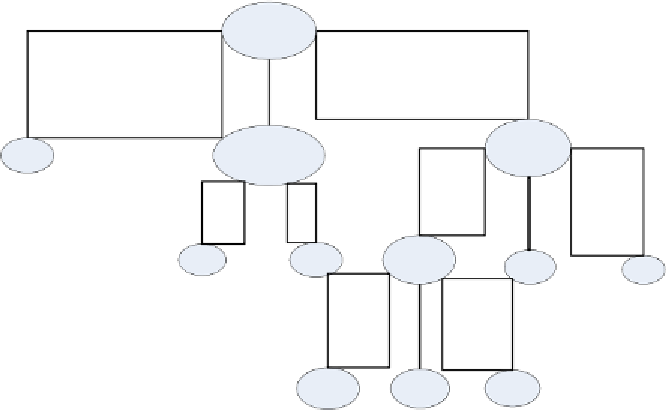

After Outlook is selected as the test attribute, the training example set is divided into three subsets, generating three leaf nodes. Applying the above procedure to each leaf node in turn, we obtain the decision tree shown in Figure 7.7.
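The procedure can be sketched recursively in Python. It picks the attribute with the smallest $H(X/A)$, splits the examples on it, and repeats on each subset until the subset is pure. This is an illustration, not the book's code. The six rows are invented for the example and are not Table 7.2. Ties are broken by list order. A subset with no attributes left is labeled with its majority class, which is an assumption the text does not state.

```python
from collections import Counter
from math import log2

def entropy(labels):
    """Entropy in bits of a list of class labels such as 'P'/'N'."""
    n = len(labels)
    return -sum(c / n * log2(c / n) for c in Counter(labels).values())

def conditional_entropy(rows, labels, attr):
    """H(X/attr): label entropy inside each value of attr, weighted by subset size."""
    h = 0.0
    for value in set(r[attr] for r in rows):
        subset = [y for r, y in zip(rows, labels) if r[attr] == value]
        h += len(subset) / len(rows) * entropy(subset)
    return h

def id3(rows, labels, attrs):
    """Return a class label (leaf) or a node (attr, {value: subtree})."""
    if len(set(labels)) == 1:          # all examples in one class: leaf
        return labels[0]
    if not attrs:                      # assumption: majority class when no tests remain
        return Counter(labels).most_common(1)[0][0]
    # Smallest H(X/A) is the same choice as largest I(X, A) = H(X) - H(X/A).
    best = min(attrs, key=lambda a: conditional_entropy(rows, labels, a))
    rest = [a for a in attrs if a != best]
    branches = {}
    for value in sorted(set(r[best] for r in rows)):
        idx = [i for i, r in enumerate(rows) if r[best] == value]
        branches[value] = id3([rows[i] for i in idx], [labels[i] for i in idx], rest)
    return (best, branches)

# Hypothetical toy examples, invented for illustration (not Table 7.2).
rows = [
    {"Outlook": "Sunny", "Windy": "Not"},
    {"Outlook": "Sunny", "Windy": "Very"},
    {"Outlook": "Overcast", "Windy": "Not"},
    {"Outlook": "Overcast", "Windy": "Very"},
    {"Outlook": "Rain", "Windy": "Not"},
    {"Outlook": "Rain", "Windy": "Very"},
]
labels = ["N", "N", "P", "P", "P", "N"]
tree = id3(rows, labels, ["Outlook", "Windy"])
print(tree)
# ('Outlook', {'Overcast': 'P', 'Rain': ('Windy', {'Not': 'P', 'Very': 'N'}), 'Sunny': 'N'})
```

On these toy rows, Outlook becomes the root because $H(X/\text{Outlook}) = 1/3$, which is smaller than $H(X/\text{Windy}) \approx 0.918$. Only the Rain subset is mixed, so it alone is split further.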


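[Figure: decision tree with root node Outlook (branches Rain, Sunny, Overcast); further test nodes Windy, Humidity and Temp; branch values Very, Medium, Not, High, Normal, Hot, Mild, Cool; leaves labeled P or N]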

Figure 7.7. Decision tree generated from the training set in Table 7.2

ID3-family algorithms are widely applied; the most famous of them is C4.5 (Quinlan, 1993). A new feature of C4.5 is that it can transform a decision tree into equivalent