Information Technology Reference

In-Depth Information

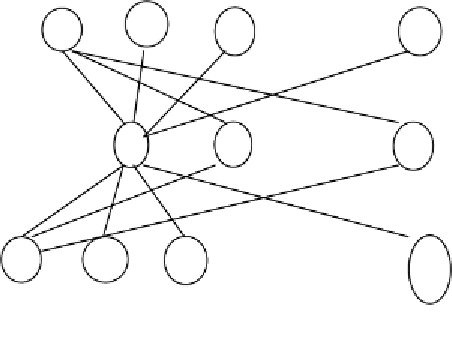

themes. Accordingly, we propose the Bayesian latent semantic model for document generation.

Let the document set be $D = \{d_1, d_2, \ldots, d_n\}$ and the word set be $W = \{w_1, w_2, \ldots, w_m\}$. The generation model for a document $d \in D$ can be expressed as follows:

(1) Choose a document $d$ with probability $p(d)$;

(2) Choose a latent theme $z$ according to its prior $p(z \mid \theta)$;

(3) Denote by $p(z \mid d, \theta)$ the probability that document $d$ belongs to theme $z$;

(4) Denote by $p(w \mid z, \theta)$ the probability of word $w \in W$ under theme $z$.

After the above process, we obtain the observed pair $(d, w)$. Omitting (summing out) the latent theme $z$ yields the joint probability model:

$$p(d, w) = p(d)\, p(w \mid d) \qquad (6.44)$$

$$p(w \mid d) = \sum_{z \in Z} p(w \mid z, \theta)\, p(z \mid d, \theta) \qquad (6.45)$$

where $Z$ denotes the set of latent themes.
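To make formulas (6.44) and (6.45) concrete, the following is a minimal sketch that evaluates them on a toy model with two documents, two themes and three words. All probability values and the helper names `word_given_doc` and `joint` are hypothetical, chosen only for illustration; the sketch assumes the parameters $\theta$ are already known rather than estimated.

```python
"""Sketch: evaluate formulas (6.44) and (6.45) on a toy model.

All numbers below are hypothetical, chosen only for illustration.
"""

# p(d): prior over documents d_1, d_2
p_d = [0.4, 0.6]

# p(z | d, theta): rows are documents, columns are themes z_1, z_2
p_z_given_d = [
    [0.7, 0.3],
    [0.2, 0.8],
]

# p(w | z, theta): rows are themes, columns are words w_1, w_2, w_3
p_w_given_z = [
    [0.5, 0.4, 0.1],
    [0.1, 0.3, 0.6],
]


def word_given_doc(d):
    """Formula (6.45): p(w|d) = sum over z of p(w|z,theta) * p(z|d,theta)."""
    n_themes = len(p_w_given_z)
    n_words = len(p_w_given_z[0])
    return [
        sum(p_z_given_d[d][z] * p_w_given_z[z][w] for z in range(n_themes))
        for w in range(n_words)
    ]


def joint(d, w):
    """Formula (6.44): p(d, w) = p(d) * p(w|d)."""
    return p_d[d] * word_given_doc(d)[w]


if __name__ == "__main__":
    for d in range(len(p_d)):
        print(f"p(w|d_{d + 1}) =", [round(x, 3) for x in word_given_doc(d)])
    total = sum(joint(d, w) for d in range(len(p_d)) for w in range(3))
    print("sum of p(d, w) over all pairs:", round(total, 6))
```

Because each row of `p_z_given_d` and `p_w_given_z` sums to 1, every $p(w \mid d)$ is itself a proper distribution over words, and the joint $p(d, w)$ sums to 1 over all pairs.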

The model defined by formulas (6.44) and (6.45) is a mixture probabilistic model under the following independence assumptions:

(1) The observed pairs $(d, w)$ are generated independently of one another; they are related only through the latent themes.

(2) The generation of word $w$ is independent of any particular document $d$; it depends only on the latent theme variable $z$.
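The generative steps and the two independence assumptions can be sketched as a sampler. This is a minimal illustration under stated assumptions: the toy parameters and the helper names `sample_pair` and `empirical_joint` are hypothetical, and the sketch draws the theme from $p(z \mid d, \theta)$, which is how formula (6.45) couples documents and themes, so the theme prior $p(z \mid \theta)$ of step (2) is not used separately here.

```python
"""Sketch: draw observed (d, w) pairs following the generative steps.

Hypothetical toy parameters. The theme is drawn from p(z | d, theta);
the word draw uses only the sampled theme z (independence assumption 2).
"""
import random

p_d = [0.4, 0.6]                        # p(d)
p_z_given_d = [[0.7, 0.3], [0.2, 0.8]]  # p(z | d, theta)
p_w_given_z = [[0.5, 0.4, 0.1],         # p(w | z, theta)
               [0.1, 0.3, 0.6]]


def sample_pair(rng):
    """Return one observed pair (d, w); the latent theme z is discarded."""
    d = rng.choices(range(len(p_d)), weights=p_d)[0]
    z = rng.choices(range(len(p_z_given_d[d])), weights=p_z_given_d[d])[0]
    # The word depends only on z, not on d (assumption 2).
    w = rng.choices(range(len(p_w_given_z[z])), weights=p_w_given_z[z])[0]
    return d, w


def empirical_joint(n_samples, seed=0):
    """Estimate p(d, w) by counting pairs drawn independently of one
    another (assumption 1)."""
    rng = random.Random(seed)
    counts = {}
    for _ in range(n_samples):
        pair = sample_pair(rng)
        counts[pair] = counts.get(pair, 0) + 1
    return {pair: c / n_samples for pair, c in counts.items()}
```

With enough samples, for example `empirical_joint(100000)`, the observed frequencies should approach $p(d)\,p(w \mid d)$ from formulas (6.44) and (6.45); with these toy values the pair $(d_1, w_1)$ should occur with frequency near $0.4 \times 0.38 = 0.152$.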

Formula (6.45) indicates that, within a given document $d$, the distribution of word $w$ is a convex combination of the latent themes' word distributions $p(w \mid z, \theta)$. The weight of each theme in the combination is the probability $p(z \mid d, \theta)$ that document $d$ belongs to that theme. Figure 6.3 illustrates the relationships between the factors in the model.

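[Figure 6.3. Bayesian latent semantic model: a layer of document nodes $d_1, d_2, d_3, \ldots, d_n$, a layer of latent theme nodes $z_1, z_2, \ldots, z_k$, and a layer of word nodes $w_1, w_2, w_3, \ldots, w_m$.]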
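As a small worked illustration of the convex combination in formula (6.45), assume hypothetical values for a document $d$ with two themes: $p(z_1 \mid d, \theta) = 0.6$, $p(z_2 \mid d, \theta) = 0.4$, $p(w \mid z_1, \theta) = 0.05$ and $p(w \mid z_2, \theta) = 0.20$. Then

$$p(w \mid d) = 0.6 \times 0.05 + 0.4 \times 0.20 = 0.03 + 0.08 = 0.11,$$

which, because the weights are non-negative and sum to 1, necessarily lies between the two theme-specific probabilities 0.05 and 0.20.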

Applying Bayes' formula and substituting formula (6.45) into formula (6.44), we get: