- spatio-temporal partitioning, that is, the extraction of meaningful objects from video in order to detect events of interest related to objects or to perform object-based queries on video databases [14].

In the first case, the key feature to be extracted from video is the shot boundary, which is considered a “mid-level” semantic feature compared with high-level concepts that call for a human-like interpretation of video content. A shot is a sequence of frames corresponding to one take of the camera. A vast literature has been devoted to the problem of shot boundary detection. It was the subject of the TRECVid competition [15] in 2001-2007, and various industrial solutions have come from this intensive research. Shot boundaries are the most natural metadata that can be generated automatically, and they allow sequential navigation in a coded stream. Scene boundaries correspond to content changes with a more semantic interpretation: they delimit groups of consecutive shots that convey the same editing ideas. In the framework of scalable indexing of HD video, we wish to analyze a new trend for efficient video services: embedded indexing in Scalable Video Coding. This is precisely one of the focuses of the JPSearch initiative [16]: embedding metadata into data encoded in the JPEG2000 standard. Hence the latest research works link content encoding and indexing in the same framework, be it for images or for videos [3].
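To make the notion of a shot boundary concrete, the following minimal sketch declares a cut wherever the intensity histograms of two consecutive frames differ strongly. This is a generic textbook baseline, not the method of [3] or of any particular TRECVid system; the function names, the number of bins and the threshold value are assumptions chosen for illustration only.

```python
import numpy as np

def gray_histogram(frame, bins=32):
    """Normalized intensity histogram of one grayscale frame (values 0..255)."""
    hist, _ = np.histogram(frame, bins=bins, range=(0, 256))
    return hist / hist.sum()

def detect_shot_boundaries(frames, threshold=0.5):
    """Return indices k where a cut is declared between frame k-1 and frame k.

    threshold is a hypothetical value applied to the L1 distance between
    consecutive normalized histograms (this distance lies in [0, 2]).
    """
    boundaries = []
    prev = gray_histogram(frames[0])
    for k in range(1, len(frames)):
        cur = gray_histogram(frames[k])
        if np.abs(cur - prev).sum() > threshold:
            boundaries.append(k)
        prev = cur
    return boundaries

# Synthetic test sequence: 5 dark frames followed by 5 bright frames.
rng = np.random.default_rng(0)
dark = [rng.integers(0, 60, size=(48, 64)) for _ in range(5)]
bright = [rng.integers(180, 256, size=(48, 64)) for _ in range(5)]
cuts = detect_shot_boundaries(dark + bright)
print(cuts)
```

On this synthetic sequence the only abrupt change in content lies between the fifth and sixth frames. Gradual transitions such as fades or dissolves would need more than a single frame-to-frame threshold.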

2.2.1 Embedded Mid Level Indexing in a Scalable Video Coding

The coding scheme [3], inspired by the JPEG2000 scalable architecture, allows joint encoding of a video stream and its temporal indexing, such as shot boundary information and key-pictures at various quality levels. The proposed scalable code-stream architecture consists of a sequence of Groups of Pictures (GOPs), each corresponding to a video shot and containing its own source model, which at the same time serves as a content descriptor.
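One way to picture this organization is as a container in which each GOP carries both its coded pictures and its source model. The sketch below is only an illustrative in-memory layout under that reading; all class and field names are hypothetical and do not reflect the actual code-stream syntax of [3].

```python
from dataclasses import dataclass, field
from typing import List
import numpy as np

@dataclass
class KeyPicture:
    frame_index: int              # position of the key-picture in the video
    quality_layers: List[bytes]   # hypothetical: one payload per quality level

@dataclass
class ShotGOP:
    start_frame: int              # first frame of the shot, i.e. a shot boundary
    codebook: np.ndarray          # source model, reused as content descriptor
    key_pictures: List[KeyPicture] = field(default_factory=list)

@dataclass
class ScalableStream:
    gops: List[ShotGOP] = field(default_factory=list)

    def shot_boundaries(self) -> List[int]:
        """Temporal index: read directly from the GOP structure."""
        return [g.start_frame for g in self.gops]

    def descriptors(self) -> List[np.ndarray]:
        """Content descriptors: the per-shot source models."""
        return [g.codebook for g in self.gops]

# Two shots with placeholder code-books and empty payloads.
stream = ScalableStream(gops=[
    ShotGOP(0, np.zeros((16, 16)), [KeyPicture(0, [b"", b""])]),
    ShotGOP(120, np.ones((16, 16)), [KeyPicture(120, [b"", b""])]),
])
print(stream.shot_boundaries())
```

The point of the layout is that the index needs no separate metadata track: shot boundaries and descriptors are read from the same structure that holds the coded pictures.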

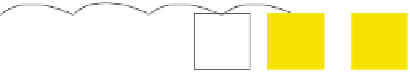

The video stream architecture is depicted in Figure 4. The authors propose using Vector Quantization (VQ) to build a visual code-book $VC$ for the first key-picture of each shot. Here the encoding follows the traditional VQ coding scheme: the key-picture is split into blocks that form vectors in the description space. An accelerated K-means algorithm is then applied, resulting in a $VC$ of pre-defined dimension. The key-picture is then encoded with this code-book, and the vector quantization error is encoded using a JPEG2000 encoder. For all following key-pictures, the code-book obtained is applied for their encoding. If the coding distortion for a given key-picture $I_{ij}$ in the shot $S_i$ encoded with $VC_i$ is higher than a pre-defined

Fig. 4 Temporal decomposition of a video stream (figure labels: key-picture, B0 picture, B1 picture, GOP i-1, GOP i, GOP n)
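The key-picture coding steps described in this section (block splitting, code-book training, quantization, residual) can be sketched as follows. This is a minimal illustration under stated assumptions, not the implementation of [3]: it uses plain K-means iterations instead of the accelerated variant, a 4 x 4 block size and a 16-word code-book chosen arbitrarily, and it leaves the residual unencoded where the source passes it to a JPEG2000 encoder. All function names are hypothetical.

```python
import numpy as np

def image_to_blocks(img, b=4):
    """Split a grayscale image into non-overlapping b x b blocks, one vector per block."""
    h, w = img.shape
    h, w = h - h % b, w - w % b            # crop to a multiple of the block size
    blocks = img[:h, :w].reshape(h // b, b, w // b, b).swapaxes(1, 2)
    return blocks.reshape(-1, b * b).astype(np.float64)

def blocks_to_image(vectors, shape, b=4):
    """Inverse of image_to_blocks for the cropped image area."""
    h, w = shape[0] - shape[0] % b, shape[1] - shape[1] % b
    return vectors.reshape(h // b, w // b, b, b).swapaxes(1, 2).reshape(h, w)

def nearest_codeword(vectors, codebook):
    """Index of the closest code-word (squared Euclidean distance) for each vector."""
    d = ((vectors[:, None, :] - codebook[None, :, :]) ** 2).sum(axis=2)
    return d.argmin(axis=1)

def train_codebook(vectors, size=16, iters=10, seed=0):
    """Plain K-means iterations (the source uses an accelerated variant)."""
    rng = np.random.default_rng(seed)
    codebook = vectors[rng.choice(len(vectors), size=size, replace=False)].copy()
    for _ in range(iters):
        labels = nearest_codeword(vectors, codebook)
        for k in range(size):
            members = vectors[labels == k]
            if len(members):               # keep the old code-word if its cell is empty
                codebook[k] = members.mean(axis=0)
    return codebook

def vq_encode(img, codebook, b=4):
    """Quantize a key-picture; return indices, residual image and mean squared error."""
    vectors = image_to_blocks(img, b)
    indices = nearest_codeword(vectors, codebook)
    approx = blocks_to_image(codebook[indices], img.shape, b)
    residual = img[:approx.shape[0], :approx.shape[1]] - approx
    distortion = float((residual ** 2).mean())
    return indices, residual, distortion

# Train on a synthetic "first key-picture" and encode it with its own code-book.
rng = np.random.default_rng(1)
key0 = rng.integers(0, 256, size=(32, 32)).astype(np.float64)
vc = train_codebook(image_to_blocks(key0))
indices, residual, mse = vq_encode(key0, vc)
print(indices.shape, vc.shape)
```

Encoding a later key-picture of the same shot amounts to calling `vq_encode` with the same `vc`; the returned distortion is the kind of quantity the text compares against a pre-defined value.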