
1.25

OJA

1.2

1.15

OJAn

LUO

1.1

MCA EXIN

1.05

OJA

+

1

0

2000

4000 6000

Iterations

8000

10000

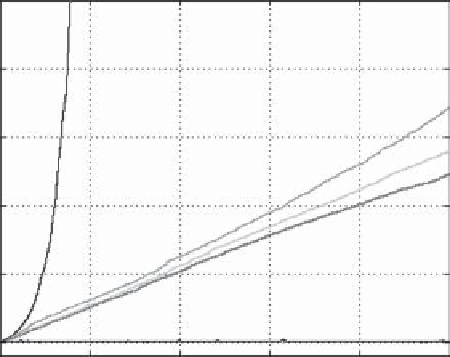

Figure 2.7 Divergences (represented by the evolution of the squared weight modulus) of the MCA linear neurons with initial conditions equal to the solution. (See insert for color representation of the figure.)

corresponding to $\lambda_n = 0.1235 < 1$. Figure 2.7 [181] shows the different divergences of $\|\mathbf{w}(t)\|_2^2$: OJA yields the worst result (sudden divergence), MCA EXIN has the slowest divergence, and OJA+ converges. Figure 2.8 shows the deviation of LUO from the MC direction; the effect of the orthogonal weight increments and the onset of the sudden divergence are apparent. Figure 2.9 shows how closely the ODE (2.106) approximates the LUO stochastic law and how accurately eq. (2.111) estimates $t_\infty$.
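The effect of the orthogonal weight increments mentioned above can be made explicit with a one-line Pythagorean argument. This sketch assumes the LUO law in the form usually quoted in the MCA literature (first line of the block, with $y = \mathbf{w}^T\mathbf{x}$); that form is an assumption here, not quoted from this section, and the derivation is for the discrete stochastic law rather than the ODE (2.106).

```latex
\begin{aligned}
\Delta\mathbf{w}(t) &= -\alpha(t)\,\|\mathbf{w}(t)\|_2^2\, y(t)\left[\mathbf{x}(t) - \frac{y(t)}{\|\mathbf{w}(t)\|_2^2}\,\mathbf{w}(t)\right],
\qquad y(t) = \mathbf{w}^T(t)\,\mathbf{x}(t) \\
\mathbf{w}^T(t)\,\Delta\mathbf{w}(t) &= -\alpha(t)\,\|\mathbf{w}(t)\|_2^2\, y(t)\left[y(t) - \frac{y(t)}{\|\mathbf{w}(t)\|_2^2}\,\|\mathbf{w}(t)\|_2^2\right] = 0 \\
\|\mathbf{w}(t+1)\|_2^2 &= \|\mathbf{w}(t)\|_2^2 + 2\,\mathbf{w}^T(t)\,\Delta\mathbf{w}(t) + \|\Delta\mathbf{w}(t)\|_2^2
= \|\mathbf{w}(t)\|_2^2 + \|\Delta\mathbf{w}(t)\|_2^2 \;\ge\; \|\mathbf{w}(t)\|_2^2
\end{aligned}
```

The same cancellation holds for any rule whose increment is a scalar multiple of $\mathbf{x} - y\mathbf{w}/\|\mathbf{w}\|_2^2$ (under the same kind of assumption, OJAn and MCA EXIN), so along the stochastic law the squared modulus can only grow; the rules differ in how fast $\|\Delta\mathbf{w}\|_2^2$ grows with $\|\mathbf{w}\|_2^2$.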

2.6.2.5 Conclusions

Except for FENG, whose limitations are very serious and will be analyzed later, all the other MCA neurons diverge, and some of them are plagued by sudden divergence.

Proposition 69 (MCA Divergence)

LUO and OJA diverge at a finite time (sudden divergence). OJA+ behaves like OJA for noisy input data. OJAn and MCA EXIN diverge at infinite time, but the former at a faster rate.
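To see the qualitative picture of Proposition 69 and Figure 2.7, the Python sketch below starts each neuron exactly on the MC solution and tracks $\|\mathbf{w}(t)\|_2^2$. It is a minimal illustration under stated assumptions, not the experiment behind Figure 2.7: the five learning laws are transcribed in the forms commonly quoted in the MCA literature (not from this section), the input is zero-mean Gaussian with a hypothetical diagonal covariance whose smallest eigenvalue is 0.1235, and the helpers `dot`, `step`, `simulate`, the learning rate `alpha`, the iteration count `iters`, and the blow-up threshold `cap` are illustrative choices.

```python
import math
import random


def dot(a, b):
    """Inner product of two equal-length lists."""
    return sum(ai * bi for ai, bi in zip(a, b))


def step(rule, w, x, alpha):
    """One stochastic update w(t) -> w(t+1) for the named MCA rule.

    ASSUMED textbook forms (hypothetical transcription, not quoted from the
    source); y = w^T x is the linear neuron output and p = ||w||^2.
    """
    y = dot(w, x)
    p = dot(w, w)
    if rule == "OJA":
        return [wi - alpha * y * (xi - y * wi) for wi, xi in zip(w, x)]
    if rule == "OJAn":
        return [wi - alpha * y * (xi - y * wi / p) for wi, xi in zip(w, x)]
    if rule == "LUO":
        return [wi - alpha * p * y * (xi - y * wi / p) for wi, xi in zip(w, x)]
    if rule == "MCA EXIN":
        return [wi - alpha * (y / p) * (xi - y * wi / p) for wi, xi in zip(w, x)]
    if rule == "OJA+":
        return [wi - alpha * (y * xi - (y * y + 1.0 - p) * wi) for wi, xi in zip(w, x)]
    raise ValueError(f"unknown rule: {rule}")


def simulate(rule, eigvals, alpha=0.02, iters=2000, seed=0, cap=1e6):
    """Track ||w(t)||^2 when w(0) is the MC solution (unit minor eigenvector)."""
    rng = random.Random(seed)
    n = len(eigvals)
    # eigvals are listed so that the last one is lambda_n, the smallest.
    w = [0.0] * (n - 1) + [1.0]
    history = [dot(w, w)]
    for _ in range(iters):
        # Zero-mean Gaussian input with covariance diag(eigvals).
        x = [rng.gauss(0.0, math.sqrt(lam)) for lam in eigvals]
        w = step(rule, w, x, alpha)
        history.append(dot(w, w))
        if not math.isfinite(history[-1]) or history[-1] > cap:
            break  # the modulus has blown up; stop tracking this rule
    return history


if __name__ == "__main__":
    eigvals = [1.0, 0.5, 0.1235]  # hypothetical spectrum with lambda_n = 0.1235
    for rule in ["OJA", "OJAn", "LUO", "MCA EXIN", "OJA+"]:
        h = simulate(rule, eigvals)
        print(f"{rule:9s} steps={len(h) - 1:5d}  final ||w||^2 = {h[-1]:.4g}")
```

A printed `steps` value below `iters` means the trajectory crossed `cap` within the run, i.e. a finite-time blow-up; comparing the rules at the same seed gives only a rough analogue of the ordering in Figure 2.7, since the step size, spectrum, and run length are not those of the original experiment.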

Proposition 70 (Noisy Input Data)

Noisy input data (i.e., high $\lambda_n$) worsen the behavior of the neurons. LUO and OJA diverge sooner; the rate of divergence of OJAn [which depends exponentially on $\lambda_n$; see eq. (2.107)] increases more than that of MCA EXIN [which depends on $\sqrt{\lambda_n}$; see eq. (2.112)]. OJA+ behaves like OJA and then diverges at finite time.
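The contrast between OJAn and MCA EXIN in Proposition 70 can be probed numerically: run both from the MC solution on clean data and on a hypothetical noisy version in which white noise of unit variance is added to the input (which raises every eigenvalue by 1, so $\lambda_n$ goes from 0.1235 to 1.1235), then compare $\|\mathbf{w}\|_2^2$ after a fixed number of steps. This is a minimal sketch: the two laws are assumed forms (OJAn scales the orthogonal residual $\mathbf{x} - y\mathbf{w}/\|\mathbf{w}\|_2^2$ by $y$, MCA EXIN by $y/\|\mathbf{w}\|_2^2$), and `growth`, `alpha`, `iters`, the spectrum, and the noise level are hypothetical choices, not the settings behind eqs. (2.107) and (2.112).

```python
import math
import random


def dot(a, b):
    """Inner product of two equal-length lists."""
    return sum(ai * bi for ai, bi in zip(a, b))


def growth(rule, eigvals, alpha=0.02, iters=2000, seed=0):
    """Return ||w||^2 after `iters` steps, starting from the unit MC eigenvector."""
    rng = random.Random(seed)
    w = [0.0] * (len(eigvals) - 1) + [1.0]  # last eigenvalue is lambda_n
    for _ in range(iters):
        x = [rng.gauss(0.0, math.sqrt(lam)) for lam in eigvals]
        y = dot(w, x)
        p = dot(w, w)
        # Residual of x orthogonal to w: r = x - (y / ||w||^2) w, so w^T r = 0.
        r = [xi - y * wi / p for wi, xi in zip(w, x)]
        # ASSUMED forms: OJAn scales r by y, MCA EXIN by y / ||w||^2.
        if rule == "OJAn":
            gain = y
        elif rule == "MCA EXIN":
            gain = y / p
        else:
            raise ValueError(f"unknown rule: {rule}")
        w = [wi - alpha * gain * ri for wi, ri in zip(w, r)]
    return dot(w, w)


if __name__ == "__main__":
    clean = [1.0, 0.5, 0.1235]
    # Hypothetical noisy case: unit-variance white noise lifts every eigenvalue by 1.
    noisy = [lam + 1.0 for lam in clean]
    for rule in ["OJAn", "MCA EXIN"]:
        print(rule, round(growth(rule, clean), 3), round(growth(rule, noisy), 3))
```

Because both increments are orthogonal to $\mathbf{w}$ under these assumed forms, each step adds $\alpha^2\,\text{gain}^2\,\|\mathbf{r}\|_2^2$ to the squared modulus. With gain $y$ this addition is proportional to $\|\mathbf{w}\|_2^2$ itself (exponential growth), while with gain $y/\|\mathbf{w}\|_2^2$ it shrinks as the modulus grows, which is the qualitative gap the proposition describes.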

In the next section we analyze the problems caused by the divergence.
