
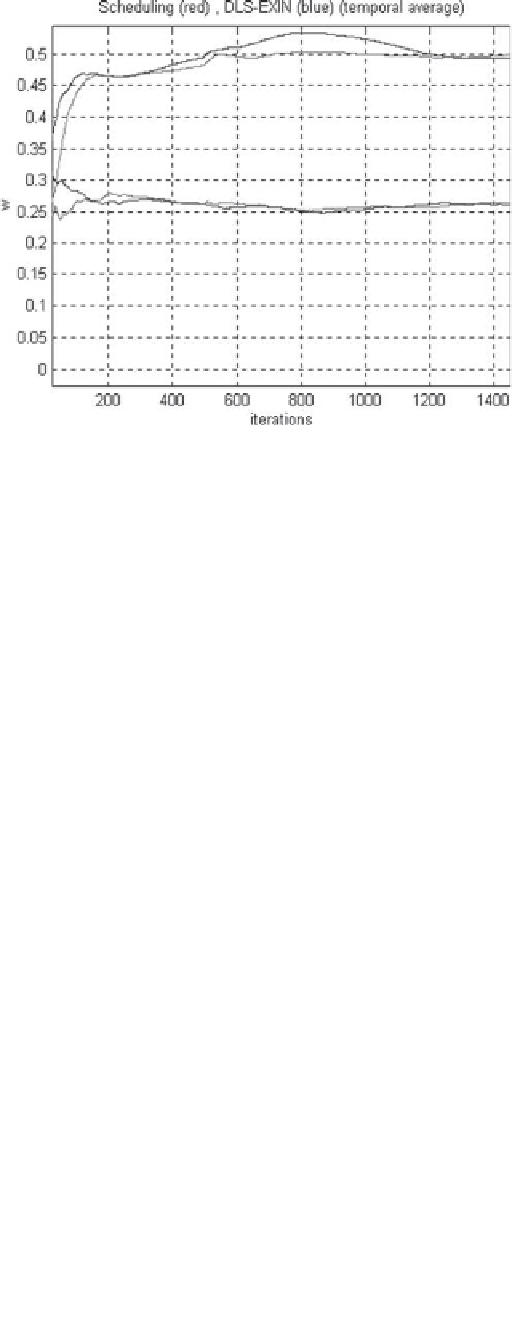

Figure 5.21

Line fitting without preprocessing for a noise variance of 0.5: transient analysis. The values are averaged using a temporal mask with width equal to the number of iterations, up to a maximum of 500. (See insert for color representation of the figure.)

(i.e., the Rayleigh quotient of $A^T A$). Recall that the neuron is fed with the rows of the matrix $A$ and that the autocorrelation matrix $R$ of the input data is given by $R = A^T A / m$. It follows that the DLS neural problem is equivalent to an MCA neural problem with the same inputs. This equivalence holds only for DLS EXIN and MCA EXIN, because both neurons use the exact error gradient in their learning laws.
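The equivalence can be checked numerically. The sketch below assumes that the DLS cost has the form $E(x) = \frac{1}{2}\sum_i (a_i^T x - b_i)^2 / (x^T x)$. This explicit form, the helper names `dls_cost` and `rayleigh_quotient`, and the random data are assumptions for illustration and are not taken from this section. With a null target, the cost reduces to $m/2$ times the Rayleigh quotient of $R = A^T A / m$, so both problems have the same minimizing direction.

```python
import numpy as np

def dls_cost(x, A, b):
    # Assumed DLS cost: (1/2) * sum_i (a_i^T x - b_i)^2 / (x^T x)
    r = A @ x - b
    return 0.5 * (r @ r) / (x @ x)

def rayleigh_quotient(x, R):
    # r(x, R) = x^T R x / x^T x
    return (x @ R @ x) / (x @ x)

rng = np.random.default_rng(0)
m, n = 200, 3
A = rng.normal(size=(m, n))   # hypothetical data: each row is one input to the neuron
R = A.T @ A / m               # sample autocorrelation matrix of the inputs
x = rng.normal(size=n)

lhs = dls_cost(x, A, np.zeros(m))        # DLS cost with null target b = 0
rhs = 0.5 * m * rayleigh_quotient(x, R)  # scaled Rayleigh quotient (MCA cost)
print(np.isclose(lhs, rhs))

# With b = 0 the cost is invariant to rescaling x, as any Rayleigh quotient is.
print(np.isclose(dls_cost(3.0 * x, A, np.zeros(m)), lhs))
```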

Proposition 126 (DLS-MCA Equivalence)

The DLS EXIN neuron fed by the rows of a matrix $A$ and with null target is equivalent to the MCA EXIN neuron with the same input. Both neurons find the minor component of $A^T A$ (i.e., the right singular vector associated with the smallest singular value of $A$). The drawback of the equivalence is that, unlike MCA EXIN, which always converges, DLS EXIN is not guaranteed to converge.
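The following NumPy check on random data illustrates the second statement of the proposition. The matrix size and seed are arbitrary choices. The eigenvector of $A^T A$ with the smallest eigenvalue and the right singular vector of $A$ for its smallest singular value agree up to sign. The smallest eigenvalue also equals the square of the smallest singular value.

```python
import numpy as np

rng = np.random.default_rng(1)
A = rng.normal(size=(100, 4))   # hypothetical data matrix

# Minor component: eigenvector of A^T A with the smallest eigenvalue.
# np.linalg.eigh returns eigenvalues in ascending order.
evals, evecs = np.linalg.eigh(A.T @ A)
minor = evecs[:, 0]

# Right singular vector for the smallest singular value of A.
# np.linalg.svd returns singular values in descending order, so it is the last row of Vt.
_, s, Vt = np.linalg.svd(A, full_matrices=False)
v_min = Vt[-1]

# Eigenvectors and singular vectors are only defined up to sign.
print(np.isclose(abs(minor @ v_min), 1.0), np.isclose(evals[0], s[-1] ** 2))
```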

This equivalence is also easily proved by taking the DLS EXIN learning law and the corresponding ODE [(5.10) and (5.12) for $\zeta = 0$] and setting $b = 0$. This yields the MCA EXIN learning law and the corresponding ODE [eqs. (2.35) and (2.33)].
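The substitution $b = 0$ can be sketched in code. Both update rules below are assumptions. Each is written as the stochastic gradient of a cost: the assumed DLS cost for the first, and the Rayleigh quotient for the second, in the form $w \leftarrow w - \alpha \frac{y}{w^T w}\left(a - \frac{y}{w^T w} w\right)$ with $y = w^T a$. Neither is copied from eqs. (5.10) or (2.35). The function names, learning rate, and data are hypothetical. The script first checks that the two steps coincide for a null target, then feeds the rows of $A$ to the shared rule and compares the result with the SVD minor vector.

```python
import numpy as np

def dls_exin_step(x, a, b, alpha):
    # Assumed DLS EXIN step: stochastic gradient of (1/2)(a^T x - b)^2 / (x^T x)
    xx = x @ x
    delta = a @ x - b  # residual for this row
    return x - alpha * (delta / xx) * (a - (delta / xx) * x)

def mca_exin_step(w, a, alpha):
    # Assumed MCA EXIN step for a linear neuron with output y = w^T a
    ww = w @ w
    y = w @ a
    return w - alpha * (y / ww) * (a - (y / ww) * w)

rng = np.random.default_rng(2)
# Hypothetical rows of A with well-separated column scales, so the minor component is distinct.
A = rng.normal(size=(500, 3)) * np.array([3.0, 2.0, 0.5])
alpha = 0.002

# 1) With a null target, one DLS EXIN step equals one MCA EXIN step.
w0 = rng.normal(size=3)
same_step = np.allclose(dls_exin_step(w0, A[0], 0.0, alpha), mca_exin_step(w0, A[0], alpha))

# 2) Feed the rows of A (shuffled each epoch) to the shared rule with b = 0.
w = w0 / np.linalg.norm(w0)
for _ in range(20):
    for i in rng.permutation(len(A)):
        w = dls_exin_step(w, A[i], 0.0, alpha)

v_min = np.linalg.svd(A, full_matrices=False)[2][-1]
alignment = abs(w @ v_min) / np.linalg.norm(w)  # |cos| of the angle; the sign is arbitrary
print(same_step, alignment > 0.99)
```

This run uses a small constant learning rate and well-separated column scales. It illustrates the equivalence on one benign example and does not bear on the convergence caveat stated in Proposition 126.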

As a consequence of the equivalence, the DLS scheduling EXIN can be used to improve the MCA EXIN. When it is used for this purpose, it is called MCA EXIN+.

Definition 127 (MCA EXIN+)

The MCA EXIN+ is a linear neuron with a DLS scheduling EXIN learning law, the same inputs as the MCA EXIN, and a null target.
