Information Technology Reference

In-Depth Information

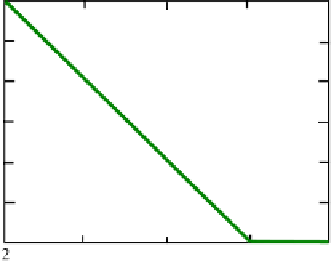

Fig. 3.7

Hinge loss for

Ranking SVM

and the corresponding ground truth label

y

(i)

u,v

, the mathematical formulation of

Ranking SVM is as shown below, where a linear scoring function is used, i.e.,

f(x)

=

w

T

x

,

n

min

1

2

ξ

(i)

2

w

+

λ

u,v

i

=

1

y

(i)

u,v

u,v

:

=

1

w

T

x

(i)

u

x

(i

v

≥

ξ

(i)

u,v

,

if

y

(i)

s.t.

−

1

−

u,v

=

1

,

(3.17)

ξ

(i)

u,v

≥

0

,i

=

1

,...,n.

As we can see, the objective function in Ranking SVM is very similar to that in

SVM, where the term

2

controls the complexity of the model

w

. The differ-

ence between Ranking SVM and SVM lies in the constraints, which are constructed

from document pairs. The loss function in Ranking SVM is a hinge loss defined on

document pairs. For example, for a training query

q

, if document

x

u

is labeled as

being more relevant than document

x

v

(mathematically,

y

u,v

=

1

2

w

1), then if

w

T

x

u

is

larger than

w

T

x

v

by a margin of 1, there is no loss. Otherwise, the loss will be

ξ

u,v

.

Such a hinge loss is an upper bound of the pairwise 0-1 loss. The curve of the hinge

loss is plotted in Fig.

3.7

.

Since Ranking SVM is well rooted in the framework of SVM, it inherits nice

properties of SVM. For example, with the help of margin maximization, Rank-

ing SVM can have a good generalization ability. Kernel tricks can also be applied

to Ranking SVM, so as to handle complex non-linear problems. Furthermore, re-

cently, several fast implementations of Ranking SVM have also been developed,

such as [

11

] and [

24

]. The corresponding tools have also been available online.

3

3

See

http://olivier.chapelle.cc/primal/

and

http://www.cs.cornell.edu/People/tj/svm_light/svm_

rank.html

.