

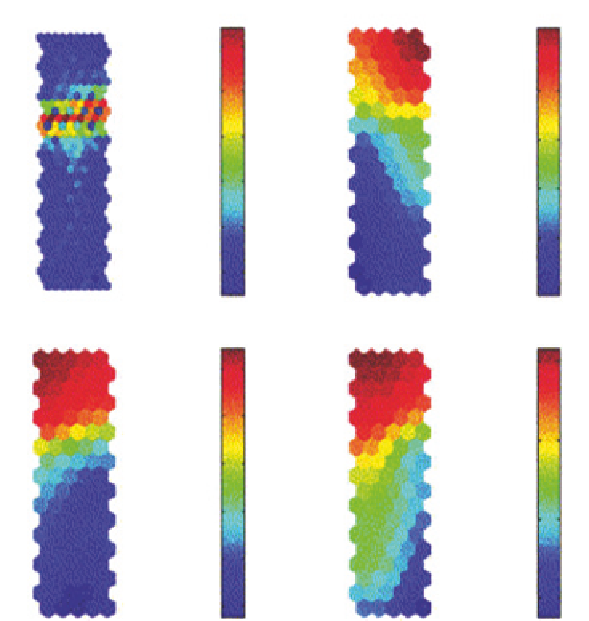

U-matrix and SOMs of three-dimensional data set

(reprinted and adapted from Rantanen et al., 2001;

with permission from Elsevier)

Figure 5.15

In this way, the SOM is partially organized from the beginning, so narrower neighborhood functions and smaller learning-rate factors can be selected (Kohonen, 1998). The SOM algorithm is therefore sometimes referred to as a nonlinear adaptation of PCA (Ritter, 1995). The time it takes to build the SOM, that is, the time until convergence occurs, depends on the data being analyzed and the parameters selected for SOM construction. Most software programs available for applying SOMs offer automatic selection of training parameters.
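The PCA-based initialization and the subsequent training described above can be sketched in Python with NumPy. This is a minimal illustration under stated assumptions, not Kohonen's reference implementation or the API of any SOM package: the function names (`pca_init`, `train_som`, `quantization_error`) are hypothetical, the neighborhood is assumed to be Gaussian on a rectangular lattice, and the linear decay schedules and parameter values (`lr0=0.05`, `sigma0=1.5`) are illustrative, hand-set choices. Linear initialization spreads the map over the plane of the first two principal components, so training starts from an already partially ordered map.

```python
import numpy as np


def pca_init(data, rows, cols):
    """Linear (PCA-based) initialization: lay the grid out on the plane
    spanned by the first two principal components, scaled by their std devs."""
    mean = data.mean(axis=0)
    # Rows of vt are the principal directions of the centered data.
    _, s, vt = np.linalg.svd(data - mean, full_matrices=False)
    std = s[:2] / np.sqrt(len(data) - 1)  # std dev along PC1 and PC2
    a = np.linspace(-1.0, 1.0, rows)[:, None, None]  # grid row -> PC1 offset
    b = np.linspace(-1.0, 1.0, cols)[None, :, None]  # grid col -> PC2 offset
    return mean + a * std[0] * vt[0] + b * std[1] * vt[1]  # (rows, cols, dim)


def train_som(data, rows=6, cols=6, epochs=20, lr0=0.05, sigma0=1.5, seed=0):
    """Online SOM training from a PCA initialization. Because the map starts
    partially ordered, a small initial learning rate and a narrow neighborhood
    are used (illustrative values, not tuned)."""
    rng = np.random.default_rng(seed)
    weights = pca_init(data, rows, cols)
    # grid[i, j] = (i, j): node coordinates on the map lattice
    grid = np.stack(np.meshgrid(np.arange(rows), np.arange(cols), indexing="ij"),
                    axis=-1).astype(float)
    total = epochs * len(data)
    step = 0
    for _ in range(epochs):
        for x in data[rng.permutation(len(data))]:
            frac = step / total
            lr = lr0 * (1.0 - frac)                  # assumed: linear decay
            sigma = max(sigma0 * (1.0 - frac), 0.5)  # assumed: shrinking width
            # Best-matching unit: node whose weight vector is closest to x.
            dist = np.linalg.norm(weights - x, axis=2)
            bmu = np.array(np.unravel_index(np.argmin(dist), dist.shape))
            # Assumed Gaussian neighborhood, measured on the lattice.
            h = np.exp(-np.sum((grid - bmu) ** 2, axis=2) / (2.0 * sigma ** 2))
            # Pull the BMU and its lattice neighbors toward the sample.
            weights += lr * h[..., None] * (x - weights)
            step += 1
    return weights


def quantization_error(data, weights):
    """Mean distance from each sample to its best-matching unit."""
    codebook = weights.reshape(-1, weights.shape[-1])
    d = np.linalg.norm(data[:, None, :] - codebook[None, :, :], axis=2)
    return d.min(axis=1).mean()


if __name__ == "__main__":
    rng = np.random.default_rng(1)
    data = rng.normal(size=(200, 3))  # synthetic three-dimensional data
    init = pca_init(data, 6, 6)
    som = train_som(data)
    print("QE after PCA init:", quantization_error(data, init))
    print("QE after training:", quantization_error(data, som))
```

Comparing the two printed quantization errors gives a rough, data-dependent sense of how much training refines the PCA-initialized map; SOM software with automatic parameter selection would replace the hand-set values used in this sketch.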

SOMs are less susceptible to noise in the data than other clustering techniques, such as hierarchical clustering (Mangiameli et al., 1996) or k-means clustering (Chen et al., 2002). Some authors emphasize the advantage of SOMs (as an unsupervised learning technique)
