Information Technology Reference

In-Depth Information

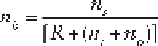

Jadid and Fairbairn (1996) proposed an upper limit on the number of hidden units, given by the following equation:

[5.9]

where R is a constant with values ranging from 5 to 10.

Too few neurons in the hidden layer may reduce the network's resolution ability, whereas too many lengthen the training phase considerably. The recommended number lies between the number of input neurons and twice that number, unless the number of input parameters is small (Ichikawa, 2003).
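The rule of thumb attributed to Ichikawa (2003) can be turned into a list of candidate hidden-layer sizes. This is a minimal sketch; the function name `recommended_hidden_range` is hypothetical, and because the text does not say what counts as a "small" number of inputs, the sketch does not try to handle that exception.

```python
def recommended_hidden_range(n_inputs: int) -> range:
    """Candidate hidden-layer sizes between n_inputs and 2 * n_inputs.

    The source notes the rule does not hold when the number of inputs is
    small; no threshold for "small" is given, so none is applied here.
    """
    if n_inputs < 1:
        raise ValueError("n_inputs must be a positive integer")
    # Inclusive range [n_inputs, 2 * n_inputs]
    return range(n_inputs, 2 * n_inputs + 1)


if __name__ == "__main__":
    print(list(recommended_hidden_range(6)))  # [6, 7, 8, 9, 10, 11, 12]
```

The result is best treated as a starting set of sizes to try, not a final answer.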

Kolmogorov's theorem states that 2n + 1 hidden nodes, where n is the number of input variables, are enough to compute any continuous function (Sun et al., 2003). In practice, however, the number of neurons in the hidden layer(s) is usually optimized during development of the neural network.
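One common way to carry out this optimization is to train networks with several candidate hidden-layer sizes and keep the size with the lowest error on held-out data. The sketch below assumes such a procedure; `validation_error` is a hypothetical callback standing in for an actual training run, and the 2n + 1 value from Kolmogorov's theorem is used only as an upper bound for the candidates in the usage example.

```python
from typing import Callable, Iterable


def kolmogorov_hidden_nodes(n_inputs: int) -> int:
    """Hidden-node count 2n + 1 for n input variables (Kolmogorov's theorem)."""
    if n_inputs < 1:
        raise ValueError("n_inputs must be a positive integer")
    return 2 * n_inputs + 1


def select_hidden_size(
    candidates: Iterable[int],
    validation_error: Callable[[int], float],
) -> int:
    """Return the candidate hidden-layer size with the lowest validation error.

    `validation_error` is a hypothetical callback: it is expected to train a
    network with the given number of hidden neurons and return its error on
    held-out data. Sizes are tried from smallest to largest, and ties keep
    the smaller (cheaper) network.
    """
    sizes = sorted(set(candidates))
    if not sizes:
        raise ValueError("at least one candidate size is required")
    best_size = sizes[0]
    best_err = validation_error(best_size)
    for size in sizes[1:]:
        err = validation_error(size)
        if err < best_err:  # strict '<' so ties keep the smaller size
            best_size, best_err = size, err
    return best_size


if __name__ == "__main__":
    n_inputs = 4
    upper = kolmogorov_hidden_nodes(n_inputs)  # 9

    # Toy error curve standing in for real training runs (illustrative only).
    def toy_error(h: int) -> float:
        return (h - 7) ** 2 + 1.0

    print(select_hidden_size(range(n_inputs, upper + 1), toy_error))  # 7
```

Preferring the smaller network on ties reflects the trade-off described above: extra neurons cost training time without improving the result.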

Many ANN models consist of only one hidden layer and have been shown to give accurate predictions. Overfitting of the network, or memorization of the training data, can often occur when the number of hidden layers and neurons is too high.
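A rough way to see why many hidden layers and neurons invite memorization is to count the trainable weights and compare that count with the number of training observations. The sketch below assumes a fully connected feed-forward network with one bias per hidden and output neuron; `count_weights` and the training-set size of 40 are hypothetical illustrations, and the ratio is only a heuristic warning sign, not a criterion from the source.

```python
from typing import Sequence


def count_weights(n_inputs: int, hidden_layers: Sequence[int], n_outputs: int) -> int:
    """Count trainable parameters of a fully connected feed-forward network.

    Each neuron in a hidden or output layer is assumed to have one weight per
    neuron in the previous layer plus one bias.
    """
    sizes = [n_inputs, *hidden_layers, n_outputs]
    return sum((fan_in + 1) * fan_out for fan_in, fan_out in zip(sizes, sizes[1:]))


if __name__ == "__main__":
    n_train = 40  # hypothetical number of training observations
    for hidden in ([5], [10], [10, 10]):
        n_w = count_weights(5, hidden, 1)
        print(hidden, n_w, f"{n_w / n_train:.1f} weights per observation")
```

With five inputs and one output, going from one hidden layer of 10 neurons (71 weights) to two such layers (181 weights) more than doubles the number of free parameters that the same training data must constrain.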

Some of the most frequently used types of neural networks are described in more detail below. ANNs are often used when conventional statistical classification and/or modeling techniques do not provide satisfactory results. It is therefore useful to compare some of the most frequently used terms in statistics and in neural networks (Table 5.1).

Table 5.1 Equivalent terms in statistics and neural networks (Orr, 1996)

| Statistics            | Neural networks     |
|-----------------------|---------------------|
| model                 | network             |
| estimation            | learning            |
| regression            | supervised learning |
| interpolation         | generalization      |
| observations          | training set        |
| parameters            | (synaptic) weights  |
| independent variables | inputs              |
| dependent variables   | outputs             |
| ridge regression      | weight decay        |
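The last row of Table 5.1 can be illustrated with the simplest possible model. The sketch below assumes a linear model with a single weight and no bias (an illustrative simplification, not taken from the source) and made-up data. It shows that gradient descent written as a weight-decay update converges to the same weight as the closed-form ridge regression solution; with the penalty set to zero, both reduce to ordinary least-squares regression, the "supervised learning" row.

```python
from typing import Sequence


def ridge_closed_form(xs: Sequence[float], ys: Sequence[float], lam: float) -> float:
    """Weight w minimising sum((y - w*x)**2) + lam * w**2 (no intercept)."""
    sxy = sum(x * y for x, y in zip(xs, ys))
    sxx = sum(x * x for x in xs)
    return sxy / (sxx + lam)


def train_with_weight_decay(
    xs: Sequence[float], ys: Sequence[float], lam: float,
    lr: float = 0.01, steps: int = 1000,
) -> float:
    """Gradient descent on the same objective, written as a weight-decay update.

    Each step shrinks w by the factor (1 - 2*lr*lam) and then applies the
    ordinary squared-error gradient. The iteration is stable only if
    lr < 1 / (sum(x**2) + lam).
    """
    w = 0.0
    for _ in range(steps):
        grad_sse = -2.0 * sum(x * (y - w * x) for x, y in zip(xs, ys))
        w = (1.0 - 2.0 * lr * lam) * w - lr * grad_sse
    return w


if __name__ == "__main__":
    xs = [1.0, 2.0, 3.0, 4.0]
    ys = [2.1, 3.9, 6.2, 7.8]  # made-up illustrative data
    for lam in (0.0, 2.0):  # lam = 0 is ordinary least-squares regression
        print(lam, ridge_closed_form(xs, ys, lam), train_with_weight_decay(xs, ys, lam))
```

The shrink factor (1 - 2*lr*lam) is exactly the gradient of the ridge penalty lam * w**2 folded into the update, which is why the two names describe the same regularization.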
