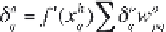

[5.7]

f′ are the derivatives of the activation functions; therefore, the BP algorithm can only be applied to networks whose activation functions are differentiable.
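The differentiability requirement can be illustrated with a small sketch. It assumes the logistic (sigmoid) function as the activation, a common choice whose derivative has the closed form f′(x) = f(x)(1 − f(x)). A threshold (step) function is shown for contrast: its derivative is zero wherever it exists, so it gives the weight update no gradient information. The helper names (`sigmoid_prime`, `numerical_derivative`, `step`) are illustrative and not taken from the text.

```python
import math


def sigmoid(x: float) -> float:
    """Logistic activation f(x) = 1 / (1 + e^-x)."""
    return 1.0 / (1.0 + math.exp(-x))


def sigmoid_prime(x: float) -> float:
    """Analytic derivative f'(x) = f(x) * (1 - f(x))."""
    s = sigmoid(x)
    return s * (1.0 - s)


def step(x: float) -> float:
    """Threshold activation: not differentiable at 0, derivative 0 elsewhere."""
    return 1.0 if x >= 0 else 0.0


def numerical_derivative(f, x: float, h: float = 1e-6) -> float:
    """Central-difference approximation, used here only as a sanity check."""
    return (f(x + h) - f(x - h)) / (2.0 * h)


for x in (-2.0, 0.0, 2.0):
    # analytic and numerical derivatives of the sigmoid should agree closely
    print(x, sigmoid_prime(x), numerical_derivative(sigmoid, x))

# the step function's slope is zero away from the threshold: no signal for learning
print(numerical_derivative(step, 1.0))
```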

Note that in the BP algorithm the error signal is propagated backwards, from the output layer to the hidden layers, in contrast to the feed-forward transmission of signals (from the input layer to the output layer via the hidden layers). The most frequently used ANN is the multilayer perceptron, a feed-forward network trained with the BP algorithm. A major problem in ANN training is that the network may get stuck in a local minimum of the error function, and several techniques have been developed to address this issue (Erb, 1993).
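The forward and backward passes described above can be sketched for a network with one hidden layer. This is a minimal illustration under stated assumptions, not the method of the cited works: sigmoid activations, a sum-of-squares error, batch steepest-descent updates, and a hypothetical training set (the logical OR function). The learning rate, epoch count, seed, and function names are arbitrary choices. In the backward pass, each error term is multiplied by f′ = o(1 − o), the derivative of the sigmoid, and the output error is sent back to the hidden units through the output weights.

```python
import math
import random


def sigmoid(x):
    """Logistic activation function."""
    return 1.0 / (1.0 + math.exp(-x))


def forward(x, W1, b1, W2, b2):
    """Feed-forward pass: input layer -> hidden layer -> single output unit."""
    h = [sigmoid(sum(w * xi for w, xi in zip(row, x)) + b) for row, b in zip(W1, b1)]
    o = sigmoid(sum(w * hj for w, hj in zip(W2, h)) + b2)
    return h, o


def sse(data, params):
    """Sum-of-squares error E = 1/2 * sum (o - t)^2 over the training set."""
    return sum(0.5 * (forward(x, *params)[1] - t) ** 2 for x, t in data)


def train(data, n_hidden=2, lr=0.5, epochs=2000, seed=0):
    rng = random.Random(seed)
    n_in = len(data[0][0])
    W1 = [[rng.uniform(-1, 1) for _ in range(n_in)] for _ in range(n_hidden)]
    b1 = [rng.uniform(-1, 1) for _ in range(n_hidden)]
    W2 = [rng.uniform(-1, 1) for _ in range(n_hidden)]
    b2 = rng.uniform(-1, 1)
    initial = sse(data, (W1, b1, W2, b2))
    for _ in range(epochs):
        # gradients accumulated over the whole training set (batch mode)
        gW1 = [[0.0] * n_in for _ in range(n_hidden)]
        gb1 = [0.0] * n_hidden
        gW2 = [0.0] * n_hidden
        gb2 = 0.0
        for x, t in data:
            h, o = forward(x, W1, b1, W2, b2)
            # output error term: (o - t) times f'(net) = o * (1 - o)
            delta_o = (o - t) * o * (1.0 - o)
            gb2 += delta_o
            for j in range(n_hidden):
                # error propagated backwards to hidden unit j through weight W2[j]
                delta_h = delta_o * W2[j] * h[j] * (1.0 - h[j])
                gW2[j] += delta_o * h[j]
                gb1[j] += delta_h
                for i in range(n_in):
                    gW1[j][i] += delta_h * x[i]
        # steepest-descent weight update (after all patterns, so W2 was unchanged above)
        b2 -= lr * gb2
        for j in range(n_hidden):
            W2[j] -= lr * gW2[j]
            b1[j] -= lr * gb1[j]
            for i in range(n_in):
                W1[j][i] -= lr * gW1[j][i]
    params = (W1, b1, W2, b2)
    return params, initial, sse(data, params)


# Hypothetical training set: the logical OR function.
OR_DATA = [([0, 0], 0), ([0, 1], 1), ([1, 0], 1), ([1, 1], 1)]
params, before, after = train(OR_DATA)
print(f"error before training: {before:.4f}, after: {after:.4f}")
```

Batch updates were chosen so that each step follows the true gradient of the total error; per-pattern (online) updates are an equally common variant.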

Apart from the BP algorithm, various other techniques are available for adjusting the weights during training (i.e., for error minimization), such as the steepest descent method, the conjugate gradient method, the simulated annealing algorithm, Newton's method, and the Gauss-Newton method (Peh et al., 2000; Reis et al., 2004). The conjugate gradient method is often combined with the simulated annealing algorithm to reach the global minimum rather than one of the local minima (Peh et al., 2000). Genetic algorithms (GAs) can also be used to search for the global error minimum.
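Why a stochastic method such as simulated annealing can escape local minima is easiest to see on a one-weight toy problem. The sketch below is a generic simulated annealing loop, not the conjugate gradient plus annealing combination cited above; the error surface E(w) = 0.1w² − cos(3w) is hypothetical, chosen because its global minimum (E = −1 at w = 0) and its local minima are known. Plain steepest descent started at w = 4 settles in the nearest local minimum, whereas annealing occasionally accepts uphill moves (with probability exp(−ΔE/T)) while the temperature T is high. The cooling schedule, step size, and seed are arbitrary assumptions.

```python
import math
import random


def error(w):
    """Hypothetical 1-D error surface: global minimum E = -1 at w = 0, local minima elsewhere."""
    return 0.1 * w * w - math.cos(3.0 * w)


def error_grad(w):
    """Derivative dE/dw of the toy error surface."""
    return 0.2 * w + 3.0 * math.sin(3.0 * w)


def gradient_descent(w, lr=0.01, steps=1000):
    """Plain steepest descent: converges to the nearest minimum."""
    for _ in range(steps):
        w -= lr * error_grad(w)
    return w


def simulated_annealing(w, t0=3.0, cooling=0.998, steps=5000, step_size=0.5, seed=1):
    """Metropolis acceptance with geometric cooling; returns the best (w, E) seen."""
    rng = random.Random(seed)
    e = error(w)
    best_w, best_e = w, e
    t = t0
    for _ in range(steps):
        cand = w + rng.gauss(0.0, step_size)
        e_cand = error(cand)
        delta = e_cand - e
        # always accept downhill moves; accept uphill moves with probability exp(-delta / t)
        if delta < 0 or rng.random() < math.exp(-delta / t):
            w, e = cand, e_cand
            if e < best_e:
                best_w, best_e = w, e
        t *= cooling  # lower the temperature: uphill moves become rarer
    return best_w, best_e


start = 4.0
w_gd = gradient_descent(start)
w_sa, e_sa = simulated_annealing(start)
print(f"steepest descent:    w = {w_gd:.3f}, E = {error(w_gd):.3f}")
print(f"simulated annealing: w = {w_sa:.3f}, E = {e_sa:.3f}")
```

In a real network the same acceptance rule would be applied to perturbations of the whole weight vector, and the error would be evaluated over the training set.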

It is often difficult to determine the optimal number of units (neurons) in the hidden layer(s). The number of neurons in the input layer corresponds to the number of independent variables, whereas the number of neurons in the output layer equals the number of dependent variables. The number of neurons in the hidden layers depends on many factors, such as the complexity of the problem studied, the amount of training data, the type of activation function, the training algorithm, and the required prediction accuracy (Sun et al., 2003). Carpenter and Hoffman (1995) introduced an approach that relates the number of units in the input, hidden, and output layers to the number of training data pairs:

$$n_s = \beta\,[\,n_h(n_i + 1) + n_o(n_h + 1)\,] \qquad [5.8]$$

where $n_h$ is the number of hidden units, $n_i$ is the number of input units, $n_o$ is the number of output units, and $n_s$ is the number of training data pairs.
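As a worked illustration with hypothetical values (not taken from the text), consider a network with $n_i = 3$ input units, $n_h = 4$ hidden units, and $n_o = 1$ output unit, trained on $n_s = 63$ data pairs. Solving Eq. [5.8] for β gives:

```latex
\begin{aligned}
n_h(n_i + 1) + n_o(n_h + 1) &= 4(3 + 1) + 1(4 + 1) = 16 + 5 = 21 \\
\beta &= \frac{n_s}{n_h(n_i + 1) + n_o(n_h + 1)} = \frac{63}{21} = 3
\end{aligned}
```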

The constant β is a parameter describing the degree of overdetermination (Takayama et al., 2003). The bracketed term in Eq. [5.8] is the total number of adjustable weights in the network, including one bias weight for each hidden and output unit (hence the "+ 1" terms). When β is greater than 1, reasonable predictions can be expected, because the number of training data pairs then exceeds the number of weights that need to be adjusted in the neural network.
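The β > 1 criterion can be turned into a rough upper bound on the size of a single hidden layer. The sketch below is a heuristic derived from Eq. [5.8] under that assumption, not a procedure given in the text; the function names are hypothetical. It counts the adjustable weights (the bracketed term), computes β, and searches for the largest $n_h$ that keeps β above a chosen threshold.

```python
def n_weights(n_i: int, n_h: int, n_o: int) -> int:
    """Adjustable weights, including biases, of an n_i-n_h-n_o network (bracket in Eq. [5.8])."""
    return n_h * (n_i + 1) + n_o * (n_h + 1)


def beta(n_s: int, n_i: int, n_h: int, n_o: int) -> float:
    """Degree of overdetermination: training pairs per adjustable weight."""
    return n_s / n_weights(n_i, n_h, n_o)


def max_hidden_units(n_s: int, n_i: int, n_o: int, beta_min: float = 1.0) -> int:
    """Largest n_h for which beta stays strictly above beta_min (0 if none)."""
    if beta_min <= 0:
        raise ValueError("beta_min must be positive")
    n_h = 0
    # the weight count grows with n_h, so beta decreases and the loop terminates
    while beta(n_s, n_i, n_h + 1, n_o) > beta_min:
        n_h += 1
    return n_h


print(n_weights(3, 4, 1))           # 21
print(beta(63, 3, 4, 1))            # 3.0
print(max_hidden_units(63, 3, 1))   # 12
```

For 63 training pairs, 3 inputs, and 1 output, the network has 5n_h + 1 weights, so β > 1 holds up to $n_h = 12$ (61 weights). Requiring a larger margin, e.g. `beta_min=2.0`, gives a more conservative bound.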
