Information Technology Reference

[Figure 1 diagram labels: Input Layer, Hidden Layer, Output Layer; computing unit; connection between units; W = weight on connection; B = bias of transfer function; arrow showing the direction of information]

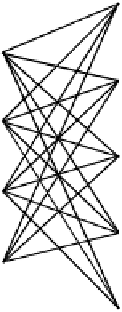

Figure 1. Example of a feed-forward neural network, showing the connections between the computing units. The network consists of an input layer, one hidden layer and an output layer. In this example, one weight (W) is set on the upper-right connection and a bias (B) is put into the transfer function of the lowest computing unit of the hidden layer.

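An example of a feed-forward neural network can be seen in Figure 1. It is called feed-forward because all of its connections point forward: information flows from the input layer through the hidden layer to the output layer, and no connection leads back to an earlier layer.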
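The forward pass of a network like the one in Figure 1 can be sketched in Python as below. This is a minimal illustration under stated assumptions: the logistic (sigmoid) transfer function, the layer sizes, and all weight and bias values are hypothetical and not taken from the figure, and the helper names `sigmoid`, `layer_forward` and `feed_forward` are likewise illustrative.

```python
import math


def sigmoid(z):
    """Logistic transfer function (an assumed choice of transfer function)."""
    return 1.0 / (1.0 + math.exp(-z))


def layer_forward(inputs, weights, biases):
    """Compute one layer: each unit sums its weighted inputs, adds its bias,
    and applies the transfer function. weights[j][i] is the weight on the
    connection from input i to unit j."""
    outputs = []
    for w_row, b in zip(weights, biases):
        z = sum(w * x for w, x in zip(w_row, inputs)) + b
        outputs.append(sigmoid(z))
    return outputs


def feed_forward(inputs, hidden_w, hidden_b, out_w, out_b):
    """Information flows in one direction only: input -> hidden -> output."""
    hidden = layer_forward(inputs, hidden_w, hidden_b)
    return layer_forward(hidden, out_w, out_b)


# Hypothetical network: 2 inputs, 3 hidden units, 1 output unit.
hidden_w = [[0.5, -0.2], [0.1, 0.4], [-0.3, 0.8]]
hidden_b = [0.0, 0.0, 0.1]  # bias only on the last hidden unit, loosely mirroring Figure 1
out_w = [[0.7, -0.5, 0.2]]
out_b = [0.0]

print(feed_forward([1.0, 0.5], hidden_w, hidden_b, out_w, out_b))
```

Because each layer only consumes the outputs of the previous layer, there is no path for information to flow backward, which is what makes the network feed-forward.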

An important feature of neural networks is their ability to learn from experimental data. A neural network can be trained on experimental data in order to make predictions about the future. By changing the weights, constants and biases, the output of the network can be influenced. The weights and biases are changed according to the learning rules of the neural network. Many different learning rules exist, and the learning rule used is another feature that distinguishes different kinds of neural networks.
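As an illustration of one learning rule, the sketch below implements the delta rule (gradient descent on the squared error) for a single linear computing unit. This is a swapped-in example, not necessarily a learning rule used in this text; the function name `train_linear_unit`, the learning rate, the number of epochs and the training data are all hypothetical.

```python
def train_linear_unit(samples, lr=0.1, epochs=200):
    """Delta rule for one linear unit: after each sample, nudge every weight
    and the bias in the direction that reduces the squared error (t - o)^2."""
    n_inputs = len(samples[0][0])
    weights = [0.0] * n_inputs
    bias = 0.0
    for _ in range(epochs):
        for x, t in samples:
            o = sum(w * xi for w, xi in zip(weights, x)) + bias  # linear output
            error = t - o
            weights = [w + lr * error * xi for w, xi in zip(weights, x)]
            bias += lr * error
    return weights, bias


# Hypothetical training data generated by t = 2*x1 - x2 + 0.5
data = [([0.0, 0.0], 0.5), ([1.0, 0.0], 2.5), ([0.0, 1.0], -0.5), ([1.0, 1.0], 1.5)]
w, b = train_linear_unit(data)
print(w, b)  # weights should approach [2.0, -1.0] and the bias 0.5
```

The learning rate controls how large each adjustment is: too small and training is slow, too large and the updates can overshoot and diverge.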

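Learning rules commonly work by adjusting the weights and biases so as to minimize an error function that measures how far the network's output is from the target. One example of such an error function, the summed square error, is defined as: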

$$E_{\text{sum squared}} = \sum_{x} \left[ o_x - t_x \right]^2$$

In this equation, the square of the error between the output (o) and the target (t) is computed for each element x of the training set, and these squares are added together, resulting in the summed square error.
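The summed square error can be computed directly from its definition. The sketch below assumes that the network outputs o_x and the targets t_x are already available as equal-length lists of numbers; the function name `summed_square_error` and the example values are hypothetical.

```python
def summed_square_error(outputs, targets):
    """E = sum over training elements x of (o_x - t_x)^2."""
    if len(outputs) != len(targets):
        raise ValueError("outputs and targets must have the same length")
    return sum((o - t) ** 2 for o, t in zip(outputs, targets))


# Hypothetical outputs o_x and targets t_x for three training elements
print(summed_square_error([0.9, 0.2, 0.4], [1.0, 0.0, 0.5]))
```

Squaring makes every term non-negative, so positive and negative errors cannot cancel, and larger errors are penalized more heavily than small ones.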