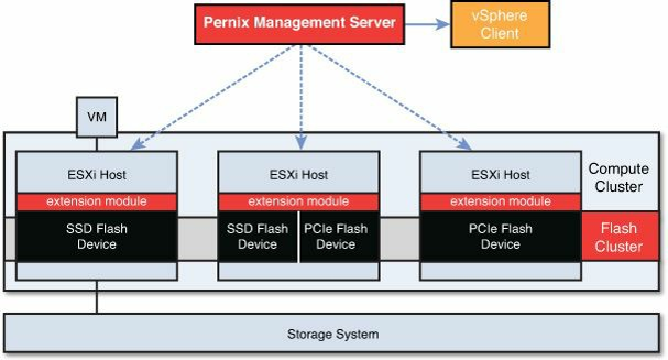

aggregates server-side flash devices across an entire enterprise to create a scale-out data tier for the acceleration of primary storage. PernixData FVP optimizes both reads and writes at the host level, reducing application latency from milliseconds to microseconds. With FVP, the write cache policy can be write back, not just write through. When the write back cache policy is used, writes are replicated simultaneously to an alternate host to ensure persistence and redundancy in the case of a flash device or host failure.
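The difference between the two write cache policies can be illustrated with a small model. The following is a minimal sketch only, not FVP's actual implementation or API: the `Host` and `ToyCache` classes, the `replicas` dictionary, and the `destage` and `recover_from_peer` methods are all hypothetical names invented for illustration, and backing storage is modeled as a plain dictionary.

```python
class Host:
    """Hypothetical host with a local flash device, modeled as dictionaries."""

    def __init__(self, name):
        self.name = name
        self.flash = {}     # block -> data cached on local flash
        self.dirty = set()  # blocks not yet written to backing storage
        self.replicas = {}  # copies of a peer's dirty blocks


class ToyCache:
    """Illustrative write cache; the policy names mirror the text above."""

    def __init__(self, host, peer, storage, policy="write-through"):
        if policy not in ("write-through", "write-back"):
            raise ValueError("unknown policy: " + policy)
        self.host = host
        self.peer = peer
        self.storage = storage  # backing storage, a plain dict here
        self.policy = policy

    def write(self, block, data):
        self.host.flash[block] = data
        if self.policy == "write-through":
            # The write also goes to backing storage before it completes.
            self.storage[block] = data
        else:
            # Write back: replicate to the peer host instead of waiting
            # for backing storage, and remember the block is dirty.
            self.peer.replicas[block] = data
            self.host.dirty.add(block)

    def destage(self):
        """Flush dirty blocks to backing storage and drop the replicas."""
        for block in sorted(self.host.dirty):
            self.storage[block] = self.host.flash[block]
            self.peer.replicas.pop(block, None)
        self.host.dirty.clear()

    def recover_from_peer(self):
        """Assumed recovery path: the peer's replicas reach storage."""
        for block, data in sorted(self.peer.replicas.items()):
            self.storage[block] = data
        self.peer.replicas.clear()
```

In this model, a write back write is safe against the loss of the first host because the peer still holds a copy that can be flushed to storage, which is the reason the text gives for replicating writes to an alternate host.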

Application performance improvements are achieved independently of storage capacity. This gives virtualization administrators greater control over how they manage application performance. Performance acceleration is seamless, requiring no changes to applications, workflows, or storage infrastructure. Figure 6.43 shows a high-level overview of the PernixData Flash Virtualization Platform architecture.

Figure 6.43 PernixData FVP architecture overview.

The flash devices in each ESXi host are virtualized by FVP, abstracted, and pooled across the entire flash cluster. As a result, you can have flash devices of differing types and sizes in different hosts. Ideally, though, you will have a homogeneous configuration to produce more uniform performance acceleration. Hosts that don't have local flash devices can still participate in the flash cluster and benefit from read IO acceleration. A cluster in which some hosts have local flash devices and some don't is termed a “non-uniform configuration.”

In the case of a non-uniform flash cluster configuration, when a VM on a host without a flash device issues a read operation of data already present in the flash cluster, FVP will fetch the data from the previous source host and send it to the virtual machine.
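The read path in a non-uniform configuration can be sketched the same way. This is a simplified illustration under stated assumptions, not FVP's real lookup logic: `ClusterHost` and `read_block` are hypothetical names, the cluster is a plain list of hosts, and the search order (local flash, then any other host's flash, then backing storage) is an assumption made for the example.

```python
class ClusterHost:
    """Hypothetical cluster member; flash is None when no device is present."""

    def __init__(self, name, has_flash=True):
        self.name = name
        self.flash = {} if has_flash else None


def read_block(host, block, cluster, storage):
    """Return (data, source) for a read issued by a VM running on `host`."""
    # 1. Local flash hit, possible only if this host has a flash device.
    if host.flash is not None and block in host.flash:
        return host.flash[block], "local-flash"

    # 2. Another host in the flash cluster already holds the block,
    #    so fetch it over the network instead of going to storage.
    for other in cluster:
        if other is not host and other.flash is not None and block in other.flash:
            return other.flash[block], "remote-flash:" + other.name

    # 3. Miss everywhere: read from backing storage and, if this host
    #    has flash, keep a copy for later reads.
    data = storage[block]
    if host.flash is not None:
        host.flash[block] = data
    return data, "storage"


if __name__ == "__main__":
    storage = {1: b"a", 2: b"b"}
    with_flash = ClusterHost("esx-1")
    without_flash = ClusterHost("esx-2", has_flash=False)
    cluster = [with_flash, without_flash]

    print(read_block(with_flash, 1, cluster, storage))     # first read comes from storage
    print(read_block(without_flash, 1, cluster, storage))  # served from esx-1's flash
```

The host without a flash device never caches anything itself in this sketch, yet its second-hand read of block 1 still avoids backing storage, which is the read acceleration benefit the text describes.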