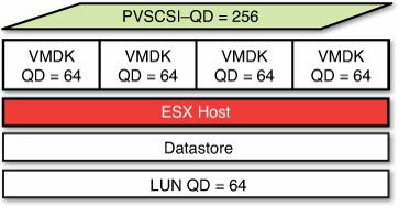

VMDKs from a single VM on the single vSphere host. Each VMDK would be able to issue 16 outstanding IOs on average, while an individual VMDK will be able to fill the entire queue if the other VMDKs are idle.

Figure 6.26 Four VMDKs per data store.
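
The per-VMDK figure above is simple division of the host's LUN queue among the VMDKs competing for it. The sketch below is a simplified model that assumes a per-host LUN queue depth of 64 split evenly among busy VMDKs. The function name is hypothetical, and real ESXi scheduling is more dynamic than an even split.

```python
def per_vmdk_queue_share(lun_queue_depth: int, active_vmdks: int) -> float:
    """Average outstanding IOs each active VMDK can issue when all of them
    compete for the same per-host LUN queue (simplified even split)."""
    if active_vmdks < 1:
        raise ValueError("need at least one active VMDK")
    return lun_queue_depth / active_vmdks


LUN_QUEUE_DEPTH = 64  # assumed per-host LUN queue depth

# All four VMDKs busy: each averages 16 outstanding IOs.
print(per_vmdk_queue_share(LUN_QUEUE_DEPTH, 4))  # 16.0
# Only one VMDK busy: it can fill the entire queue by itself.
print(per_vmdk_queue_share(LUN_QUEUE_DEPTH, 1))  # 64.0
```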

This is quite possibly fine for a single host and a single VM on this data store. But a data store is shared among all hosts in the cluster. If we host only a single VM on the data store, and only on a single host, we cannot utilize all of the queue depth that is usually available at the storage array. This assumes that the physical LUN configuration can support a higher aggregate queue depth and higher IOPS at the storage array level. If your back-end storage is already performance constrained by its configuration, adding more queue depth and more VMs and VMDKs to the data store will only serve to increase latencies and IO service times.
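
Why extra queue depth only adds latency on a saturated back end can be illustrated with Little's Law (outstanding IOs = IOPS × average response time), a general queueing result applied here as an assumption about steady-state averages. The 20,000 IOPS ceiling below is a hypothetical number chosen only for illustration.

```python
def avg_latency_ms(outstanding_ios: int, saturated_iops: float) -> float:
    """Little's Law (L = X * R) rearranged as R = L / X.

    Once throughput X is pinned at the back end's saturation point,
    every extra outstanding IO (L) shows up as longer response time (R).
    Returns milliseconds.
    """
    if saturated_iops <= 0:
        raise ValueError("IOPS must be positive")
    return outstanding_ios * 1000 / saturated_iops


BACKEND_MAX_IOPS = 20_000  # hypothetical ceiling of an already-constrained LUN

print(avg_latency_ms(64, BACKEND_MAX_IOPS))   # 3.2 (ms)
print(avg_latency_ms(128, BACKEND_MAX_IOPS))  # 6.4 (ms): double the queue, double the latency
```

Under this assumption, doubling the outstanding IOs against a back end that cannot deliver more IOPS simply doubles the average service time.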

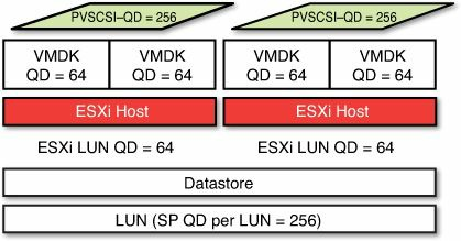

Figure 6.27 shows two SQL VMs on two different ESXi hosts accessing the same data store. In this scenario, because each host has a LUN queue depth of 64, the combined queue depth to the LUN at the storage array could be up to 128. Provided the LUN can support the additional queue depth and IOPS without increasing latency, this would allow us to extract more performance from the same LUN while reducing the number of LUNs that need to be managed. For this reason, sharing data stores between multiple VMs and VMDKs across multiple hosts can deliver better performance than dedicating each data store to a single VM on a single host. But it is important to make sure that each VM gets a fair share of the performance resources of the data store.
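
The combined figure follows from each host contributing its own LUN queue. The sketch below models that as per-host depth multiplied by host count, optionally capped by what the array can service for that LUN. The function name and the array-side limit of 96 are hypothetical, used only to show where the ceiling moves from the hosts to the array.

```python
from typing import Optional


def combined_lun_queue_depth(per_host_depth: int, hosts: int,
                             array_lun_limit: Optional[int] = None) -> int:
    """Upper bound on outstanding IOs the array can see for one LUN when
    several hosts each drive it through their own per-host LUN queue."""
    if hosts < 1:
        raise ValueError("need at least one host")
    total = per_host_depth * hosts
    if array_lun_limit is not None:
        # Past this point the array, not the hosts, is the limiting factor.
        total = min(total, array_lun_limit)
    return total


print(combined_lun_queue_depth(64, 1))      # 64: single host, as in Figure 6.26
print(combined_lun_queue_depth(64, 2))      # 128: two hosts, as in Figure 6.27
print(combined_lun_queue_depth(64, 2, 96))  # 96: hypothetical array-side limit
```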