
1

Strong performance

Weak performance

Random performance

Inverted performance

0

1

False positive rate

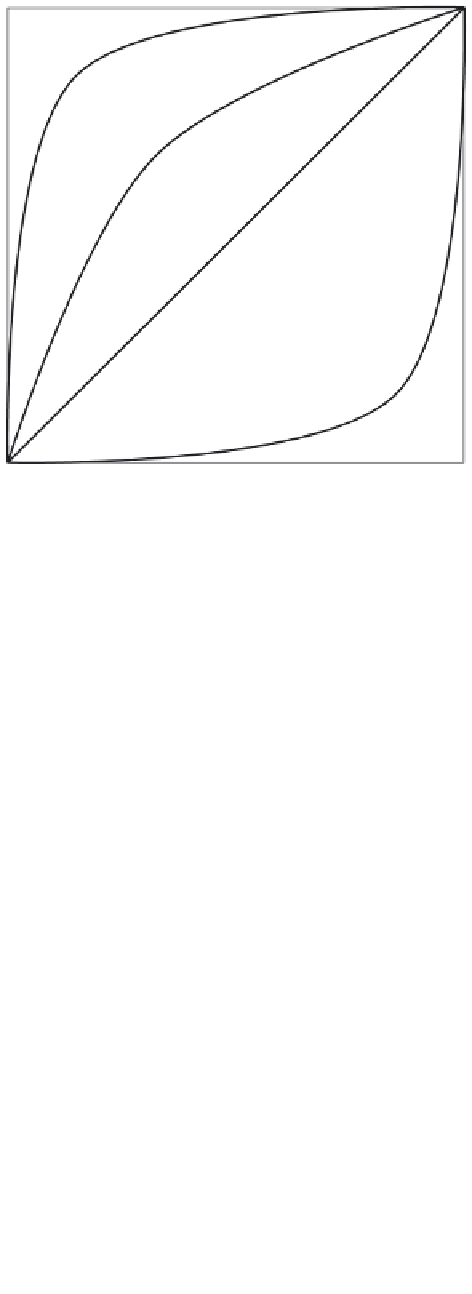

Figure 3.2

Examples of ROC curves. Each curve represents the performance of a dif-

ferent classifier on a dataset.

a point in the ROC space at $(0, 1)$; that is, all positive instances are correctly classified and no negative instances are misclassified. Conversely, the classifier that misclassifies all instances would have a single point at $(1, 0)$.

While $(0, 1)$ represents the ideal classifier and $(1, 0)$ represents its complement, the line $y = x$ in ROC space represents a random classifier, that is, a classifier that assigns a random prediction to each instance. This line gives a trivial lower bound in ROC space: any useful classifier should lie above it. An ROC curve is said to “dominate” another ROC curve if, at every FPR, it offers a higher TPR. By analyzing ROC curves, one can determine the best classifier for a specific FPR by selecting the classifier with the highest TPR at that FPR.
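As a rough illustration of these definitions, the Python sketch below computes the ROC point of a hard classifier from its confusion counts, then compares curves by TPR. It assumes each curve has already been sampled at the same interior FPR values; the grid, the TPR numbers, and the names `roc_point`, `dominates`, and `best_at_fpr` are hypothetical and are not taken from any library.

```python
from typing import Dict, List, Sequence, Tuple


def roc_point(y_true: Sequence[int], y_pred: Sequence[int]) -> Tuple[float, float]:
    """Return (FPR, TPR) for hard 0/1 predictions, where 1 is the positive class."""
    pairs = list(zip(y_true, y_pred))
    pos = sum(1 for t, _ in pairs if t == 1)
    neg = len(pairs) - pos
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)  # positives classified correctly
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)  # negatives misclassified
    return fp / neg, tp / pos


def dominates(tpr_a: Sequence[float], tpr_b: Sequence[float]) -> bool:
    """True if curve A has a higher TPR than curve B at every sampled FPR."""
    return all(a > b for a, b in zip(tpr_a, tpr_b))


def best_at_fpr(curves: Dict[str, List[float]], fpr_grid: List[float], fpr: float) -> str:
    """Name of the classifier with the highest TPR at one of the sampled FPRs."""
    i = fpr_grid.index(fpr)  # fpr must be one of the sampled values
    return max(curves, key=lambda name: curves[name][i])


# Hypothetical curves, each sampled at the same interior FPR values.
FPR_GRID = [0.1, 0.2, 0.4, 0.8]
curves = {
    "strong": [0.60, 0.80, 0.92, 0.99],
    "weak": [0.20, 0.35, 0.55, 0.85],
    "random": [0.10, 0.20, 0.40, 0.80],  # lies on the line y = x
    "crossing": [0.65, 0.70, 0.80, 0.95],  # beats "strong" only at FPR = 0.1
}

print(roc_point([1, 1, 0, 0], [1, 1, 0, 0]))  # ideal classifier: (0.0, 1.0)
print(roc_point([1, 1, 0, 0], [0, 0, 1, 1]))  # its complement: (1.0, 0.0)
print(dominates(curves["weak"], curves["random"]))  # True
print(dominates(curves["strong"], curves["crossing"]))  # False: the curves cross
print(best_at_fpr(curves, FPR_GRID, 0.1), best_at_fpr(curves, FPR_GRID, 0.2))  # crossing strong
```

When two curves cross, as "strong" and "crossing" do here, neither dominates the other, and the preferred classifier depends on which FPR is acceptable.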

To generate an ROC curve, the decision boundary (the classification threshold) is moved, and each threshold yields one point. Points nearer to the left in ROC space result from requiring a higher threshold before an instance is classified as positive. This property of ROC curves allows practitioners to choose the decision threshold that gives the best TPR for an acceptable FPR (the Neyman-Pearson method) [33].
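The threshold sweep and the Neyman-Pearson selection can be sketched in Python as follows. This is a minimal, unoptimized sketch assuming binary labels (1 = positive) and real-valued classifier scores, with an instance predicted positive when its score is at least the threshold; the function names and the toy data are hypothetical.

```python
from typing import List, Sequence, Tuple

Point = Tuple[float, float, float]  # (threshold, FPR, TPR)


def roc_curve(y_true: Sequence[int], scores: Sequence[float]) -> List[Point]:
    """Sweep the threshold from high to low; predict positive when score >= threshold.

    Higher (stricter) thresholds come first, so the points move from the
    left of ROC space toward the right.
    """
    pos = sum(1 for t in y_true if t == 1)
    neg = len(y_true) - pos
    # A threshold above every score labels nothing positive: the point (0, 0).
    points: List[Point] = [(float("inf"), 0.0, 0.0)]
    for thr in sorted(set(scores), reverse=True):
        tp = sum(1 for t, s in zip(y_true, scores) if t == 1 and s >= thr)
        fp = sum(1 for t, s in zip(y_true, scores) if t == 0 and s >= thr)
        points.append((thr, fp / neg, tp / pos))
    return points


def neyman_pearson_threshold(points: List[Point], max_fpr: float) -> Point:
    """Highest-TPR point whose FPR does not exceed max_fpr.

    max() keeps the first maximum, and the points run from strict to lenient
    thresholds, so TPR ties go to the stricter threshold (lower FPR).
    """
    feasible = [p for p in points if p[1] <= max_fpr]
    return max(feasible, key=lambda p: p[2])


y_true = [1, 1, 0, 1, 0, 0]
scores = [0.9, 0.8, 0.7, 0.6, 0.4, 0.2]
points = roc_curve(y_true, scores)
for thr, fpr, tpr in points:
    print(f"threshold={thr}: FPR={fpr:.2f}, TPR={tpr:.2f}")
print(neyman_pearson_threshold(points, max_fpr=0.0)[0])  # 0.8
print(neyman_pearson_threshold(points, max_fpr=0.5)[0])  # 0.6
```

Recounting the labels for every threshold makes this quadratic in the number of instances; sorting the scores once and accumulating counts in a single pass gives the same curve more cheaply.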

The ROC convex hull can also provide a robust method for identifying potentially optimal classifiers [34]. Given a set of ROC curves, the ROC convex hull is generated by selecting only the best point for a given FPR. This is advantageous, since, if a line passes through a point on the convex hull, then there is
