
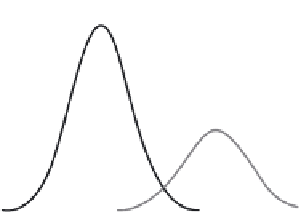

Majority-class distribution

Minority-class distribution

Virtual instances created by SMOTE

Virtual instances created by V

IRTUAL

M

arg

in

M

argi

n

(a)

(b)

Figure 6.7

Comparison of oversampling the minority class with SMOTE and VIRTUAL.

(a) Oversampling with SMOTE and (b) oversampling with VIRTUAL.

number of virtual instances created for a real positive support vector in each iteration, $|SV(+)|$ is the number of positive support vectors, and $C$ is the cost of finding $k$ nearest neighbors. The computational complexity of SMOTE is $O(|X_R^+| \cdot v \cdot C)$, where $|X_R^+|$ is the number of positive training instances. $C$ depends on the approach used to find the $k$ nearest neighbors. A naive implementation searches all $N$ training instances for the nearest neighbors, and thus $C = kN$. With an advanced data structure such as a kd-tree, $C = k \log N$. Since $|SV(+)|$ is typically much smaller than $|X_R^+|$, VIRTUAL incurs lower computational overhead than SMOTE. Moreover, because VIRTUAL creates fewer virtual instances, it places a lighter burden on the learner.
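The cost model above can be evaluated directly. The following is a minimal sketch that only encodes the formulas stated in the text, $C = kN$ (naive search), $C = k \log N$ (kd-tree), and $O(n \cdot v \cdot C)$ with $n = |SV(+)|$ for VIRTUAL or $n = |X_R^+|$ for SMOTE. The function names, the base-2 logarithm, and all dataset sizes are hypothetical choices made for illustration, not values from the experiments.

```python
import math

def knn_cost(n_train: int, k: int, method: str = "naive") -> float:
    """Cost C of one k-nearest-neighbor search under the text's cost model."""
    if method == "naive":
        # Scan all N training instances: C = kN
        return float(k * n_train)
    if method == "kdtree":
        # kd-tree search: C = k log N (base-2 log is an arbitrary choice here)
        return k * math.log2(n_train)
    raise ValueError(f"unknown method: {method!r}")

def oversampling_cost(n_seeds: int, v: int, c: float) -> float:
    """O(n_seeds * v * C): n_seeds is |SV(+)| for VIRTUAL and |X_R^+| for SMOTE."""
    return n_seeds * v * c

# Hypothetical sizes, chosen only to illustrate the formulas
N, k, v = 10_000, 5, 3
n_pos_train = 500  # |X_R^+|, positive training instances
n_pos_sv = 50      # |SV(+)|, positive support vectors

for method in ("naive", "kdtree"):
    c = knn_cost(N, k, method)
    smote = oversampling_cost(n_pos_train, v, c)
    virtual = oversampling_cost(n_pos_sv, v, c)
    print(f"{method}: C={c:.1f}, SMOTE={smote:.0f}, VIRTUAL={virtual:.0f}, "
          f"ratio={smote / virtual:.1f}")
```

Because $v$ and $C$ appear in both expressions, the SMOTE-to-VIRTUAL cost ratio reduces to $|X_R^+| / |SV(+)|$ regardless of which neighbor-search structure is used; the kd-tree only shrinks both costs by the same factor.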

We show empirically that the virtual instances created by VIRTUAL are more informative and that they also improve prediction performance.

6.4.3 Experiments

We conduct a series of experiments on Reuters-21578 and four UCI datasets

to demonstrate the efficacy of VIRTUAL. The characteristics of the datasets are

detailed in [22]. We compare VIRTUAL with two systems, AL and SMOTE. AL

adopts the traditional AL strategy without preprocessing or creating any virtual

instances during learning. SMOTE, on the other hand, preprocesses the data by

creating virtual instances before training and uses RS in learning. The experiments highlight the advantages of the adaptive creation of virtual instances in VIRTUAL.
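The difference between the two oversampling strategies lies mainly in which instances serve as seeds and when the virtual instances are created. The sketch below uses SMOTE's standard interpolation rule: a synthetic point is placed at a random position on the segment between a seed and one of its k nearest minority-class neighbors. It assumes, for simplicity, that neighbors are drawn from the minority class in both cases. The function names and the support-vector subset are hypothetical, and the sketch omits how VIRTUAL obtains its positive support vectors from the SVM and when it adds instances during active learning.

```python
import heapq
import math
import random
from typing import List, Sequence

Point = List[float]

def k_nearest(x: Point, pool: Sequence[Point], k: int) -> List[Point]:
    """Naive k-NN: compare x against every other point in the pool."""
    others = [p for p in pool if p is not x]  # skip x itself (identity check)
    return heapq.nsmallest(k, others, key=lambda p: math.dist(x, p))

def interpolate(x: Point, neighbor: Point, rng: random.Random) -> Point:
    """SMOTE-style synthetic point at a random position on the segment x -> neighbor."""
    gap = rng.random()  # uniform in [0, 1)
    return [xi + gap * (ni - xi) for xi, ni in zip(x, neighbor)]

def create_virtual_instances(seeds: Sequence[Point], minority: Sequence[Point],
                             k: int, v: int, rng: random.Random) -> List[Point]:
    """Create v virtual instances per seed from its k nearest minority-class neighbors."""
    virtual: List[Point] = []
    for x in seeds:
        neighbors = k_nearest(x, minority, k)
        for _ in range(v):
            virtual.append(interpolate(x, rng.choice(neighbors), rng))
    return virtual

rng = random.Random(0)
minority = [[0.0, 0.0], [1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [0.5, 0.5]]

# SMOTE-style: every positive training instance is a seed, once, before training
smote_virtual = create_virtual_instances(minority, minority, k=2, v=3, rng=rng)

# VIRTUAL-style: only positive support vectors are seeds (a hypothetical subset here;
# in VIRTUAL they would come from the SVM during active learning)
support_vectors = [minority[1], minority[4]]
virtual_from_svs = create_virtual_instances(support_vectors, minority, k=2, v=3, rng=rng)

print(len(smote_virtual), len(virtual_from_svs))  # 15 6
```

With the same $v$, the number of virtual instances scales with the number of seeds, which is why restricting seeds to positive support vectors yields fewer, margin-focused virtual instances.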

Figures 6.8 and 6.9 detail the behavior of the three algorithms, SMOTE, AL, and VIRTUAL. For the Reuters datasets (Fig. 6.9), VIRTUAL outperforms AL in the g-means metric after saturation in all 10 categories. The difference in performance is most pronounced in the more imbalanced categories, for example, corn, interest, and ship. In less imbalanced datasets such as acq and earn, the difference in g-means between the two methods is less noticeable.

The g-means of SMOTE converges much more slowly than that of AL and VIRTUAL.
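For reference, the g-means metric used in these comparisons is commonly defined as the geometric mean of sensitivity (recall on the positive, minority class) and specificity (recall on the negative class). The sketch below assumes that standard definition; the confusion-matrix counts are hypothetical.

```python
import math

def g_means(tp: int, fn: int, tn: int, fp: int) -> float:
    """Geometric mean of sensitivity (positive-class recall) and specificity."""
    sensitivity = tp / (tp + fn)  # fraction of positives classified correctly
    specificity = tn / (tn + fp)  # fraction of negatives classified correctly
    return math.sqrt(sensitivity * specificity)

# Hypothetical imbalanced test set: 50 positives, 1000 negatives
print(g_means(tp=40, fn=10, tn=900, fp=100))
# A classifier that labels everything negative
print(g_means(tp=0, fn=50, tn=1000, fp=0))
```

The second classifier reaches about 95% accuracy (1000 of 1050) yet scores a g-means of 0, which illustrates why g-means, rather than accuracy, is a more informative measure on imbalanced categories such as corn or ship.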
