
MRQL queries to an algebra that is then translated to physical plans using cost-based optimizations. In particular, the query plans are represented as trees that are evaluated by a plan interpreter, in which each physical operator is implemented as a single MapReduce job parameterized by the functional parameters of that operator. The data fragmentation technique of MRQL is built on top of the general Hadoop XML input format, which is based on a single XML tag name: given a data split of an XML document, this input format reads the document as a stream of string fragments, each of which contains a single complete element with the requested XML tag name.
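To make the plan-interpreter idea concrete, the following Python sketch evaluates a physical plan tree in which every operator node runs exactly one MapReduce job, parameterized by the functions (mapper and reducer) that the operator carries. The job is simulated in memory, and the names `run_mapreduce_job`, `Source`, `MapReduceOp`, and `evaluate` are hypothetical; they are not MRQL's actual API, and MRQL's real algebra, operators, and cost-based optimizer are considerably richer.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Any, Callable, Iterable, List, Tuple, Union

KV = Tuple[Any, Any]

def run_mapreduce_job(records: Iterable[Any],
                      mapper: Callable[[Any], Iterable[KV]],
                      reducer: Callable[[Any, List[Any]], Iterable[Any]]) -> List[Any]:
    """Simulate one MapReduce job in memory: map, shuffle (group by key), reduce."""
    groups = defaultdict(list)
    for record in records:
        for key, value in mapper(record):
            groups[key].append(value)
    output: List[Any] = []
    for key in sorted(groups):  # keys are sorted before the reduce phase, as in Hadoop
        output.extend(reducer(key, groups[key]))
    return output

@dataclass
class Source:
    """Plan leaf: an already materialized input (stands in for an HDFS file)."""
    records: List[Any]

@dataclass
class MapReduceOp:
    """Hypothetical physical operator whose functional parameters are a mapper and a reducer."""
    mapper: Callable[[Any], Iterable[KV]]
    reducer: Callable[[Any, List[Any]], Iterable[Any]]
    child: "Plan"

Plan = Union[Source, MapReduceOp]

def evaluate(plan: Plan) -> List[Any]:
    """Plan interpreter: evaluate the child first, then run one job for this operator."""
    if isinstance(plan, Source):
        return plan.records
    return run_mapreduce_job(evaluate(plan.child), plan.mapper, plan.reducer)

# A two-operator plan: count words, then group the words by their frequency.
count_words = MapReduceOp(
    mapper=lambda line: [(w, 1) for w in line.split()],
    reducer=lambda w, ones: [(w, sum(ones))],
    child=Source(["a b a", "b c"]),
)
freq_of_freq = MapReduceOp(
    mapper=lambda pair: [(pair[1], pair[0])],
    reducer=lambda n, words: [(n, sorted(words))],
    child=count_words,
)
print(evaluate(freq_of_freq))  # [(1, ['c']), (2, ['a', 'b'])]
```

Because each operator maps to exactly one job, a plan tree with two operators runs two jobs in sequence, and the intermediate result of the inner job becomes the input of the outer one.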
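The tag-name-based fragmentation described above can be sketched as a scanner that cuts a text split into complete elements with the requested tag. This is a simplified illustration of the behavior, not Hadoop's actual input-format code; the function name `xml_fragments` is hypothetical, and the sketch assumes that elements with the requested tag are neither nested inside each other nor self-closing, and it ignores comments and CDATA sections.

```python
from typing import Iterator

def xml_fragments(split: str, tag: str) -> Iterator[str]:
    """Yield each complete <tag>...</tag> element found in a text split (simplified)."""
    start_markers = (f"<{tag}>", f"<{tag} ")  # start tag without or with attributes
    end_marker = f"</{tag}>"
    pos = 0
    while True:
        # Locate the earliest start tag at or after the current position.
        starts = [i for i in (split.find(m, pos) for m in start_markers) if i != -1]
        if not starts:
            return
        start = min(starts)
        end = split.find(end_marker, start)
        if end == -1:
            return  # element runs past this split; a real reader would read ahead
        end += len(end_marker)
        yield split[start:end]  # one string fragment = one complete element
        pos = end

doc = '<dblp><article key="a1"><title>X</title></article><book/><article><title>Y</title></article></dblp>'
for fragment in xml_fragments(doc, "article"):
    print(fragment)
```

Each yielded string is a self-contained element, so a mapper can parse it independently of the rest of the document; the unrelated `<book/>` element is skipped.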

ChuQL

[80] is another language

that has been proposed to support distributed XML processing using the MapReduce

framework. It presents a MapReduce-based extension for the syntax, grammar, and

semantics of

XQuery

[21], the standard W3C language for querying XML docu-

ments. In particular, the ChuQL implementation takes care of distributing the com-

putation to multiple XQuery engines running in Hadoop nodes, as described by one

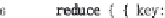

or more ChuQL MapReduce expressions. Figure 2.18 illustrates the representation of

the

word count

example program in the ChuQL language using its extended expres-

sions where the

MapReduce

expression is used to describe a MapReduce job. The

input

and

output

clauses are, respectively, used to read and write onto HDFS. The

rr

and

rw

clauses are, respectively, used for describing the record reader and writer.

The

map

and

reduce

clauses represent the standard map and reduce phases of the

framework where they process XML values or key/value pairs of XML values to

match the MapReduce model, which are specified using XQuery expressions.
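As a rough analogue of how the clauses of the word count program divide the work, the following Python sketch gives each role (record reader, map, reduce, record writer) its own function and runs the job in memory. It does not reproduce ChuQL syntax or its XQuery semantics; the `<line>` and `<counts>`/`<word>` element names and all function names are hypothetical, and a Python list of XML strings stands in for the HDFS input.

```python
import xml.etree.ElementTree as ET
from collections import defaultdict
from typing import Iterable, Iterator, List, Tuple

def record_reader(xml_docs: Iterable[str]) -> Iterator[str]:
    """'rr' role: turn each input document into records (text of <line> elements)."""
    for doc in xml_docs:
        for line in ET.fromstring(doc).iter("line"):
            yield line.text or ""

def map_phase(record: str) -> Iterator[Tuple[str, int]]:
    """'map' role: emit a (word, 1) pair for every token of a record."""
    for word in record.split():
        yield word, 1

def reduce_phase(word: str, counts: List[int]) -> Tuple[str, int]:
    """'reduce' role: sum the partial counts of one word."""
    return word, sum(counts)

def record_writer(results: Iterable[Tuple[str, int]]) -> str:
    """'rw' role: serialize the (word, count) pairs back to XML."""
    root = ET.Element("counts")
    for word, n in results:
        ET.SubElement(root, "word", count=str(n)).text = word
    return ET.tostring(root, encoding="unicode")

def word_count(xml_docs: Iterable[str]) -> str:
    groups = defaultdict(list)  # shuffle: group the map output by key
    for record in record_reader(xml_docs):
        for word, one in map_phase(record):
            groups[word].append(one)
    return record_writer(reduce_phase(w, groups[w]) for w in sorted(groups))

docs = ["<doc><line>to be or</line><line>not to be</line></doc>"]
print(word_count(docs))
# <counts><word count="2">be</word><word count="1">not</word><word count="1">or</word><word count="2">to</word></counts>
```

Separating the reader and writer from map and reduce mirrors the design described above: the same map and reduce logic can be reused with a different record format by swapping only the rr and rw parts.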

Several research efforts have aimed at achieving scalable RDF processing using the MapReduce framework. PigSPARQL [118] is a system that processes SPARQL queries with the MapReduce framework by translating them into Pig Latin programs, each of which is executed as a series of MapReduce jobs on a Hadoop cluster. Myung et al. [105] presented a preliminary algorithm for SPARQL graph pattern matching that adopts the traditional multiway join of the RDF triples and selects a good join key to avoid unnecessary iterations. Husain et al. [72] described a storage scheme for RDF data on HDFS in which the input data are partitioned into multiple files in two main steps: (1) the Predicate Split (PS), which partitions the RDF triples according to their predicates, and (2) the Predicate Object Split (POS), which uses the explicit type information in the RDF triples (stating that a resource is an instance of a specific class) to split the type triples by class, while the remaining predicate files are partitioned according to the type of their objects. Using summary statistics for estimating the selectivities of join operations, the authors

FIGURE 2.18 The word count example program in ChuQL. (From S. Khatchadourian et al., Having a ChuQL at XML on the cloud, in AMW, 2011.)
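To illustrate what a multiway join over RDF triples with a chosen join key looks like, the following Python sketch evaluates a star-shaped set of SPARQL-like triple patterns in a single simulated map/reduce pass: the map step tags each matching triple with its pattern index and keys it by the join variable's value, and the reduce step combines one binding per pattern. The join-key heuristic (pick the variable shared by the most patterns) and all names are assumptions for illustration, not the published algorithm of Myung et al.; the sketch also assumes every pattern contains the join variable, since otherwise further jobs would be needed.

```python
from collections import Counter, defaultdict
from itertools import product
from typing import Dict, List, Optional, Tuple

Triple = Tuple[str, str, str]
Bindings = Dict[str, str]

def is_var(term: str) -> bool:
    """SPARQL-style variables start with '?'."""
    return term.startswith("?")

def match(pattern: Triple, triple: Triple) -> Optional[Bindings]:
    """Return the variable bindings if the triple matches the pattern, else None."""
    bindings: Bindings = {}
    for p, t in zip(pattern, triple):
        if is_var(p):
            if bindings.setdefault(p, t) != t:  # same variable bound to two values
                return None
        elif p != t:
            return None
    return bindings

def choose_join_key(patterns: List[Triple]) -> str:
    """Assumed heuristic: join on the variable that occurs in the most patterns."""
    counts = Counter(v for pat in patterns for v in dict.fromkeys(pat) if is_var(v))
    return counts.most_common(1)[0][0]

def multiway_join(triples: List[Triple], patterns: List[Triple]) -> List[Bindings]:
    key = choose_join_key(patterns)
    # Map: emit (value of join variable, (pattern index, bindings)).
    groups: Dict[str, Dict[int, List[Bindings]]] = defaultdict(lambda: defaultdict(list))
    for triple in triples:
        for i, pat in enumerate(patterns):
            b = match(pat, triple)
            if b is not None and key in b:
                groups[b[key]][i].append(b)
    # Reduce: for each join-key value, combine one binding from every pattern.
    results: List[Bindings] = []
    for per_pattern in groups.values():
        if len(per_pattern) < len(patterns):
            continue  # some pattern has no match for this key value
        for combo in product(*(per_pattern[i] for i in range(len(patterns)))):
            merged: Bindings = {}
            if all(merged.setdefault(k, v) == v for b in combo for k, v in b.items()):
                results.append(merged)
    return results

triples = [("alice", "type", "Student"), ("alice", "advisor", "bob"),
           ("carol", "type", "Student"), ("dave", "advisor", "bob")]
patterns = [("?s", "type", "Student"), ("?s", "advisor", "?prof")]
print(multiway_join(triples, patterns))  # [{'?s': 'alice', '?prof': 'bob'}]
```

Joining all patterns that share `?s` in one pass avoids chaining one two-way join job per pattern, which is the kind of unnecessary iteration a well-chosen join key helps avoid.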
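The two partitioning steps of the storage scheme by Husain et al. can be sketched in Python, with a dictionary from file name to rows standing in for files on HDFS. The predicate URI `rdf:type`, the file-naming convention (`type_<Class>`, `<predicate>_<ObjectType>`), and the function names are illustrative assumptions; the sketch also assumes each resource has at most one class, and objects without a known type (for example, literals) stay in their predicate file.

```python
from collections import defaultdict
from typing import Dict, List, Tuple

Triple = Tuple[str, str, str]
Pair = Tuple[str, str]
TYPE = "rdf:type"

def predicate_split(triples: List[Triple]) -> Dict[str, List[Pair]]:
    """PS: one file per predicate, storing (subject, object) pairs."""
    files: Dict[str, List[Pair]] = defaultdict(list)
    for s, p, o in triples:
        files[p].append((s, o))
    return dict(files)

def predicate_object_split(ps_files: Dict[str, List[Pair]]) -> Dict[str, List[Pair]]:
    """POS: split the type file by class, other files by the type of their objects."""
    type_of = dict(ps_files.get(TYPE, []))  # resource -> class (assumes one class each)
    files: Dict[str, List[Pair]] = defaultdict(list)
    for pred, pairs in ps_files.items():
        for s, o in pairs:
            if pred == TYPE:
                files[f"type_{o}"].append((s, o))
            elif o in type_of:
                files[f"{pred}_{type_of[o]}"].append((s, o))
            else:
                files[pred].append((s, o))  # object type unknown, e.g. a literal
    return dict(files)

triples = [("alice", TYPE, "Student"), ("bob", TYPE, "Professor"),
           ("alice", "advisor", "bob"), ("alice", "name", '"Alice"')]
pos = predicate_object_split(predicate_split(triples))
print(sorted(pos))  # ['advisor_Professor', 'name', 'type_Professor', 'type_Student']
```

With this layout, a query that only needs advisors who are professors can read the `advisor_Professor` file instead of scanning every `advisor` triple.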
