and consequently performance. Under edge consistency, the update function at a vertex has exclusive read-write access to its vertex and adjacent edges, but only read access to adjacent vertices. This relaxes consistency and allows more parallelism. Finally, under vertex consistency, the update function at a vertex has exclusive write access to only its own vertex, which allows the update functions at all vertices to run simultaneously. This provides the maximum possible parallelism but, in return, the most relaxed consistency. GraphLab allows users to choose whichever consistency model suits their applications.
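The effect of the two models described above on parallelism can be illustrated with a minimal sketch. It is not GraphLab's API: the function names `exclusive_scope` and `can_run_together`, the string labels `"edge"` and `"vertex"`, and the adjacency-dictionary graph are all hypothetical choices made for illustration. The sketch assumes that two update functions may run at the same time only if the sets of items they need exclusively do not overlap.

```python
def exclusive_scope(graph, v, model):
    """Return the items the update function at vertex v needs exclusively.

    graph is an adjacency dict (vertex -> set of neighbours); model is a
    hypothetical label, "edge" or "vertex", for the two models in the text.
    """
    scope = {("vertex", v)}  # both models hold the vertex itself
    if model == "edge":
        # Edge consistency also holds every adjacent edge exclusively.
        scope |= {("edge", frozenset((v, u))) for u in graph[v]}
    elif model != "vertex":
        raise ValueError(f"unknown consistency model: {model}")
    return scope


def can_run_together(graph, u, v, model):
    """True if the updates at u and v need no item in common exclusively."""
    return exclusive_scope(graph, u, model).isdisjoint(
        exclusive_scope(graph, v, model)
    )


# A three-vertex path: a - b - c
path = {"a": {"b"}, "b": {"a", "c"}, "c": {"b"}}
```

On this path graph, the adjacent vertices `a` and `b` share an edge and so cannot be updated together under edge consistency, whereas `a` and `c` can. Under vertex consistency any two distinct vertices can be updated together, which matches the greater parallelism described above.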

1.5.2.2 The Message-Passing Programming Model

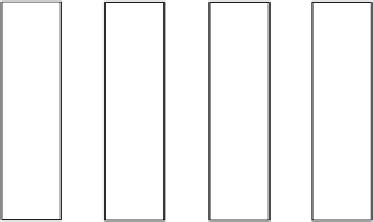

In the message-passing programming model, distributed tasks communicate by sending and receiving messages. In other words, distributed tasks do not share an address space through which they could access each other's memories (see Figure 1.6). Accordingly, the abstraction provided by the message-passing programming model is similar to that of processes (and not threads) in operating systems. The message-passing programming model incurs communication overheads (e.g., variable network latency and potentially excessive data transfers) for explicitly sending and receiving messages that contain data. Nonetheless, the explicit sends and receives also implicitly synchronize the sequence of operations performed by the communicating tasks. Figure 1.7 shows an example that transforms the sequential program of Figure 1.5a into a distributed program using message passing.

Initially, it is assumed that only a main task with id = 0 has access to arrays b and c. Thus, assuming that only two tasks exist, the main task first sends parts of the arrays to the other task (using an explicit send operation) to split the work evenly between the two tasks. The other task receives the required data (using an explicit receive operation) and performs a local sum. When done, it sends its local sum back to the main task. Likewise, the main task performs a local sum on its part of the data and collects the local sum of the other task before aggregating and printing a grand sum.
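The two-task exchange described above can be sketched as follows. This is not the program of Figure 1.7, which is not reproduced here. It assumes that the sequential program adds b and c element-wise and sums the result, and the names `worker` and `grand_sum` are hypothetical. To stay runnable in a single process, the sketch stands in for the two distributed tasks with threads that exchange data only through queues used as message channels; the slices are copied into the message, so no array is shared.

```python
import queue
import threading


def worker(inbox, outbox):
    """The other task: receive data, compute a local sum, send it back."""
    b_part, c_part = inbox.get()  # explicit receive (blocks until sent)
    local_sum = sum(x + y for x, y in zip(b_part, c_part))
    outbox.put(local_sum)  # explicit send of the local sum


def grand_sum(b, c):
    """The main task (id = 0): split the work, then aggregate both sums."""
    to_worker = queue.Queue()  # channel: main task -> other task
    to_main = queue.Queue()    # channel: other task -> main task
    other = threading.Thread(target=worker, args=(to_worker, to_main))
    other.start()

    mid = len(b) // 2
    # Send copies of the second halves; the other task never sees b or c.
    to_worker.put((list(b[mid:]), list(c[mid:])))

    # Local sum over the main task's own half.
    local_sum = sum(x + y for x, y in zip(b[:mid], c[:mid]))

    # Receiving blocks until the other task has finished, so the
    # exchange itself synchronizes the two tasks.
    remote_sum = to_main.get()
    other.join()
    return local_sum + remote_sum
```

Calling `grand_sum(b, c)` with two equal-length lists returns the aggregated total. In a real distributed setting the queues would be replaced by network sends and receives between processes on different nodes.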

[Figure labels: tasks T1 to T4, each shown with S1 and S2, on Node 1 to Node 4; message passing over the network.]

FIGURE 1.6 Tasks running in parallel using the message-passing programming model, whereby the interactions happen only via sending and receiving messages over the network.
